Process Intensification for Sustainable Chemistry: Enhancing Efficiency and Green Metrics in Biomedical Research


Abstract

This article provides a comprehensive analysis of Process Intensification (PI) as a transformative paradigm for advancing sustainable chemistry in pharmaceutical and biomedical research. It explores foundational principles, including the four domains of intensification—spatial, thermodynamic, functional, and temporal—and their critical role in minimizing environmental impact. The content details innovative methodologies and applications, from reactive distillation and continuous flow reactors to biocatalytic processes, supported by real-world case studies in biotherapeutics manufacturing. It further addresses key challenges in scaling and control, offering troubleshooting strategies and optimization techniques using advanced control systems and digital twins. Finally, the article establishes a framework for validation through green chemistry metrics, techno-economic analysis, and comparative assessments against traditional processes, providing researchers and drug development professionals with practical insights for implementing PI to achieve superior sustainability and economic outcomes.

The Principles and Drivers of Process Intensification in Green Chemistry

Process Intensification (PI) represents a transformative approach in chemical engineering and process design, aimed at dramatically improving process efficiency, sustainability, and economics. It fundamentally rethinks how processes are designed and operated to achieve significant improvements in resource utilization, equipment size reduction, and environmental performance [1]. The core philosophy moves beyond incremental optimization to achieve revolutionary improvements through novel equipment, processing methods, and system-level integration.

The evolution of PI has reached a new stage termed Process Intensification 4.0 (PI4.0), which incorporates data-driven approaches and the design principles of Industry 4.0. This framework utilizes artificial intelligence and machine learning to accelerate equipment design, enhance predictive control, and streamline process optimization, thereby enabling system-level transformations toward more sustainable and circular processes [2]. For researchers in sustainable chemistry and drug development, PI offers pathways to develop more compact, efficient, and environmentally friendly manufacturing processes that align with green engineering principles and circular economy goals.

Quantifying Process Intensification: Evaluation Methods

Evaluating the success of PI implementation requires robust methodologies that can compare conventional and intensified processes across multiple criteria. The Intensification Factor (IF) provides a straightforward, quantitative decision-making tool that lumps both quantitative and qualitative factors into a single, easy-to-interpret number [3].

Table 1: Factors for Calculating the Intensification Factor

| Evaluation Category | Specific Metrics | Weighting Considerations |
|---|---|---|
| Economic Factors | Capital expenditure (CAPEX), Operational expenditure (OPEX), Return on investment (ROI) | Typically high weighting in business decisions |
| Technical Factors | Energy consumption, Conversion/Selectivity, Process steps reduction, Equipment footprint | Core engineering performance indicators |
| Environmental Factors | CO₂ emissions, Waste generation, Resource efficiency | Increasingly important for sustainability goals |
| Operational Factors | Flexibility, Safety, Control complexity, Reliability | Impacts practical implementation and risk |

The calculation method is based on simple arithmetic operations, making it robust for cases with limited information. The step-by-step approach involves:

- Identifying relevant factors for the specific process being evaluated

- Assigning performance values for both conventional and intensified processes

- Applying weighting factors based on expert judgment and project priorities

- Calculating the overall Intensification Factor using the established formula

The final IF value provides a clear indication: if larger than 1, the intensified alternative is superior to the existing process; if smaller than 1, the conventional process remains better [3]. This method serves not only experts in PI but also helps convince stakeholders outside the discipline and can be effectively used in educational settings for training young professionals in innovation strategies.
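
The text above does not reproduce the formula from [3], so the following is only a minimal sketch under a stated assumption: the IF is taken to be a weighted product of conventional-to-intensified ratios, inverted for factors where a higher value is better. The `Factor` class, the weights, and every number below are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    name: str
    conventional: float           # value for the existing process
    intensified: float            # value for the PI alternative
    weight: float = 1.0           # importance exponent from expert judgment (assumed form)
    lower_is_better: bool = True  # False for metrics such as conversion


def intensification_factor(factors):
    """Assumed form: product of (conventional / intensified) ** weight.

    A result above 1 favours the intensified option, matching the
    interpretation given in the text.
    """
    result = 1.0
    for f in factors:
        if f.conventional <= 0 or f.intensified <= 0:
            raise ValueError(f"{f.name}: values must be positive")
        ratio = f.conventional / f.intensified
        if not f.lower_is_better:
            ratio = 1.0 / ratio
        result *= ratio ** f.weight
    return result


# Hypothetical values, for illustration only
factors = [
    Factor("energy use (GJ/t)", 10.0, 5.0),
    Factor("process steps", 4.0, 2.0),
    Factor("conversion (%)", 80.0, 90.0, lower_is_better=False),
]
if_value = intensification_factor(factors)
print(f"IF = {if_value:.2f}")
```

Because the assumed form is multiplicative, a factor with no available data can simply be left out, which is consistent with the method's stated robustness to limited information.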

Implementation Protocols for Process Intensification

Protocol 1: Systematic PI Implementation Framework

Implementing PI requires a structured methodology to ensure technical and economic success. The following protocol outlines a comprehensive approach:

Step 1: Process Analysis and Baseline Establishment

- Conduct thorough analysis of existing process to identify limitations and improvement opportunities

- Establish quantitative baseline metrics for energy consumption, conversion rates, separation efficiency, and environmental impact

- Document current process topology, operational constraints, and control strategies [1]

Step 2: PI Technology Screening and Selection

- Evaluate applicable PI technologies based on process requirements and constraints

- Consider integration of unit operations (e.g., reaction-separation), alternative energy sources, and novel equipment designs

- Assess feasibility of technologies such as reactive distillation, dividing wall columns, microwave-assisted reactions, or membrane separations [1]

Step 3: Intensification Factor Calculation

- Apply the IF methodology to compare conventional and proposed intensified processes

- Incorporate economic, technical, environmental, and operational factors specific to the application

- Use the calculated IF to guide decision-making and technology selection [3]

Step 4: Control Strategy Development

- Design appropriate control systems capable of handling the increased complexity and nonlinearity of intensified processes

- Implement advanced control strategies such as Model Predictive Control (MPC) or AI-driven methods where traditional PID control is inadequate [1]

- Develop real-time optimization capabilities to maintain optimal performance under varying conditions

Step 5: Experimental Validation and Scaling

- Conduct laboratory-scale experiments to validate proposed intensification approach

- Use iterative design strategy supported by digital twins and machine learning algorithms to accelerate development [2]

- Establish scale-up protocol considering equipment design limitations and operational flexibility requirements

Protocol 2: Electrification-Based Process Intensification

Electrification represents a major pathway for PI in the chemical industry, supporting decarbonization goals when coupled with renewable energy sources [4]. This protocol details methodology for implementing electrification technologies:

Step 1: Technology Matching and Selection

- Identify thermal processes suitable for electric heating technologies (e.g., electric furnaces, induction heating, microwave-assisted heating)

- Evaluate separation processes amenable to electrification (e.g., membrane separations, heat pump-assisted distillation)

- Assess reaction systems for electrochemical synthesis or plasma-assisted reactions [4]

Step 2: Process Integration and Design

- Design integrated process layouts that maximize benefits of electrification

- Implement heat integration and energy recovery systems to optimize efficiency

- Develop modular designs where applicable to enhance flexibility and reduce capital costs

Step 3: Renewable Energy Integration

- Assess availability and reliability of low-carbon electricity sources

- Design systems for handling intermittency of renewable energy where applicable

- Implement energy storage solutions to ensure continuous operation

Step 4: Performance Validation

- Conduct experimental trials to validate performance under electrified operation

- Measure key performance indicators including energy efficiency, conversion rates, and product quality

- Compare results with conventional processes using the IF methodology

Table 2: Performance Comparison of Electric Heating Technologies

| Technology | Typical Efficiency (%) | Operating Temperature Range | Best-Case Efficiency (%) | Representative Applications |
|---|---|---|---|---|
| Electric Resistance Furnaces | 85-95 | Medium to High | >95 | Petrochemical cracking, Ceramics processing |
| Induction Heating | 65-85 | Medium to Very High | 90 | Metal processing, Catalytic reactions |
| Microwave-Assisted Heating | 50-80 | Low to Medium | 85 | Polymerization, Green chemistry, Ceramics |
| Conventional Fuel Furnaces | 23-70 (with 50-70% heat loss) | Very High | 70 | Various industrial heating processes |
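
To make the efficiency ranges in Table 2 concrete, the sketch below converts them into the energy input needed to deliver a fixed amount of useful heat. The 100 MWh duty and the helper name `input_energy_range` are hypothetical. The comparison is at the point of use only and assumes nothing about how the electricity or fuel was produced.

```python
# Typical efficiency ranges (%) copied from Table 2
EFFICIENCY_RANGE = {
    "Electric resistance furnace": (85, 95),
    "Induction heating": (65, 85),
    "Microwave-assisted heating": (50, 80),
    "Conventional fuel furnace": (23, 70),
}


def input_energy_range(useful_heat_mwh, eff_range):
    """Return (best_case, worst_case) input energy for a given useful heat."""
    low_pct, high_pct = eff_range
    best = useful_heat_mwh / (high_pct / 100)
    worst = useful_heat_mwh / (low_pct / 100)
    return best, worst


useful_heat = 100.0  # MWh delivered to the process (hypothetical duty)
for tech, eff in EFFICIENCY_RANGE.items():
    best, worst = input_energy_range(useful_heat, eff)
    print(f"{tech:30s} {best:6.1f} to {worst:6.1f} MWh input")
```

The spread between best and worst case is widest for the fuel-fired furnace, which is why measured site data, rather than typical ranges, should feed the IF comparison in Step 4.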

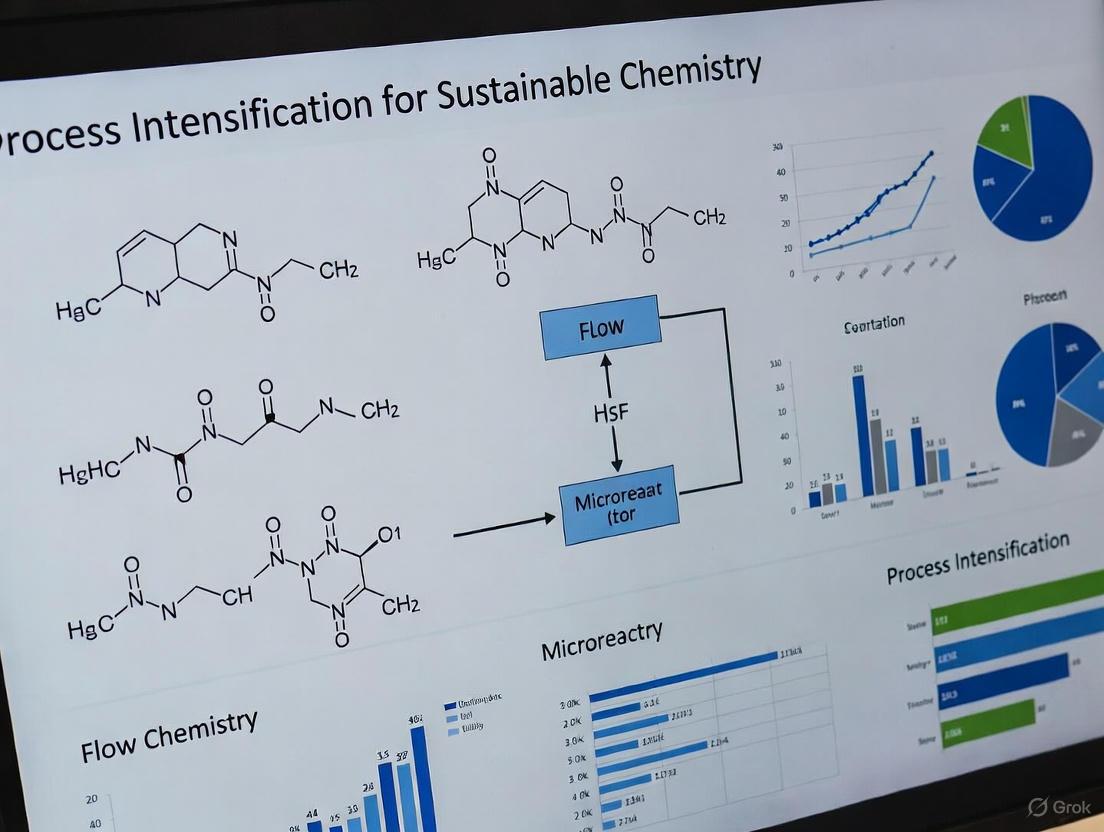

Visualization of Process Intensification Workflows

PI Implementation Decision Framework

Process Intensification 4.0 Methodology

Research Reagent Solutions for PI Experimentation

Table 3: Essential Research Reagents and Materials for PI Experiments

| Reagent/Material | Function in PI Research | Application Examples |
|---|---|---|
| Heterogeneous Catalysts | Enable integrated reaction-separation systems; improve selectivity in intensified reactors | Reactive distillation, Membrane reactors |
| Ionic Liquids | Serve as green solvents and catalysts in multifunctional reactors; enhance separation efficiency | Extractive distillation, Absorption intensification |
| Structured Packings | Maximize surface area for heat and mass transfer in compact equipment | Dividing wall columns, Intensified separation |
| Advanced Membrane Materials | Enable selective separations with low energy requirements; facilitate process integration | Membrane reactors, Hybrid separation systems |
| Microwave-Susceptible Catalysts | Enhance reaction rates and selectivity under microwave irradiation | Microwave-assisted reactions, Green chemistry |
| Electrocatalytic Materials | Enable electrochemical synthesis pathways for process electrification | CO₂ conversion, Electrosynthesis |
| Thermomorphic Solvents | Facilitate reaction and separation through temperature-dependent phase behavior | Biphasic catalytic systems, Reaction intensification |

Process Intensification represents a fundamental shift from conventional process design toward more sustainable, efficient, and compact manufacturing systems. The methodologies, protocols, and tools presented in these application notes provide researchers and development professionals with practical frameworks for implementing PI in various contexts, including pharmaceutical development and sustainable chemistry.

The integration of advanced evaluation methods like the Intensification Factor, combined with emerging technologies in electrification and Process Intensification 4.0, creates powerful pathways for achieving dramatic improvements in process efficiency and sustainability. By adopting these structured approaches and leveraging the latest developments in data-driven optimization, researchers can successfully navigate the transition from paradigm shift to practical reality in process intensification.

Process Intensification (PI) represents a transformative approach in chemical engineering, aimed at developing radically innovative equipment and processing methods that can bring substantial improvements in efficiency, cost, product quality, safety, and health over conventional process designs based on unit operations [5]. At its philosophical core, PI encourages engineers to move beyond incremental optimization and instead radically rethink how reactions and separations should occur, with the ultimate goals of creating smaller and more compact plants, lowering energy consumption and operational costs, reducing waste and emissions, enabling safer processes with smaller hazardous inventories, and accelerating scale-up from laboratory to industrial scale [6].

The conceptual foundation of modern PI rests on four governing principles first outlined by van Gerven and Stankiewicz in their seminal work "The Fundamentals of Process Intensification" [5]. These principles provide a systematic framework for designing intensified processes by focusing on molecular-level interactions, uniformity of processing conditions, optimization of fundamental driving forces, and synergistic integration of operations. When implemented effectively, these principles enable chemical manufacturers to achieve dramatic improvements in process efficiency and sustainability performance, in reported cases reducing plant size by up to 100-fold while also cutting capital costs, energy consumption, and carbon footprints [6]. This application note explores these four principles in detail within the context of sustainable chemistry research, providing both theoretical foundations and practical implementation guidance for researchers and drug development professionals.

The Four Governing Principles

Principle 1: Maximize Molecular Effectiveness

The first principle of Process Intensification focuses on maximizing the effectiveness of molecular events by fundamentally altering reaction rates through precise management of molecular collision frequency, energy transfer, and timing [5]. In conventional chemical processing, molecular interactions often occur inefficiently due to poor mixing, inadequate energy transfer, or suboptimal reaction pathways. PI addresses these limitations through innovative reactor designs and processing techniques that enhance the probability of successful molecular interactions leading to desired products.

Key Applications and Technologies:

- Microreactors: These systems feature channels smaller than 1 mm where reactions occur under tightly controlled conditions, providing excellent heat and mass transfer characteristics that lead to faster reaction rates and higher selectivity [6]. The extremely high surface-to-volume ratios in microreactors enhance molecular collision frequency while enabling precise control over residence time distribution.

- Alternative Energy Inputs: Non-conventional activation methods such as microwave-assisted reactors enable faster heating and selective activation of specific molecular pathways, while ultrasound reactors utilize intensified mixing and cavitation effects to enhance molecular interactions [6]. Plasma reactors generate reactive species that unlock reaction pathways often impossible under conventional conditions.

- Advanced Mixing Technologies: Static mixers, rotating packed beds, and other high-shear devices create intense mixing conditions that reduce diffusion limitations and ensure uniform molecular experiences, thereby increasing the probability of effective molecular collisions [7].
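
The surface-to-volume argument for microreactors can be checked with simple geometry. For a long cylindrical channel of diameter d, wall area over volume is (πdL)/(πd²L/4) = 4/d. The sketch below applies this to the 1 mm channel size quoted above and, as a hypothetical point of comparison, to a 1 m diameter vessel. End caps and internals are ignored.

```python
def surface_to_volume(diameter_m):
    """Wall area per unit volume of a long cylindrical channel, 4 / d, in m^2/m^3."""
    if diameter_m <= 0:
        raise ValueError("diameter must be positive")
    return 4.0 / diameter_m


microchannel = surface_to_volume(1e-3)  # 1 mm channel, the upper bound quoted for microreactors
large_vessel = surface_to_volume(1.0)   # 1 m diameter vessel (hypothetical comparison)

print(f"1 mm channel: {microchannel:.0f} m^2/m^3")
print(f"1 m vessel:   {large_vessel:.0f} m^2/m^3")
print(f"ratio:        {microchannel / large_vessel:.0f}x")
```

Since the ratio scales as 1/d, every tenfold reduction in channel diameter gives ten times more wall area per unit of reacting volume for heat removal.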

Principle 2: Ensure Uniform Molecular Experience

The second PI principle emphasizes providing each molecule with a uniform processing experience by minimizing velocity, temperature, and concentration gradients across the reaction environment [5]. In traditional chemical reactors, heterogeneous conditions lead to varying product quality, reduced selectivity, and inefficient resource utilization. PI technologies address this challenge by creating highly controlled environments where all molecules experience nearly identical processing conditions throughout their residence in the system.

Implementation Strategies:

- Advanced Reactor Designs: Microreactors and structured reactors maintain precise temperature control and concentration profiles, ensuring that all molecules undergo nearly identical reaction conditions regardless of their position within the reactor or time of entry [6]. This uniformity is particularly valuable in pharmaceutical manufacturing where consistent product quality is paramount.

- Process Analytical Technology (PAT): Implementation of real-time monitoring and control systems enables continuous adjustment of processing parameters to maintain uniform conditions despite external disturbances or feed variations [1]. These systems utilize in-line sensors, spectroscopic probes, and automated control algorithms to detect and correct deviations from optimal processing conditions.

- Enhanced Heat and Mass Transfer: Technologies such as heat-integrated reactors, spinning disk reactors, and oscillatory baffled reactors significantly improve transfer rates to eliminate hot spots, cold spots, or concentration gradients that lead to non-uniform molecular experiences [7]. These systems are particularly valuable for highly exothermic or endothermic reactions where thermal management is challenging.

Principle 3: Optimize Driving Forces and Maximize Surface Areas

The third principle involves optimizing the fundamental driving forces for heat and mass transfer while simultaneously maximizing the specific surface areas available for these transfer processes [5]. In conventional equipment, transfer rates are often limited by inadequate interfacial area or suboptimal driving forces. PI addresses these limitations through innovative designs that enhance both factors simultaneously.

Technical Approaches:

- Structured Packing and Internals: Advanced column internals, structured catalysts, and engineered surfaces provide dramatically increased surface areas for heat and mass transfer while maintaining optimal flow distributions and minimizing pressure drops [1]. These technologies are particularly valuable in separation processes such as distillation, absorption, and extraction.

- Membrane Technology: Integration of selective membranes within reactor systems maximizes concentration driving forces by continuously removing products or introducing reactants at optimal rates [6]. Membrane reactors are especially effective for equilibrium-limited reactions where continuous product removal drives reactions toward completion.

- Centrifugal and Gravity-Based Enhancements: Rotating packed beds and other equipment utilizing centrifugal forces can achieve mass transfer coefficients orders of magnitude higher than conventional systems by creating extremely thin films and renewing interfaces continuously [7]. These technologies are most valuable for gas-liquid systems in which mass transfer, rather than reaction kinetics, limits the overall rate.

Principle 4: Maximize Synergistic Effects

The fourth principle focuses on maximizing synergistic effects between partial processes by strategically combining multiple unit operations or phenomena within a single apparatus [5]. Rather than treating chemical processes as sequences of discrete steps, PI seeks to integrate operations to create synergistic effects where the combined performance exceeds the sum of individual components.

Integration Strategies:

- Multifunctional Reactors: Reactive distillation columns combine chemical reaction and product separation within a single unit, enabling continuous removal of products that drives equilibrium-limited reactions toward completion while reducing capital costs and energy requirements [7] [6]. Eastman Chemical Company's methyl acetate production process exemplifies this approach, where 11 conventional process steps were reduced to a single reactive distillation column [5].

- Hybrid Separation Systems: Combining different separation techniques such as distillation with adsorption, extraction, or crystallization can overcome limitations of individual methods while reducing energy consumption and improving product purity [1]. Dividing-wall columns represent another successful application of this principle, enabling separation of three or more components in a single column with reduced energy requirements.

- Process Integration with Energy Management: Heat-integrated reactors and separation systems utilize process streams for heating or cooling needs, significantly reducing external utility requirements and improving overall energy efficiency [8]. The HDA process case study demonstrates how waste heat recovery can achieve 84% energy savings through strategic integration.

Quantitative Performance Metrics

Table 1: Comparative Performance Metrics of Intensified vs. Conventional Processes

| Performance Indicator | Conventional Process | Intensified Process | Improvement | Application Context |
|---|---|---|---|---|
| Energy Consumption | Baseline | 38-84% reduction | 38-84% savings | Dimethyl carbonate production; HDA process [5] [8] |
| Equipment Footprint | Multiple units | Single multifunctional unit | Up to 100x reduction | Reactive distillation [6] |
| Conversion/Selectivity | Equilibrium-limited | Enhanced via integration | 70% to 88.9% conversion | HDA process with hydrogen recycle [8] |
| Capital Cost (CAPEX) | Baseline | 20-80% reduction | Significant savings | Reactive distillation systems [6] |
| Operating Cost (OPEX) | Baseline | Proportional to energy savings | Substantial reduction | Most intensified systems [6] |
| Reaction Time | Hours to days | Seconds to minutes | Order of magnitude reduction | Microreactor systems [7] |

Table 2: Sustainability Impact Alignment with UN Sustainable Development Goals

| UN Sustainable Development Goal | PI Contribution | Quantitative Impact | Relevant Technologies |
|---|---|---|---|
| Goal 6: Clean Water and Sanitation | Reduced water waste and improved water management | 50% of water use in European chemical industry addressed | Closed-loop systems, membrane filtration, water recycling [5] |
| Goal 7: Affordable and Clean Energy | Decreased energy consumption and renewable energy integration | 38.33% energy savings in dimethyl carbonate production | Hybrid heat integration, continuous processing [5] |
| Goal 9: Industry, Innovation, Infrastructure | Modernization of outdated industrial infrastructure | Significant utility requirement reduction | Multifunctional reactors, compact equipment [5] |
| Goal 12: Responsible Consumption and Production | Enhanced process safety and minimized waste generation | Reduced waste and byproduct generation | Continuous processing, integrated systems [5] |
| Goal 13: Climate Action | Accelerated renewable energy use and compact equipment | Reduced CO₂ emissions through electrification | Electrochemical reactors, electrically heated micro-reactors [5] |

Experimental Protocol: Process Intensification of Hydrodealkylation (HDA) for Benzene Production

Background and Objective

This protocol details the implementation of PI principles to the conventional hydrodealkylation (HDA) process for benzene production through heat integration and hydrogen recycle optimization [8]. The objective is to demonstrate how applying the four governing principles of PI can significantly improve energy efficiency, conversion rates, and economic viability in a well-established industrial process. The intensification strategy focuses on maximizing molecular effectiveness through improved reaction conditions, ensuring uniform molecular experience via optimized reactor design, optimizing driving forces through heat integration, and maximizing synergy via process integration.

Materials and Equipment

Table 3: Research Reagent Solutions and Essential Materials

| Material/Equipment | Specifications | Function/Purpose | Supplier/Alternative |
|---|---|---|---|
| Process Simulation Software | Aspen HYSYS V12.2 or equivalent | Process modeling, energy balance calculation, and optimization | AspenTech [8] |
| Toluene Feed | High purity (>99.5%) | Primary reactant for benzene production | Standard chemical supplier |
| Hydrogen Gas | High purity (>99.9%) | Reactant for dealkylation reaction | Gas supplier or electrolysis unit |
| Cryogenic Separation Unit | Capable of -100°C to -150°C | Hydrogen recovery and purification | Custom or modular unit |
| Heat Exchangers | Shell and tube or plate type | Waste heat recovery for feed preheating | Standard process equipment supplier |
| Catalyst | Conventional HDA catalyst (e.g., Cr₂O₃/Al₂O₃) | Promotion of dealkylation reaction | Catalyst manufacturer |

Procedure

Step 1: Process Modeling and Baseline Establishment

- Develop a comprehensive process model using Aspen HYSYS software incorporating all unit operations of the conventional HDA process: reactor, waste heat boiler (WHB-01), partial condenser (PC-01), and separation units [8].

- Establish baseline performance metrics including toluene conversion rate (typically ~70%), hydrogen consumption (125 kmol/h), toluene feed rate (196 kmol/h), and energy requirements per unit of benzene produced.

- Validate the model against literature data or experimental results to ensure accuracy of subsequent intensification modifications.

Step 2: Hydrogen Recycle Loop Implementation (Principles 1 & 4)

- Modify the process flow sheet to incorporate a hydrogen recycle loop from the purge gas stream back to the reactor inlet [8].

- Integrate a cryogenic separation unit operating at approximately -120°C to recover hydrogen from the methane byproduct stream.

- Optimize the recycle ratio to balance hydrogen utilization efficiency with separation energy requirements, targeting a reduction in fresh hydrogen feed from 125 kmol/h to 111 kmol/h.

- Implement control systems to maintain stable reactor operation despite recycled stream composition variations.

Step 3: Heat Integration System Design (Principles 2 & 3)

- Identify waste heat sources within the process, particularly from WHB-01 and PC-01 streams [8].

- Design a heat exchanger network to transfer recovered thermal energy to preheat both fresh and recycled toluene feeds prior to reactor introduction.

- Implement temperature control systems to ensure uniform heating across all feed streams and maintain optimal reactor inlet temperatures.

- Calculate expected energy savings (targeting 84% reduction in external heating requirements) and validate through simulation.

Step 4: Process Integration and Optimization

- Integrate the hydrogen recycle and heat integration systems into a unified intensified process flow scheme.

- Optimize operating parameters including reactor temperature and pressure, hydrogen-to-toluene ratio, and heat exchanger approach temperatures to maximize benzene yield and purity.

- Implement advanced process control strategies to manage the dynamic interactions between the integrated units and maintain stable operation under varying feed conditions.

Step 5: Performance Validation

- Operate the intensified process model under steady-state conditions and compare key performance indicators against the conventional baseline.

- Verify achievement of target metrics: increased conversion rate from 70% to 88.9%, reduced hydrogen and toluene consumption to 111 kmol/h for both feeds, and 84% energy savings [8].

- Conduct economic analysis to quantify capital and operational cost savings, including valorization of methane byproduct stream.
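
The validation in Step 5 amounts to comparing simulated key performance indicators with the targets quoted from [8]. The sketch below shows one way to script that check outside the simulator. The 2% relative tolerance, the function names, and the `simulated` values are assumptions for illustration and are not results from [8].

```python
# Figures quoted in this protocol (from [8])
BASELINE = {"toluene_conversion_pct": 70.0, "fresh_h2_kmol_h": 125.0}
TARGET = {"toluene_conversion_pct": 88.9, "fresh_h2_kmol_h": 111.0}
TARGET_ENERGY_SAVINGS_PCT = 84.0


def percent_change(before, after):
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


def meets_targets(simulated, rel_tol=0.02):
    """True if every simulated KPI lies within rel_tol of its target (tolerance is assumed)."""
    return all(
        abs(simulated[key] - target) <= rel_tol * abs(target)
        for key, target in TARGET.items()
    )


h2_change = percent_change(BASELINE["fresh_h2_kmol_h"], TARGET["fresh_h2_kmol_h"])
conversion_gain = TARGET["toluene_conversion_pct"] - BASELINE["toluene_conversion_pct"]
residual_heating = 100.0 - TARGET_ENERGY_SAVINGS_PCT

print(f"Fresh hydrogen feed change: {h2_change:.1f} %")
print(f"Conversion gain: {conversion_gain:.1f} percentage points")
print(f"External heating remaining: {residual_heating:.0f} % of baseline")

# Hypothetical simulator output, not a result from [8]
simulated = {"toluene_conversion_pct": 88.1, "fresh_h2_kmol_h": 112.0}
print("Targets met:", meets_targets(simulated))
```

Keeping the targets in one dictionary makes it straightforward to add further indicators, such as benzene purity, once the model reports them.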

Safety Considerations

- Implement adequate safety interlocks for hydrogen handling due to flammability concerns.

- Ensure proper ventilation and gas detection systems for potential hydrogen or benzene leaks.

- Include pressure relief devices and emergency shutdown procedures for cryogenic operations.

- Adhere to all relevant safety protocols for high-temperature and high-pressure operations.

Workflow Visualization

HDA Process Intensification Workflow

Figure 1: Systematic workflow for applying the four PI principles to the HDA process, showing the sequential implementation of each principle from baseline establishment through final performance validation.

Advanced Control Strategy for PI

Figure 2: Control architecture for intensified processes, showing the integration of model predictive control, digital twins, and AI-driven optimization to manage the complexity of integrated unit operations and maintain optimal performance under varying conditions.

Process Intensification (PI) is a practice-driven branch of chemical engineering focused on achieving dramatic enhancements in manufacturing and processing. The core goal is to develop novel apparatuses and techniques that substantially decrease equipment-size/production-capacity ratio, energy consumption, or waste production, resulting in cheaper and more sustainable technologies [9] [10]. A fundamental framework for PI classifies these innovations into four core domains: Spatial, Thermodynamic, Functional, and Temporal [9] [11]. Applying the principles of these domains is critical for identifying PI opportunities that align with the objectives of sustainable chemistry, enabling the design of cleaner, more compact, and energy-efficient processes [12] [13]. This document outlines detailed application notes and experimental protocols for leveraging these domains within sustainable chemistry research, particularly relevant to researchers and drug development professionals.

The Four Core Domains of Intensification

The following diagram illustrates the logical relationships and primary objectives of the four core domains of process intensification.

Diagram 1: The Four Domains of Process Intensification

Spatial Domain

The Spatial Domain focuses on maintaining a controlled structure within equipment to avoid variability in products and achieve dramatic reductions in plant size [9] [11]. This involves redesigning process equipment to create uniformly distributed conditions, which enhances transfer phenomena and reduces diffusion pathways [10].

Exemplar Technology: Microreactors

Microreactors are characterized by channel sizes in the micrometer range, where diffusion becomes the dominant mixing mechanism [10]. This design leads to superior control over reaction parameters, resulting in enhanced conversion and selectivity, especially for fast, exothermic reactions [10].
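
Why diffusion becomes a practical mixing mechanism at the micrometer scale follows from the order-of-magnitude estimate t ≈ L²/D for diffusion across a distance L. The sketch below evaluates it for three length scales, assuming a diffusivity of 1e-9 m²/s, a typical value for small molecules in water. The function name and the chosen lengths are illustrative.

```python
def diffusion_time(length_m, diffusivity_m2_s=1e-9):
    """Order-of-magnitude time (s) to diffuse across length_m: t ~ L^2 / D."""
    if length_m <= 0 or diffusivity_m2_s <= 0:
        raise ValueError("length and diffusivity must be positive")
    return length_m ** 2 / diffusivity_m2_s


# Illustrative length scales: 1 cm, 1 mm, 100 micrometers
for length in (1e-2, 1e-3, 1e-4):
    print(f"L = {length * 1e6:8.0f} um  ->  t ~ {diffusion_time(length):.0f} s")
```

The quadratic dependence on L means that narrowing a channel tenfold shortens the diffusion time a hundredfold, so diffusion alone can homogenize a 100 µm channel within seconds.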

Table 1: Quantitative Performance of Spatial Intensification Equipment

| Equipment | Key Characteristic | Reported Enhancement | Application Example |
|---|---|---|---|
| Microreactors | Channel sizes in micrometers; diffusion-dominated mixing [10]. | Increased conversion and selectivity [10]. | Chemical synthesis, biofuel production [10]. |
| Compact Heat Exchangers | Area densities of 200–10,000 m²/m³; hydraulic diameters <5 mm [10]. | High efficiency heat transfer in a small footprint [10]. | Process heating and cooling [10]. |
| Spinning Disk Reactors | Reactions occur in thin films on a rotating surface [10]. | High heat and mass transfer; small residence times [10]. | Polymerization, precipitation [10]. |

Thermodynamic Domain

The Thermodynamic Domain centers on optimizing energy conversion and transfer to achieve minimal energy loss and emissions [11]. The goal is to reduce process irreversibility, which is the unnecessary dissipation of energy, leading to more sustainable operations [11].

Exemplar Technology: Sonoreactors (Ultrasound)

Sonoreactors utilize ultrasound to enhance the rates of chemical reactions and can eliminate or reduce the need for catalysts [10]. The application of ultrasonic frequencies causes rapid vibration of reactant molecules, intensifying molecular interactions and increasing reaction rates without requiring an excess of reactants [10].

Functional Domain

The Functional Domain aims to combine multiple unit operations or functions into a single, smaller number of devices [9] [11]. This integration often overcomes thermodynamic equilibrium limitations and can eliminate the need for energy-intensive recycle streams [14].

Exemplar Technology: Reactive Distillation (RD)

Reactive distillation integrates chemical reaction and separation within one apparatus. The continuous removal of a product from the reaction zone shifts the chemical equilibrium forward, enabling higher conversions and selectivities while eliminating the need for a separate reactor and distillation column [10].
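
The equilibrium shift described above can be illustrated with a deliberately idealized model. Assume a reaction A + B ⇌ C + D with equimolar feed and ideal mixture behavior, and suppose a fraction f of product D is withdrawn from the reaction zone, so that the equilibrium condition becomes K = X · X(1 − f) / (1 − X)². Solving for conversion gives X = s / (1 + s) with s = √(K / (1 − f)). The value K = 4 and the removal fractions are hypothetical, and a real column requires stage-by-stage simulation.

```python
import math


def equilibrium_conversion(K, removed_fraction=0.0):
    """Conversion X for A + B <=> C + D, equimolar feed, idealized product removal.

    removed_fraction is the share of product D withdrawn from the reaction
    zone, so only (1 - removed_fraction) of it enters the equilibrium expression.
    """
    if K <= 0:
        raise ValueError("K must be positive")
    if not 0.0 <= removed_fraction < 1.0:
        raise ValueError("removed_fraction must be in [0, 1)")
    s = math.sqrt(K / (1.0 - removed_fraction))
    return s / (1.0 + s)


K = 4.0  # hypothetical equilibrium constant
for f in (0.0, 0.75, 0.99):
    x = equilibrium_conversion(K, f)
    print(f"removed fraction {f:4.2f} -> conversion {x:.3f}")
```

With no removal the model returns the familiar closed-reactor limit, and conversion approaches 1 as the removed fraction approaches 1, which is the effect reactive distillation exploits.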

Table 2: Performance of Functionally Intensified Processes

| Intensified System | Integrated Functions | Reported Enhancement | Application |

|---|---|---|---|

| Reactive Distillation | Chemical reaction + separation [10]. | Higher conversion/selectivity; up to 50% energy savings [10]. | Esterification, etherification [10]. |

| Multifunctional Reactors (Sorption-Enhanced) | Reaction + product separation (sorption) [10] [13]. | Shifts thermodynamic equilibrium; increases yield; simplifies process [10]. | CO₂ hydrogenation to methane [13]. |

| Heat Exchanger Reactors | Chemical reaction + heat exchange [10]. | Excellent thermal control for fast, exothermic reactions [10]. | Nitration, hydrogenation [10]. |

Temporal Domain

The Temporal Domain introduces an intentional unsteady-state (periodic) operation to improve the performance of a steady-state process [9] [11]. This is particularly relevant for dynamic operation in Power-to-X (PtX) technologies, where processes must adapt to fluctuating renewable energy inputs [14].

Exemplar Concept: Periodic Operation for CO₂ Methanation

In this concept, a periodically operated continuous reactor is used with a bi-functional catalytic material for the conversion of CO₂ to renewable natural gas [13]. The dynamic operation can enhance catalyst activity and process efficiency, offering a pathway to operate chemical synthesis processes efficiently with intermittent energy availability [13] [14].

Application Notes & Experimental Protocols

This section provides detailed methodologies for applying the core domains, with a focus on sustainable chemistry applications such as waste valorization and the production of renewable chemicals and fuels.

Protocol 1: Ultrasound-Assisted Extraction of Bioactive Compounds

This protocol details the use of hydrodynamic and ultrasound cavitation (Spatial and Thermodynamic Intensification) for green extraction of (poly)phenols from date palm seeds or citrus waste [12].

3.1.1 Research Reagent Solutions

Table 3: Essential Materials for Ultrasound-Assisted Extraction

| Item | Function/Description | Example/Note |

|---|---|---|

| Green Solvents | Extraction medium. | Water, ethanol, or water-ethanol mixtures [12]. |

| Ultrasonication Bath/Probe | Provides ultrasonic energy for cell disruption. | Frequency typically 20-40 kHz [12]. |

| Hydrodynamic Cavitation Reactor | Creates cavitation bubbles for intensive mixing & cell rupture. | Used as an alternative to ultrasonication [12]. |

3.1.2 Workflow Diagram

Diagram 2: Ultrasound Extraction Workflow

3.1.3 Step-by-Step Procedure

- Feedstock Preparation: Dry the date palm seeds (or other biomass) and grind them to a fine powder to increase the surface area for extraction [12].

- Extraction Setup: Mix the powdered biomass with a selected green solvent (e.g., water, ethanol) in a specified liquid-to-solid ratio. This ratio strongly affects extraction efficiency and must be optimized [12].

- Intensified Extraction: Subject the mixture to ultrasound-assisted extraction. Key factors to optimize include:

- Ultrasound amplitude/power

- Extraction temperature

- Extraction time

- Solvent composition (e.g., water vs. ethanol favors different (poly)phenols) [12].

- Separation: After extraction, separate the liquid extract from the solid residue via filtration or centrifugation.

- Analysis & Valorization: Analyze the extract for target compound yield (e.g., flavan-3-ols, quercetin). The spent solid residue can be further valorized, for example, as a biosorbent for water remediation or in agricultural applications, contributing to a circular economy [12].
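The optimization factors listed in the procedure above can be laid out as a simple full-factorial screening plan before any experiments are run. The sketch below is illustrative only: the factor names and every level in `FACTORS` are hypothetical placeholders, not values reported in [12], and a real study might prefer a fractional or response-surface design to reduce the number of runs.

```python
from itertools import product

# Hypothetical factor levels for illustration only; real ranges must come
# from the equipment limits and the literature for the chosen biomass.
FACTORS = {
    "amplitude_pct": [30, 60, 90],
    "temperature_c": [30, 50],
    "time_min": [10, 20, 30],
    "ethanol_vol_frac": [0.0, 0.5, 1.0],
}


def full_factorial(factors):
    """Return one dict per run, covering every combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


runs = full_factorial(FACTORS)
print(len(runs))  # 3 * 2 * 3 * 3 = 54 runs
print(runs[0])
```

Each entry in `runs` is one extraction condition to test; the measured yield for each run can then be attached to the same dictionary for later analysis.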

Protocol 2: Sorption-Enhanced Catalytic Process for CO₂ Methanation

This protocol describes a Functionally and Temporally intensified process that combines catalytic reaction and in-situ product separation for efficient CO₂ conversion [13].

3.2.1 Research Reagent Solutions

Table 4: Essential Materials for Sorption-Enhanced Methanation

| Item | Function/Description | Example/Note |

|---|---|---|

| Bi-functional Catalyst/Sorbent | Catalyzes the reaction and adsorbs the product. | Ni-based catalyst on zeolite 13X or 5A support [13]. |

| Fixed-Bed Reactor System | Vessel for the intensified process. | Capable of continuous or periodic operation [13]. |

| Gas Flow Control System | Manages feedstock delivery. | Controls flows of H₂ and CO₂ [13]. |

3.2.2 Workflow Diagram

Diagram 3: Sorption-Enhanced Process

3.2.3 Step-by-Step Procedure

- Reactor Packing: Load a fixed-bed reactor with the bi-functional catalytic material (e.g., a Ni-impregnated zeolite) [13].

- Reaction-Sorption Cycle: Feed a mixture of CO₂ and H₂ into the reactor under predetermined conditions (e.g., 300-400 °C).

- The catalyst facilitates the hydrogenation of CO₂ to methane (CH₄) and water (H₂O).

- Simultaneously, the sorbent material (zeolite) adsorbs the produced water in-situ.

- The removal of water shifts the reaction equilibrium toward the products according to Le Chatelier's principle, achieving higher single-pass conversions that can exceed the equilibrium conversion of the same reaction without water removal [13].

- Sorbent Regeneration (Temporal Operation): After a defined period, the sorbent becomes saturated with water. A periodic regeneration step is then initiated. This typically involves reducing the pressure (pressure-swing) or increasing the temperature (temperature-swing) to desorb the water and regenerate the sorbent [13].

- Product Recovery: The output stream is a high-purity methane product, with the process operating in a cyclic manner between reaction and regeneration steps.
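The equilibrium argument behind the reaction-sorption cycle can be written out explicitly. The expressions below are the standard stoichiometry of CO₂ methanation and the generic ideal-gas equilibrium relation; they are not taken from [13], and the partial pressures are assumed to be expressed relative to the standard pressure.

```latex
\[
\mathrm{CO_2} + 4\,\mathrm{H_2} \rightleftharpoons \mathrm{CH_4} + 2\,\mathrm{H_2O}
\]
\[
K_p(T) = \frac{p_{\mathrm{CH_4}}\; p_{\mathrm{H_2O}}^{2}}{p_{\mathrm{CO_2}}\; p_{\mathrm{H_2}}^{4}}
\]
```

Because K_p is fixed at a given temperature, lowering the water partial pressure by adsorption must be compensated by a higher methane partial pressure and lower reactant partial pressures, which corresponds to a higher CO₂ conversion.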

Evaluation Protocol: Calculating the Intensification Factor

A robust method for comparing process alternatives with limited information is to calculate the Intensification Factor (IF), which lumps quantitative and qualitative factors into a single, easy-to-use number [3].

3.3.1 Procedure:

- Define Comparison Factors: Identify a set of n relevant factors for comparison between the base case (conventional process) and the intensified alternative. These can include quantitative metrics (e.g., energy consumption, footprint, yield) and qualitative scores (e.g., safety, operational complexity) [3].

- Assign Weights and Scores: For each factor i, assign a weight w_i (reflecting its importance) and a score S_i for the intensified alternative relative to the base case. A simple scoring can be used: +1 if the alternative is superior, -1 if inferior, and 0 if equivalent [3].

- Calculate Intensification Factor: Compute the IF using the formula below. An IF > 1 indicates the new alternative is superior to the base case, while an IF < 1 indicates the opposite [3].

\[ IF = \frac{\sum_{i=1}^{n} w_i S_i}{\sum_{i=1}^{n} w_i} + 1 \]

Table 5: Example IF Calculation for a New Reactor Design

| Factor (i) | Weight (wᵢ) | Score (Sᵢ) | Weighted Score (wᵢ · Sᵢ) |

|---|---|---|---|

| Energy Consumption | 5 | +1 | 5 |

| Equipment Footprint | 4 | +1 | 4 |

| Product Yield | 5 | +1 | 5 |

| Safety | 5 | +1 | 5 |

| Operational Complexity | 3 | -1 | -3 |

| Sum (Σ) | 22 | | 16 |

\[ IF = \frac{16}{22} + 1 \approx 1.73 \]

Result: The alternative process (IF ≈ 1.73) is superior to the base case [3].
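The Table 5 calculation can be reproduced with a few lines of Python. This is a minimal sketch of the weighted-score formula given in the procedure; the function name and the dictionary layout are illustrative choices, not part of the method described in [3].

```python
def intensification_factor(factors):
    """Compute IF = sum(w_i * S_i) / sum(w_i) + 1.

    `factors` maps a factor name to a (weight, score) pair, where the score
    is +1 (alternative superior), 0 (equivalent), or -1 (inferior).
    """
    total_weight = sum(weight for weight, _ in factors.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(weight * score for weight, score in factors.values())
    return weighted_sum / total_weight + 1


# Weights and scores copied from Table 5.
table5 = {
    "Energy Consumption": (5, +1),
    "Equipment Footprint": (4, +1),
    "Product Yield": (5, +1),
    "Safety": (5, +1),
    "Operational Complexity": (3, -1),
}

if_value = intensification_factor(table5)
print(round(if_value, 2))  # 1.73
```

With this scoring scheme the IF is bounded between 0 (inferior on every factor) and 2 (superior on every factor), so the value mainly ranks alternatives rather than measuring the size of the improvement.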

Process Intensification (PI) represents a transformative approach in chemical engineering, aiming to enhance efficiency, sustainability, and compactness of industrial processes through integration of unit operations, optimized resource utilization, and minimized equipment size [1]. This paradigm aligns fundamentally with Green Chemistry principles by systematically reducing waste generation, energy consumption, and environmental footprint while improving process safety and economics [15]. The pharmaceutical industry particularly benefits from PI implementation, where traditional batch processes typically generate 25 to 100 kg of waste per kilogram of final product, primarily from solvents and inefficient purification steps [15]. Emerging PI technologies including continuous flow systems, mechanochemistry, and advanced catalysis now enable researchers to achieve dramatic improvements in mass transfer, reaction efficiency, and energy utilization while supporting broader sustainability goals across chemical manufacturing sectors.

Quantitative Performance of Intensified Processes

Table 1 summarizes documented performance metrics for established and emerging process intensification technologies, demonstrating their significant advantages over conventional approaches.

Table 1: Comparative Performance Metrics of Process Intensification Technologies

| Technology | Key Performance Metrics | Conventional Process Baseline | Environmental & Efficiency Benefits |

|---|---|---|---|

| Continuous Flow Chemistry | Energy reduction: 40-90% [15] | Batch reactor energy consumption | Smaller reactors, increased safety, real-time automation |

| Phase Transfer Catalysis | Reaction time: hours → 3 minutes; NaOH usage: excess → stoichiometric [16] | Multiple hours reaction time, large excess alkali | Minimal side products (0.4-1 mol%), near-stoichiometric reagent use |

| Mechanochemistry | Solvent elimination; high yields in solvent-free systems [17] | Traditional solution-phase synthesis | Reduced solvent waste, enhanced safety, novel reaction pathways |

| Membrane-Integrated Reactors | Conversion increase under milder conditions via continuous separation [18] | Equilibrium-limited batch reactions | Reduced energy consumption, continuous operation |

| Biocatalysis | Single-step vs. multi-step synthesis; high selectivity [19] [20] | Traditional multi-step chemical synthesis | Reduced step count, milder conditions, biodegradable catalysts |

The data demonstrates that PI strategies can deliver substantial improvements in resource efficiency while simultaneously addressing Green Chemistry principles of waste prevention and inherently safer design.

Application Notes: Strategic Implementation Frameworks

PI Implementation Framework for Green Chemistry Goals

Successful alignment of PI with Green Chemistry requires systematic consideration of technological options across multiple implementation domains. The following strategic framework outlines key decision factors:

Process Architecture Selection

- Continuous vs. Batch Processing: Flow chemistry systems offer superior heat and mass transfer characteristics, significantly enhancing safety profiles for exothermic or hazardous reactions while reducing reactor volume requirements by orders of magnitude [15]. Pharmaceutical applications demonstrate 40-90% energy reduction compared to batch processes [15].

- Integration Level: Consolidated unit operations (e.g., reactive distillation, membrane reactors) minimize intermediate handling and purification requirements, directly reducing solvent consumption and waste generation [1].

Catalysis Strategy

- Heterogeneous Catalysis: Solid catalysts facilitate product separation and catalyst reuse, eliminating waste streams associated with homogeneous catalyst systems [18].

- Biocatalysis & Photocatalysis: Enzyme-based systems operate under mild conditions with exceptional selectivity, while photocatalysis enables novel reaction pathways with reduced energy requirements [20].

Solvent System Design

- Solvent-Free Operations: Mechanochemistry via ball milling or reactive extrusion eliminates solvent usage entirely, representing the ultimate in waste prevention [17].

- Aqueous & Benign Solvents: Water-based reaction systems and deep eutectic solvents (DES) provide low-toxicity alternatives to conventional volatile organic compounds [17].

Waste Prevention Protocol: Mechanochemical Synthesis

Mechanochemistry utilizes mechanical energy to drive chemical reactions without solvents, directly supporting Green Chemistry goals of waste prevention and safer synthesis [17].

Diagram: Mechanochemistry Experimental Workflow

Materials & Equipment

- High-energy ball mill (planetary or mixer mill)

- Milling jars (stainless steel, zirconia, or tungsten carbide)

- Milling balls (various sizes and materials)

- Reactant powders

- Inert atmosphere glove box (for air-sensitive reactions)

Experimental Procedure

- Reactant Preparation: Weigh and pre-grind solid reactants to approximately 100-200 µm particle size using a mortar and pestle.

- Loading: Combine reactants in appropriate stoichiometric ratios with milling balls in milling jar. Ball-to-powder mass ratio typically ranges from 10:1 to 50:1 depending on energy requirements.

- Processing: Secure milling jar in ball mill and process for predetermined time (typically 10-120 minutes) at optimal frequency (15-30 Hz). Control temperature if necessary using cooling intervals or cryogenic conditions.

- Monitoring: Periodically stop milling to collect small samples for analysis by FTIR, XRD, or TLC to track reaction progress.

- Product Recovery: Remove product from milling jar, separate from milling balls using sieve, and purify if necessary using minimal solvent washing or sublimation.
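The ball-to-powder mass ratio from the loading step translates directly into a ball charge. The sketch below is a simple arithmetic helper under stated assumptions: the powder mass, the chosen ratio, and the single-ball mass in the example are hypothetical, and the helper does not check jar fill level or other equipment limits.

```python
import math


def ball_charge(powder_mass_g, ball_to_powder_ratio, single_ball_mass_g):
    """Return (total ball mass in g, number of balls) for a target mass ratio."""
    if powder_mass_g <= 0 or ball_to_powder_ratio <= 0 or single_ball_mass_g <= 0:
        raise ValueError("all inputs must be positive")
    total_ball_mass_g = powder_mass_g * ball_to_powder_ratio
    # Round up so the actual ratio is never below the target.
    n_balls = math.ceil(total_ball_mass_g / single_ball_mass_g)
    return total_ball_mass_g, n_balls


# Hypothetical example: 2 g of reactant powder, a 20:1 ratio, 4 g balls.
mass_g, count = ball_charge(2.0, 20, 4.0)
print(mass_g, count)  # 40.0 10
```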

Key Applications

- Pharmaceutical synthesis: Solvent-free formation of APIs and intermediates [17]

- Metal-organic frameworks (MOFs) and advanced materials

- Coordination compounds and organometallic complexes

Green Chemistry Benefits

- Complete elimination of solvent waste

- Reduced reaction times compared to solution-based methods

- Novel reaction pathways not accessible in solution

- Enhanced safety through eliminated solvent handling

Process Safety & Efficiency Protocol: Continuous Flow with Phase Transfer Catalysis

This protocol demonstrates the intensification of a heterogeneous dehydrochlorination reaction using continuous flow and phase transfer catalysis, based on recent research achieving dramatic improvements in efficiency and waste reduction [16].

Diagram: Continuous Flow PTC System

Materials & Equipment

- Syringe or piston pumps for precise fluid delivery

- PTFE tubing reactor (1-5 mL volume)

- Static mixer element

- Temperature-controlled heating bath or jacket

- Liquid-liquid membrane separator or gravity separator

- Aqueous sodium hydroxide solution (5-15% w/w)

- Organic phase: β-chlorohydrin substrate in toluene or dichloromethane

- Phase transfer catalyst: Tetrabutylammonium chloride (TBACl) or similar

Experimental Procedure

- System Preparation: Dissolve β-chlorohydrin substrate (e.g., 3-chloro-2-hydroxypropyl neodecanoate) in organic solvent (0.5-1.0 M concentration). Add phase transfer catalyst (1-5 mol% relative to substrate).

- Catalyst Optimization: Screen PTC structures (quaternary ammonium salts, crown ethers) to identify optimal catalyst for specific reaction system.

- Continuous Operation: Load organic and aqueous phases into separate feed reservoirs. Initiate flow through pre-heated reactor system using residence time of approximately 3 minutes at 50-80°C.

- Phase Separation: Direct reactor effluent to liquid-liquid separator for continuous phase separation.

- Product Isolation: Collect organic phase and recover product through standard techniques. Aqueous phase may be recycled to minimize waste.
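Pump set points for the continuous operation step follow from the residence time relation τ = V / Q_total and from the NaOH stoichiometry. The sketch below is a rough estimate, assuming additive volumes and a reactor fully filled by the two-phase mixture; the reactor volume and both concentrations in the example are hypothetical inputs, and the NaOH molarity of the actual aqueous feed must be determined separately.

```python
def flow_rates(reactor_volume_ml, residence_time_min,
               c_substrate_molar, c_naoh_molar, naoh_equiv=1.0):
    """Return (organic, aqueous) flow rates in mL/min.

    The total flow follows from tau = V / Q_total. The split between phases
    delivers `naoh_equiv` moles of NaOH per mole of substrate.
    """
    q_total = reactor_volume_ml / residence_time_min
    # Volumetric ratio Q_aq / Q_org that gives the requested NaOH equivalents.
    ratio = naoh_equiv * c_substrate_molar / c_naoh_molar
    q_org = q_total / (1.0 + ratio)
    q_aq = q_total - q_org
    return q_org, q_aq


# Hypothetical example: 3 mL reactor, 3 min residence time,
# 1.0 M substrate, 2.0 M NaOH, 1.0 equivalent of base.
q_org, q_aq = flow_rates(3.0, 3.0, 1.0, 2.0)
print(f"organic: {q_org:.3f} mL/min, aqueous: {q_aq:.3f} mL/min")
```

In this example the organic stream runs at twice the aqueous flow, so both streams carry the same molar flow of substrate and base.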

Performance Metrics

- Reaction time reduction from several hours to 3 minutes [16]

- Sodium hydroxide usage reduced from large excess to near-stoichiometric quantities [16]

- Selectivity to desired epoxide >98.5% with minimal side products [16]

Green Chemistry Benefits

- Dramatic reduction in reaction time and energy consumption

- Near-stoichiometric reagent use minimizing waste

- Continuous operation enabling smaller equipment footprint

- Enhanced safety through reduced inventory of hazardous materials

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents for Green Process Intensification

| Reagent/Catalyst | Function | Green Chemistry Advantage |

|---|---|---|

| Tetrabutylammonium Salts | Phase transfer catalyst for heterogeneous reactions | Enables near-stoichiometric reagent use, reduces reaction time from hours to minutes [16] |

| Deep Eutectic Solvents (DES) | Biodegradable solvents for extraction and reactions | Low toxicity, renewable feedstocks, customizable properties for specific applications [17] |

| Immobilized Lipases | Biocatalysts for esterification and transesterification | High selectivity under mild conditions, biodegradable, reduces energy requirements [18] |

| Nickel-Based Catalysts | Replacement for palladium in cross-coupling | Abundant, inexpensive metal with >75% reduction in CO₂ emissions and waste generation [20] |

| Tetrataenite (FeNi) | Rare-earth-free permanent magnets | Earth-abundant elements, avoids geopolitical and environmental costs of rare earth mining [17] |

| Silver Nanoparticles | Catalysis and antimicrobial applications | Synthesized in water without toxic solvents, enables green nanoparticle production [17] |

Advanced Integration & Digitalization

AI-Enhanced Reaction Optimization

Machine learning and artificial intelligence transform PI implementation by enabling predictive optimization of reaction parameters and sustainability metrics [17] [1].

Implementation Framework

- Data Collection: Utilize high-throughput experimentation to generate comprehensive reaction datasets

- Model Training: Develop machine learning algorithms to predict reaction outcomes based on input parameters

- Multi-Objective Optimization: Simultaneously optimize for yield, selectivity, and green metrics (PMI, E-factor)

- Autonomous Optimization: Implement closed-loop systems for continuous process improvement
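The multi-objective step can be illustrated with a Pareto filter over candidate reaction conditions. This is a minimal sketch with two objectives (maximize yield, minimize PMI); the candidate data are invented for illustration, and a real workflow would usually use model predictions and a dedicated optimization library instead of this brute-force comparison.

```python
def dominates(a, b):
    """True if `a` is no worse than `b` on both objectives and better on one."""
    no_worse = a["yield_pct"] >= b["yield_pct"] and a["pmi"] <= b["pmi"]
    better = a["yield_pct"] > b["yield_pct"] or a["pmi"] < b["pmi"]
    return no_worse and better


def pareto_front(candidates):
    """Keep only candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Hypothetical screening results (yield in %, PMI in kg input per kg product).
candidates = [
    {"id": "A", "yield_pct": 92, "pmi": 40},
    {"id": "B", "yield_pct": 88, "pmi": 25},
    {"id": "C", "yield_pct": 85, "pmi": 45},
    {"id": "D", "yield_pct": 95, "pmi": 60},
]

front = pareto_front(candidates)
print([c["id"] for c in front])  # ['A', 'B', 'D']
```

Condition C is dropped because A gives both a higher yield and a lower PMI; the remaining conditions represent different trade-offs between yield and mass efficiency.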

Application Examples

- Prediction of borylation site-selectivity in complex molecules using hybrid machine learning approaches [20]

- AI-guided discovery of novel mechanochemical reactions and catalysts [17]

- Process mass intensity (PMI) prediction for synthetic route selection [20]

Digital Twin Technology for PI Systems

Digital twins create virtual replicas of intensified processes, enabling real-time simulation, monitoring, and optimization [1].

Implementation Benefits

- Predictive insights for proactive process adjustments

- Virtual testing of control strategies without disrupting operations

- Enhanced decision-making through dynamic simulation

- Reduced downtime through predictive maintenance

Sustainability Integration

- Real-time optimization of energy consumption

- Dynamic environmental impact assessment

- Resource utilization tracking and minimization

The strategic alignment of Process Intensification with Green Chemistry principles presents a powerful pathway toward sustainable chemical manufacturing. Through implementation of the protocols and frameworks outlined in this document, researchers and drug development professionals can achieve substantial improvements in waste prevention, safety, and efficiency. The integration of advanced technologies including continuous processing, alternative energy inputs, and digitalization enables unprecedented levels of process efficiency while minimizing environmental impact. As PI technologies continue to evolve and mature, their systematic implementation will be essential for achieving sustainability targets across the chemical and pharmaceutical industries.

Process Intensification (PI) represents a transformative approach in chemical engineering, defined as "a set of radically innovative process-design principles which can bring significant benefits in terms of efficiency, cost, product quality, safety and health over conventional process designs based on unit operations" [5]. Within the context of sustainable chemistry research, PI emerges as a critical strategy for achieving net-zero emissions and advancing circular economy targets. By fundamentally reimagining process design, PI enables dramatic improvements in resource efficiency, energy consumption, and waste reduction—addressing the core challenges of unsustainable industrial practices [5] [21].

The theoretical foundation of PI rests on four guiding principles established by Van Gerven and Stankiewicz: maximizing the effectiveness of molecular events; ensuring all molecules have a uniform process experience; optimizing driving forces and specific surface areas; and maximizing synergistic effects from partial processes [5] [21]. These principles manifest through practical applications across four domains: spatial (structure), thermodynamic (energy), functional (synergy), and temporal (time) intensification [5]. For researchers and drug development professionals, these principles provide a framework for developing more sustainable chemical processes that align with global sustainability imperatives.

PI Principles and Their Alignment with Sustainability Goals

Foundational Principles

The four foundational principles of PI provide a systematic approach to sustainable process design [5] [21]:

Maximize molecular effectiveness: This principle focuses on altering reaction rates by precisely managing the frequency, energy, and timing of molecular collisions. In practice, this enables researchers to achieve kinetic regimes with higher conversion and selectivity, leading to reduced raw material consumption and waste generation.

Uniform molecular experience: By providing all molecules with similar process conditions through technologies like plug flow reactors with uniform heating, this principle minimizes side reactions and byproduct formation, directly supporting green chemistry objectives.

Optimize driving forces: Through intentional design that maximizes specific surface areas and driving forces for heat and mass transfer (such as microchannel architectures), this principle significantly enhances process efficiency and reduces energy requirements.

Maximize synergistic effects: The strategic integration of multiple unit operations into single apparatuses (e.g., reactive distillation) creates synergistic effects that simplify processes, reduce equipment needs, and minimize resource consumption.

Implementation Framework

These principles translate into practical implementation across four key domains, as illustrated in Figure 1, which provides a conceptual overview of how PI principles and application domains interrelate to support sustainability objectives.

Figure 1. PI Framework for Sustainability - Conceptual diagram showing how PI principles and application domains interrelate to support sustainability objectives.

Quantitative Sustainability Benefits of PI Technologies

The implementation of PI strategies generates measurable improvements across multiple sustainability metrics, supporting both net-zero and circular economy targets. Table 1 summarizes documented benefits across key industrial applications.

Table 1. Quantitative Sustainability Benefits of PI Applications

| PI Technology | Application | Sustainability Benefit | Quantitative Impact | Reference |

|---|---|---|---|---|

| Reactive Distillation | Methyl acetate production | Process simplification & efficiency | Reduction from 11 process steps to 1 column | [5] |

| Hybrid Heat Integration | Dimethyl carbonate production | Energy savings | 38.33% reduction in energy consumption | [5] |

| Continuous Processing | General chemical production | Waste reduction | Decreased byproduct generation, lower energy/water consumption | [5] |

| Microreactors | Kolbe-Schmitt synthesis | Process safety & efficiency | Enabled operation under explosive conditions | [21] |

| Ultrasound | Biodiesel production | Enhanced mass transfer | Improved efficiency in extraction processes | [21] |

These quantitative benefits demonstrate the significant potential of PI to advance sustainability goals. The documented 38.33% energy savings in dimethyl carbonate production exemplifies how PI contributes directly to net-zero targets through reduced energy consumption [5]. Similarly, the transformation of batch processes to continuous operation reduces waste generation and resource consumption, supporting circular economy objectives by minimizing process inputs and outputs [5].

PI Experimental Protocols and Methodologies

Protocol 1: Continuous Flow Synthesis in Microreactors

Objective: Implement continuous flow chemistry to enhance reaction efficiency, safety, and sustainability compared to batch processing.

Materials:

- Chemtrix Flow Reactors (laboratory to industrial scale)

- Precision feed pumps (minimum 2 channels)

- Temperature-controlled reactor modules

- In-line analytical monitoring (e.g., FTIR, UV-Vis)

- Product collection system with back-pressure regulation

Methodology:

- Reactor Setup: Assemble microreactor system with appropriate reactor volume (μL to mL scale based on production requirements). Ensure all connections are secure and pressure-rated for intended operating conditions.

- Feed Preparation: Prepare reactant solutions at specified concentrations using sustainable solvents where possible. Filter solutions (0.45 μm) to prevent channel blockage.

- System Priming: Prime all fluidic pathways with solvent to remove air bubbles. Verify stable flow at target residence time.

- Process Optimization: Conduct residence time studies by varying flow rates while maintaining constant reactant ratio. Identify optimal temperature and pressure parameters through sequential experimentation.

- Continuous Operation: Initiate continuous operation at optimized conditions. Monitor pressure drop across reactor to detect potential clogging.

- Product Collection: Collect output stream, utilizing in-line separation where possible. Implement real-time analytical monitoring to ensure consistent product quality.
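For the pressure-drop monitoring mentioned under continuous operation, a baseline can be estimated and compared with the measured value. The sketch below uses the Hagen-Poiseuille relation, which assumes laminar flow of a Newtonian fluid in a straight circular channel; real microreactor channels are often non-circular, so the result is only a rough reference. The fluid properties, channel dimensions, and the alarm factor are hypothetical.

```python
import math


def laminar_pressure_drop_pa(viscosity_pa_s, length_m, diameter_m, flow_m3_s):
    """Hagen-Poiseuille pressure drop for laminar flow in a circular tube."""
    return (128.0 * viscosity_pa_s * length_m * flow_m3_s
            / (math.pi * diameter_m ** 4))


def ml_min_to_m3_s(flow_ml_min):
    """Convert a flow rate from mL/min to m^3/s."""
    return flow_ml_min * 1e-6 / 60.0


def clogging_suspected(measured_pa, expected_pa, alarm_factor=1.5):
    """Flag a measured pressure drop well above the clean-channel estimate.

    The alarm factor is an arbitrary illustrative threshold.
    """
    return measured_pa > alarm_factor * expected_pa


# Hypothetical case: water-like fluid (1 mPa*s), 1 m long channel,
# 500 micrometer diameter, 1 mL/min.
baseline_pa = laminar_pressure_drop_pa(1.0e-3, 1.0, 500e-6, ml_min_to_m3_s(1.0))
print(f"expected pressure drop: {baseline_pa / 1e5:.3f} bar")
print(clogging_suspected(2.0 * baseline_pa, baseline_pa))
```

Because the pressure drop scales with the inverse fourth power of the diameter, even a modest narrowing of the channel by deposits produces a large rise in the measured value.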

Sustainability Assessment:

- Quantify E-factor reduction compared to batch process

- Measure energy consumption per unit product

- Calculate solvent reduction through increased concentration or alternative solvents
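The E-factor comparison in the assessment above reduces to a mass balance. The sketch below uses the common simplification that all input mass not recovered as product counts as waste, in which case PMI equals E-factor plus one; whether process water is included varies between studies and should be stated. The batch and flow masses in the example are hypothetical.

```python
def e_factor(total_input_kg, product_kg):
    """E-factor: kg of waste per kg of product (waste = inputs - product)."""
    return (total_input_kg - product_kg) / product_kg


def pmi(total_input_kg, product_kg):
    """Process mass intensity: kg of total input per kg of product."""
    return total_input_kg / product_kg


def percent_reduction(baseline, improved):
    """Relative reduction of a metric versus the baseline, in percent."""
    return (baseline - improved) / baseline * 100.0


# Hypothetical masses per 1 kg of product.
batch_e = e_factor(51.0, 1.0)   # 50 kg waste per kg product
flow_e = e_factor(21.0, 1.0)    # 20 kg waste per kg product
print(batch_e, flow_e, percent_reduction(batch_e, flow_e))  # 50.0 20.0 60.0
```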

Protocol 2: Reactive Distillation for Process Integration

Objective: Combine reaction and separation in a single unit operation to intensify chemical processes, reducing energy consumption and capital costs.

Materials:

- Pilot-scale distillation column with reactive zones

- Catalytic packing materials

- Temperature and pressure monitoring systems

- Reflux ratio control system

- Feed preheating system

Methodology:

- Column Packing: Install structured catalytic packing in reactive section of column. Use non-reactive packing in stripping and rectifying sections.

- System Validation: Conduct hydrodynamic testing to establish operational flow parameters. Verify temperature and composition profiles with non-reactive system.

- Catalyst Activation: Activate catalytic packing according to manufacturer specifications (typically thermal treatment under controlled atmosphere).

- Process Operation: Introduce reactant feeds through predetermined entry points. Establish steady-state operation with controlled reflux ratio.

- Parameter Optimization: Systematically vary feed ratio, reflux ratio, and boil-up rate to maximize conversion and selectivity.

- Continuous Monitoring: Track key performance indicators including conversion, selectivity, energy consumption, and product purity.

Sustainability Assessment:

- Compare energy consumption against conventional reactor-separator sequence

- Quantify reduction in equipment footprint and capital costs

- Calculate carbon footprint reduction per unit product

PI Implementation Pathways for Specific Sustainability Goals

Process Intensification contributes to specific United Nations Sustainable Development Goals (SDGs) through targeted technological applications, as visualized in Figure 2, which illustrates the interconnected pathways through which PI technologies address critical sustainability challenges.

Figure 2. PI Sustainability Pathways - Interconnected pathways through which PI technologies address UN Sustainable Development Goals.

Water Conservation (SDG 6)

The chemical and refining industry accounts for approximately 50% of all water use in European manufacturing, with global water demand in manufacturing projected to increase by 400% over the next 25 years [5]. PI addresses this challenge through:

- Closed-loop water systems: Implementing membrane filtration and advanced water recycling technologies

- Process-integrated recovery: Designing processes that minimize fresh water intake through clever integration of water streams

- Contaminant reduction: Reducing pollutant loading in wastewater through improved process selectivity

Energy Efficiency (SDG 7)

PI contributes to affordable and clean energy through significant reductions in energy consumption and facilitation of renewable energy integration:

- Hybrid heat integration: Achieving documented energy savings of 38.33% in dimethyl carbonate production compared to conventional separation designs [5]

- Process simplification: Reducing energy requirements through decreased equipment counts and operational complexity

- Renewable integration: Enabling the use of renewable energy sources through flexible, intensified process designs

Climate Action (SDG 13)

PI technologies directly support climate action goals by transforming energy-intensive processes and reducing greenhouse gas emissions:

- Electrochemical reactors: Converting processes from fossil-fuel based to electrically driven, enabling renewable power integration

- Process miniaturization: Reducing the energy and material intensity of chemical production

- Emission reduction: Decreasing CO₂ emissions through consolidated multi-step processes, as demonstrated by CoorsTek's ceramic membrane technology that eliminates CO₂ emissions in gas-to-liquids conversion [5]

Research Reagent Solutions for PI Experimentation

Successful implementation of PI strategies requires specialized materials and reagents tailored to intensified process conditions. Table 2 outlines key research reagent solutions for PI experimentation in sustainable chemistry.

Table 2. Essential Research Reagents and Materials for PI Experimentation

| Reagent/Material | Function in PI Applications | Sustainability Benefit | Implementation Example |

|---|---|---|---|

| Structured Catalytic Packings | Enhanced mass transfer and reaction integration | Reduced energy consumption through process integration | Reactive distillation columns for esterification processes [21] |

| Ionic Liquids | Alternative solvent and catalyst media | Replacement of volatile organic compounds, recyclability | Multiphasic reaction systems with facile product separation [21] |

| Supercritical CO₂ | Alternative reaction medium | Non-toxic, non-flammable substitute for organic solvents | Extraction and reaction medium in continuous flow systems [21] |

| Advanced Ceramic Membranes | High-temperature separation and reaction | Thermal stability enabling process intensification | CoorsTek's direct gas-to-liquids conversion [5] |

| Microreactor Coatings | Surface modification for specialized applications | Reduced fouling and maintenance requirements | Chemtrix flow reactors for pharmaceutical intermediates [5] |

These specialized materials enable researchers to overcome traditional process limitations and achieve the enhanced transport properties necessary for successful process intensification. The selection of appropriate reagents and materials is critical for realizing the sustainability benefits of PI approaches.

Industrial Applications and Case Studies

Chemical Industry Leaders in PI Implementation

Several companies have emerged as pioneers in implementing PI technologies at industrial scale, demonstrating the practical viability and sustainability benefits of these approaches:

- Synthio Chemicals: Utilizes proprietary continuous-flow production platforms for rapid, safe production of challenging chemicals at scale, representing "chemistry for the new millennium" [5].

- NiTech Solutions: Implements continuous baffled reactor and crystallization technology to deliver significant savings and limit harmful emissions across laboratory, pilot, and commercial scales [5].

- Eastman Chemical Company: Demonstrated pioneering PI through methyl acetate production via reactive distillation, consolidating 11 conventional process steps into a single column with dramatically improved reliability and scalability [5].

These industrial implementations provide valuable case studies for researchers developing new PI applications, demonstrating both the technical feasibility and sustainability benefits of intensified processes.

Solids Handling Applications

While early PI applications focused primarily on fluid systems, significant advances have been made in intensifying solids handling operations, which present unique challenges including fouling and blockages in smaller equipment [22]. Key applications include:

- Reactive crystallization and precipitation: Leveraging enhanced mixing capabilities in intensified technologies to produce uniformly distributed nanoparticles [22].

- Continuous granulation and drying: Transforming traditional batch operations into continuous processes with reduced processing time and improved energy efficiency [22].

- Integrated separation systems: Combining multiple solid processing operations into single units with reduced energy and material consumption.

These applications demonstrate the expanding scope of PI across diverse process types, further enhancing its potential contribution to sustainability objectives.

Process Intensification represents a paradigm shift in chemical process design that directly addresses the sustainability imperative facing modern industry. Through the implementation of fundamental PI principles—maximizing molecular effectiveness, ensuring uniform process experiences, optimizing driving forces, and creating synergistic effects—researchers and industrial practitioners can dramatically advance progress toward net-zero emissions and circular economy targets.

The experimental protocols, quantitative benefits, and implementation frameworks presented provide researchers and drug development professionals with practical pathways for applying PI strategies in sustainable chemistry research. As global sustainability challenges intensify, PI offers a proven approach for reconciling industrial production with environmental stewardship through radically improved efficiency, waste reduction, and resource conservation.

Future research directions should focus on expanding PI applications to broader process domains, developing next-generation materials and equipment specifically designed for intensified operations, and creating integrated assessment methodologies that fully capture the sustainability benefits of PI approaches across entire product life cycles.

Implementing Intensified Processes: From Reactor Design to Biologics Production

Process Intensification (PI) represents a paradigm shift in chemical engineering, aimed at developing cleaner, safer, and more energy-efficient technologies. By designing innovative equipment and methods that dramatically shrink the plant footprint and boost efficiency, PI is central to advancing sustainable chemistry [6]. This article provides detailed application notes and experimental protocols for three core PI technologies—Reactive Distillation, Membrane Reactors, and Microreactors—framed within sustainable process development for researchers and drug development professionals.

Reactive Distillation

Application Notes

Reactive Distillation (RD) is a functional intensification technique that synergistically combines chemical reaction and separation in a single unit operation. This integration offers significant advantages for equilibrium-limited reactions, such as esterification, by continuously removing products to drive conversion beyond equilibrium constraints, thereby improving efficiency and reducing the number of process units required [6] [23]. A key industrial example is the synthesis of high-purity methyl acetate, which successfully replaced a complex conventional process involving multiple reactors and separation columns with a single RD column, significantly cutting capital costs and energy consumption [23].

The operational principle hinges on the interaction between reaction kinetics and vapor-liquid equilibrium. The concurrent reaction and separation of products lead to higher yields, utilization of reaction heat for separation (in exothermic reactions), and suppression of side reactions, resulting in superior selectivity [23]. Beyond methyl acetate, RD is commercially applied for etherification (e.g., MTBE, ETBE), hydrolysis, transesterification, and alkylation (e.g., cumene production) [23].
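To make the "beyond equilibrium" argument concrete, the following minimal sketch estimates the conversion of a generic reaction A + B ⇌ C + D with an equimolar feed when a fraction of product C is withdrawn from the reaction zone. It assumes an ideal mixture (activities equal to mole fractions) and a single well-mixed zone, which is far simpler than a real RD column. The equilibrium constant `K_ASSUMED` and the function name are illustrative assumptions, not values taken from the cited sources.

```python
import math


def equilibrium_conversion(K: float, removed_fraction: float = 0.0) -> float:
    """Conversion X of A + B <=> C + D for an equimolar feed (1 mol each).

    removed_fraction is the share of product C withdrawn from the reaction
    zone (0.0 corresponds to a closed reactor with no separation).
    Mole balance: n_A = n_B = 1 - X, n_D = X, n_C = X * (1 - removed_fraction)
    K = n_C * n_D / (n_A * n_B)  =>  X / (1 - X) = sqrt(K / (1 - removed_fraction))
    """
    if K <= 0.0:
        raise ValueError("K must be positive")
    if not 0.0 <= removed_fraction < 1.0:
        raise ValueError("removed_fraction must be in [0, 1)")
    s = math.sqrt(K / (1.0 - removed_fraction))
    return s / (1.0 + s)


# Hypothetical equilibrium constant, chosen only for illustration.
K_ASSUMED = 5.2

for r in (0.0, 0.5, 0.9, 0.99):
    x = equilibrium_conversion(K_ASSUMED, r)
    print(f"product removed: {r:4.0%}  ->  conversion: {x:.3f}")
```

Under these assumptions the conversion rises monotonically with the removed fraction, which is the qualitative effect that reactive distillation exploits.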

Table 1: Performance Data for Methanol Esterification via Reactive Distillation [24]

| Parameter | Traditional Start-up | Optimal Start-up | Change |
|---|---|---|---|
| Start-up Time | 12.5 hours | 4.5 hours | -64% |
| Global Warming Potential (GWP) | Baseline | -68% | -68% |
| Fossil Depletion | Baseline | -56% | -56% |
| Human Toxicity | Baseline | -69% | -69% |
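As a quick check of the start-up time row, the relative change can be recomputed from the two reported durations. This is only an arithmetic sketch; the helper name `percent_change` is an illustrative choice.

```python
def percent_change(baseline: float, new: float) -> float:
    """Relative change of `new` with respect to `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0


traditional_h = 12.5  # start-up time reported for the traditional policy
optimal_h = 4.5       # start-up time reported for the optimal policy

change = percent_change(traditional_h, optimal_h)
print(f"Start-up time change: {change:.0f}%")
print(f"Time saved per start-up: {traditional_h - optimal_h:.1f} h")
```

The result matches the -64% listed in the table and corresponds to 8 hours saved per start-up.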

Experimental Protocol: Optimal Start-up from Cold and Empty State

Application: Minimizing environmental impact and energy consumption during the start-up of a pilot-scale reactive distillation column for methanol esterification.

Principle: An optimized two-step policy manages the initial "discontinuous phase" (characterized by phase transitions) and the subsequent "continuous phase" to drastically reduce the time and resources required to reach steady-state operation [24].

Materials & Equipment:

- Pilot-scale RD Column: Equipped with a reboiler, reactive zone, and condenser.

- Reactants: Methanol and Acetic Acid.

- Catalyst: A strong acidic ion exchange resin (e.g., Amberlyst-15).

- Data Acquisition System: For monitoring temperature and composition profiles.

- Process Simulation Software: Such as DWSIM or Aspen Plus, for model validation and optimization [24].

Procedure:

- Initial Charging and Heating: Charge an equimolar mixture of methanol and acetic acid directly into the empty reboiler. Begin applying heat (Q̇_R) to the reboiler. The energy balance during this phase is described by Equation 1 [24]:

$$H_B \, C_p \, \frac{dT_B}{dt} + M_{\text{glass}} \, C_{p,\text{glass}} \, \frac{dT_B}{dt} = \dot{Q}_R \qquad (1)$$

where $H_B$ is the reboiler holdup, $T_B$ is the bottom temperature, and $M_{\text{glass}}$ and $C_{p,\text{glass}}$ account for the heat capacity of the reboiler wall.

- Vapor-Liquid Establishment: Continue heating until the mixture reaches its bubble point. Vapor ascends the column, condenses on the internal surfaces, and returns to the reboiler, gradually establishing vapor-liquid equilibrium (VLE) throughout the system.

- Continuous Operation Initiation: Once the column is sufficiently prepared (as determined by the optimized schedule), switch to continuous mode. Introduce the methanol and acetic acid feeds at their specified locations (e.g., bottom and top of the reactive zone, respectively) and simultaneously initiate product withdrawal from the top and bottom of the column.
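The energy balance of the initial heating step (Equation 1) can be integrated directly if the heat duty and heat capacities are treated as constant. The sketch below estimates the time needed to bring the reboiler charge to its bubble point under that assumption, neglecting heat losses and heat of reaction. All numerical values (holdup, heat capacities, wall mass, heat duty, temperatures) are hypothetical placeholders and are not taken from [24].

```python
def heating_time_s(
    holdup_kg: float,
    cp_liquid: float,
    wall_mass_kg: float,
    cp_wall: float,
    heat_duty_w: float,
    t_start_c: float,
    t_bubble_c: float,
) -> float:
    """Time to heat the reboiler from t_start_c to t_bubble_c.

    Integrates (H_B*C_p + M_glass*C_p,glass) * dT_B/dt = Q_R with constant
    coefficients, so the temperature rises linearly with time.
    Heat capacities are in J/(kg K), heat duty in W.
    """
    if heat_duty_w <= 0.0:
        raise ValueError("heat duty must be positive")
    thermal_mass = holdup_kg * cp_liquid + wall_mass_kg * cp_wall  # J/K
    return thermal_mass * (t_bubble_c - t_start_c) / heat_duty_w


# Hypothetical pilot-scale values, for illustration only.
t_heat = heating_time_s(
    holdup_kg=2.0,       # H_B, liquid charged to the reboiler
    cp_liquid=2300.0,    # C_p, rough value for the liquid mixture
    wall_mass_kg=3.0,    # M_glass
    cp_wall=840.0,       # C_p,glass
    heat_duty_w=1000.0,  # Q_R
    t_start_c=20.0,
    t_bubble_c=70.0,     # assumed bubble point of the charge
)
print(f"Estimated heating time: {t_heat:.0f} s ({t_heat / 60:.1f} min)")
```

With these placeholder values the wall contributes roughly a third of the total thermal mass, which illustrates why the equipment term cannot be neglected at pilot scale.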

Research Reagent Solutions

Table 2: Key Materials for Reactive Distillation Experiments

| Reagent/Material | Function | Example & Notes |
|---|---|---|
| Ion Exchange Resin | Solid acid catalyst to enhance reaction rate. | Amberlyst-15; used heterogeneously, simplifying separation and enabling reuse [23]. |
| Methanol & Acetic Acid | Reactants for model esterification reaction. | High-purity grades recommended to avoid catalyst poisoning and side reactions. |
| Structured Packing | Provides surface for reaction and mass transfer. | Sulzer Katapak-type packings are commercially used to hold catalyst and improve efficiency [23]. |

Membrane Reactors

Application Notes

Membrane reactors represent a synergistic intensification strategy by integrating a reaction zone with a selective membrane for in-situ separation. This continuous removal of a reaction product, such as hydrogen in reforming reactions or water in esterification, shifts chemical equilibrium toward higher product yields, allowing operations under milder conditions and reducing downstream separation costs [6]. Zeolite membranes, particularly the CHA type (e.g., SSZ-13, SAPO-34), are highly effective due to their uniform, molecular-sized pores that provide excellent shape-selectivity for separations like CO₂ capture, natural gas purification, and dehydration of organic solvents [25].

A significant challenge for their commercial adoption has been the prolonged synthesis time and associated energy costs. Recent advances demonstrate that reactor miniaturization can drastically intensify the synthesis process itself. Using a small tubular reactor (ID: 4.0 mm), high-quality CHA membranes were synthesized in just 40 minutes, compared to the several hours or even days required by conventional hydrothermal methods [25]. These membranes demonstrated a high water/2-propanol separation factor (α(H₂O/2-PrOH) = 1662) and a total flux of 2.97 kg/(m²·h) for water separation from azeotropic mixtures, showcasing their potential for energy-saving separation technologies [25].
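To illustrate what a separation factor of this magnitude implies, the sketch below converts it into a permeate composition and component fluxes, using the usual definition α = (y_w / y_p) / (x_w / x_p) for water (w) and 2-propanol (p). The feed composition is not stated in the text above, so the value used here (10 wt% water) is a hypothetical assumption, as are the function and variable names.

```python
def permeate_water_fraction(alpha: float, feed_water_fraction: float) -> float:
    """Water fraction in the permeate for a binary water/alcohol feed.

    Uses alpha = (y_w / (1 - y_w)) / (x_w / (1 - x_w)). The same alpha
    applies to mass or mole fractions because the molar-mass ratio cancels.
    """
    if not 0.0 < feed_water_fraction < 1.0:
        raise ValueError("feed_water_fraction must be in (0, 1)")
    ratio = alpha * feed_water_fraction / (1.0 - feed_water_fraction)
    return ratio / (1.0 + ratio)


ALPHA = 1662.0          # separation factor reported above
TOTAL_FLUX = 2.97       # total flux reported above, kg/(m^2 h)
FEED_WATER_WT = 0.10    # hypothetical feed: 10 wt% water, 90 wt% 2-propanol

y_water = permeate_water_fraction(ALPHA, FEED_WATER_WT)
water_flux = TOTAL_FLUX * y_water            # kg/(m^2 h), mass basis
alcohol_flux = TOTAL_FLUX * (1.0 - y_water)  # kg/(m^2 h), mass basis

print(f"Permeate water content: {y_water:.2%}")
print(f"Water flux: {water_flux:.3f} kg/(m^2 h)")
print(f"2-Propanol flux: {alcohol_flux:.4f} kg/(m^2 h)")
```

For the assumed feed, the permeate would be more than 99 wt% water, so almost all of the reported total flux is water.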

Experimental Protocol: Rapid Synthesis of a CHA Zeolite Membrane

Application: Energy- and time-efficient synthesis of a CHA-type zeolite membrane on a capillary support for molecular separation.

Principle: A significant reduction in reactor size drastically improves heat transfer, enabling very rapid and reproducible hydrothermal synthesis of a continuous, defect-free zeolite membrane layer via secondary growth [25].

Materials & Equipment:

- Capillary Support: α-Al₂O₃, OD: 2.5 mm, ID: 2.0 mm, mean pore size: 150 nm.

- Small Tubular Reactor: PTFE-lined, ID: 4.0 mm, OD: 6.0 mm, L: 135 mm.

- Precursor Chemicals: Colloidal silica (LUDOX AS-40), sodium aluminate, TMAdaOH (structure-directing agent).

- Seed Crystals: Pre-synthesized CHA zeolite powder.

- Oil Bath: Pre-heated to a stable 160°C.

Procedure:

- Support Seeding (Dip-Coating): Deposit CHA seed crystals onto the external surface of the capillary support by dip-coating. Withdraw the support from the seed solution at a constant rate (e.g., 5 mm/min). Dry the seeded support at room temperature or an elevated temperature (e.g., 200°C) [25].

- Reactor Assembly: Place the seeded capillary support inside the small tubular reactor. Fill the reactor with the precursor synthesis solution to approximately 70% of its volume [25].

- Rapid Hydrothermal Synthesis: Immerse the sealed tubular reactor in a pre-heated oil bath at 160°C for a synthesis time of 40 minutes. This intensified heating step promotes the rapid growth of a continuous membrane layer from the seed crystals [25].

- Membrane Characterization: After synthesis, cool the reactor, retrieve the membrane, and characterize it. A synthesis time of 40 minutes has been shown to produce membranes with a thickness of about 0.65 µm, good performance, and enhanced reproducibility by reducing defects [25].
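The fill level in the reactor assembly step can be translated into an approximate solution volume from the reactor dimensions listed above. The sketch below treats the reactor bore as a plain cylinder and ignores the volume displaced by the capillary support, so it gives an upper bound rather than an exact charge; the function name is an illustrative choice.

```python
import math


def cylinder_volume_ml(inner_diameter_mm: float, length_mm: float) -> float:
    """Internal volume of a cylindrical bore in millilitres (1 mL = 1000 mm^3)."""
    area_mm2 = math.pi * inner_diameter_mm ** 2 / 4.0
    return area_mm2 * length_mm / 1000.0


REACTOR_ID_MM = 4.0      # from the materials list
REACTOR_LENGTH_MM = 135  # from the materials list
FILL_FRACTION = 0.70     # approximate fill level from the procedure

reactor_volume = cylinder_volume_ml(REACTOR_ID_MM, REACTOR_LENGTH_MM)
solution_volume = FILL_FRACTION * reactor_volume

print(f"Reactor internal volume: {reactor_volume:.2f} mL")
print(f"Precursor solution at 70% fill: {solution_volume:.2f} mL")
```

The very small liquid inventory, on the order of 1 mL, is consistent with the rapid heat-up that the protocol relies on.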

Research Reagent Solutions

Table 3: Key Materials for CHA Membrane Synthesis

| Reagent/Material | Function | Example & Notes |
|---|---|---|
| Structure-Directing Agent (SDA) | Templates the formation of the CHA crystal structure. | N,N,N-Trimethyl-1-adamantammonium hydroxide (TMAdaOH); removed via calcination post-synthesis [25]. |
| Porous Capillary Support | Mechanical support for the thin zeolite layer. | α-Al₂O₃ capillary (2.5 mm OD); small diameter is crucial for intensified heat transfer [25]. |
| Seed Crystals | Pre-formed nanoscale crystals to promote uniform membrane growth. | Pre-synthesized CHA zeolite powder; quality of the seed layer critically impacts final membrane performance [25]. |

Microreactors

Application Notes

Microreactors achieve spatial intensification by confining chemical processes to channels with diameters typically less than 1 mm. This miniaturization leads to an enormous surface-to-volume ratio (up to 10,000 m²/m³), which enables exceptional control over reaction parameters and intensifies heat and mass transfer by orders of magnitude compared to conventional batch reactors [26] [6]. They are particularly advantageous for reactions that are highly exothermic, involve hazardous intermediates (e.g., in explosive regimes), or require precise kinetic studies [26].
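The surface-to-volume figures quoted above follow from simple geometry. For a straight cylindrical channel of diameter d, the wall area per unit volume is 4/d, as the short sketch below shows. The channel and vessel diameters are illustrative choices, and the vessel estimate counts only the cylindrical wall.

```python
def surface_to_volume(diameter_m: float) -> float:
    """Wall area per unit volume (m^2/m^3) of a cylindrical channel.

    (pi * d * L) / (pi * d^2 / 4 * L) simplifies to 4 / d.
    """
    if diameter_m <= 0.0:
        raise ValueError("diameter must be positive")
    return 4.0 / diameter_m


# Illustrative diameters: two microchannels and a 1 m stirred vessel.
for label, d in (
    ("1 mm channel", 1e-3),
    ("0.4 mm channel", 0.4e-3),
    ("1 m batch vessel (wall only)", 1.0),
):
    print(f"{label:30s} {surface_to_volume(d):10.0f} m^2/m^3")
```

A 0.4 mm channel reaches about 10,000 m²/m³, whereas a 1 m vessel offers only about 4 m²/m³ of wall area, a difference of more than three orders of magnitude.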