Designing Safer Chemicals: Advanced Strategies for Toxicity Reduction in Biomedical Research


Abstract

This article provides a comprehensive guide to the principles and practices of designing safer chemicals with reduced toxicity, tailored for researchers, scientists, and drug development professionals. It explores the foundational shift from post-market testing to proactive design, details cutting-edge methodological frameworks like Safe and Sustainable by Design (SSbD), and addresses key challenges in data gaps and alternative assessment. The content further covers rigorous validation through New Approach Methodologies (NAMs) and computational tools, synthesizing insights from regulatory science, industry case studies, and emerging AI technologies to inform a new paradigm in chemical innovation for biomedical applications.

The Paradigm Shift: Foundations of Proactive Toxicity Reduction

Inherently Safer Design (ISD) represents a fundamental philosophy in chemical process safety that focuses on eliminating or reducing hazards at their source rather than relying solely on added control systems. This preemptive approach to safety was first fully articulated by chemical engineer Trevor Kletz in 1977 through his seminal principle: "What you don't have, can't leak" [1]. This philosophy emerged in response to serious process industry incidents in the 1970s and has since evolved into a systematic framework for managing chemical hazards [1].

ISD is not a specific set of technologies but rather a mindset applied throughout a manufacturing plant's life cycle—from initial research and development through plant design, operation, and eventual decommissioning [1]. The concept recognizes that while perfect safety is unattainable, processes can be made inherently safer through deliberate design choices that permanently reduce or eliminate hazards [2].

Within the hierarchy of hazard control, ISD occupies the most effective position by addressing hazards fundamentally rather than through procedural controls or protective equipment [1]. This approach has gained recognition from regulatory bodies worldwide, including the US Nuclear Regulatory Commission and the UK Health and Safety Executive, which state that "Major accident hazards should be avoided or reduced at source through the application of principles of inherent safety" [2].

Core Principles of Inherently Safer Design

The implementation of inherent safety relies on four well-established principles that provide a systematic framework for hazard reduction. These principles, developed by Kletz and expanded by subsequent researchers, form the foundation of ISD practice [2]:

Minimize (Intensify)

The minimize principle involves reducing the quantity of hazardous materials present in a process at any given time. This can be achieved through process intensification, using smaller reactors or equipment that maintain the same production rate with less inventory [2]. Reducing the inventory of hazardous material reduces the potential consequences of an accidental release. This principle corresponds to Kletz's original term "intensification," which chemical engineers understand to mean "smaller equipment with the same product throughput" [2].

Substitute

Substitution focuses on replacing hazardous materials or processes with less hazardous alternatives. Examples include using water and detergent for cleaning instead of flammable solvents, or selecting less toxic catalysts in chemical reactions [2] [3]. The Tox-Scapes approach exemplifies this principle through its systematic evaluation of toxicity profiles for chemical reactions, enabling researchers to identify reaction pathways with the lowest toxicological impact [4].

Moderate (Attenuate)

Moderation involves using hazardous materials in less hazardous forms or under less hazardous conditions. This can include diluting concentrated solutions, using solvents at lower temperatures, or storing materials under conditions that reduce their inherent hazard [2]. Kletz originally referred to this principle as "attenuation," emphasizing the reduction of hazard strength rather than its complete elimination [2].

Simplify

Simplification aims to eliminate unnecessary complexity that could lead to operating errors or equipment failures. This includes designing processes that are inherently easier to control, eliminating unnecessary equipment, and making the status of equipment clear to operators [2]. Simplification reduces the likelihood of human error and makes correct operation the most straightforward path.

Table 1: Inherently Safer Design Principles and Applications

| Principle | Core Concept | Example Applications |
|---|---|---|
| Minimize | Reduce quantity of hazardous materials | Smaller reactor volumes; continuous processing instead of batch; process intensification |
| Substitute | Replace with less hazardous materials | Aqueous cleaning systems instead of organic solvents; less toxic catalysts [4] [5] |
| Moderate | Use less hazardous conditions | Diluted rather than concentrated acids; refrigeration of volatile materials; low-temperature processes |
| Simplify | Eliminate unnecessary complexity | Gravity-flow instead of pumped systems; elimination of unnecessary equipment; fail-safe designs |

Troubleshooting Guides: Implementing ISD in Research & Development

FAQ: Overcoming Common ISD Implementation Challenges

Q1: How can we justify the potentially higher initial costs of implementing ISD principles?

A comprehensive cost-benefit analysis should consider not only immediate capital costs but also long-term operational savings through reduced need for safety systems, lower insurance premiums, decreased regulatory burden, and avoided costs of potential incidents. ISD implementations often show significant return on investment through reduced engineering, maintenance, and operational costs over the facility lifecycle [6].
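One way to structure the lifecycle comparison in Q1 is to discount each design's yearly costs to a present value. The sketch below is hypothetical: the 8% discount rate, the cost figures, and the helper names are illustrative assumptions, not data from [6]. Avoided incident costs or insurance savings could be folded into the yearly figures in the same way.

```python
def npv(rate, cash_flows):
    """Net present value of yearly amounts; cash_flows[0] falls in year 0 (undiscounted)."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def lifecycle_costs(rate, designs):
    """Discounted lifecycle cost for each named design (lower is better).

    designs maps a design name to a list of yearly costs (positive = money spent).
    """
    return {name: npv(rate, costs) for name, costs in designs.items()}


# Hypothetical 10-year comparison: the ISD option costs more in year 0 but
# needs fewer add-on safety systems and less maintenance in later years.
designs = {
    "conventional": [1_000_000] + [150_000] * 10,
    "inherently_safer": [1_300_000] + [90_000] * 10,
}
costs = lifecycle_costs(0.08, designs)
for name, cost in sorted(costs.items(), key=lambda item: item[1]):
    print(f"{name}: {cost:,.0f}")
```

With these illustrative numbers, the higher up-front cost of the inherently safer option is more than offset by its lower running costs once the later years are discounted.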

Q2: What if substituting a hazardous chemical reduces product efficacy?

The goal of ISD is to preserve efficacy while reducing toxicity, not to compromise function [7]. When direct substitution affects performance, consider alternative approaches such as process modification, different delivery mechanisms, or reformulation. The iterative Tox-Scapes methodology can help identify optimal combinations of catalysts, solvents, and reagents that balance performance with safety [4].

Q3: How can we apply ISD principles to existing facilities where major design changes are challenging?

ISD principles can be applied at any process life cycle stage, though opportunities are greatest during initial design [1]. For existing facilities, focus on operational changes like reducing inventory (minimize), using more dilute solutions (moderate), or simplifying procedures. A phased approach allows incremental implementation while maintaining operations [3].

Q4: How do we handle situations where ISD creates new, different hazards?

Complete hazard elimination is rare; the goal is net risk reduction. Conduct a comprehensive hazard analysis comparing original and modified processes. ISD requires informed decision-making that considers the full spectrum of hazards across the entire life cycle [1]. The Rapid Risk Analysis Based Design (RRABD) approach provides a framework for evaluating these tradeoffs [6].

Q5: What resources are available for quantifying inherent safety performance?

Several assessment tools exist, including the Dow Fire and Explosion Index, which measures inherent danger [2]. Research institutions have developed specialized indices such as Heikkilä's Inherent Safety Index and the Safety Weighted Hazard Index (SWeHI) for more specific applications [2].
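The Dow Fire and Explosion Index mentioned above is conventionally computed as a Material Factor (MF) multiplied by a unit hazard factor F3, where F3 = F1 × F2 (the general and special process hazard factors, each 1.00 plus penalties). The sketch below follows that structure under stated assumptions: the F3 cap of 8.0 and the degree-of-hazard bands are the values commonly cited for the 7th edition of Dow's guide and should be checked against the guide itself. Every MF and penalty value is hypothetical. The example shows how a moderation step that lowers penalties lowers the index.

```python
def dow_fei(material_factor, general_penalties, special_penalties, f3_cap=8.0):
    """Fire and Explosion Index = MF x F3, where F3 = F1 x F2.

    F1 (general process hazards) and F2 (special process hazards) each start
    from a base value of 1.00 to which the applicable penalties are added.
    F3 is capped; 8.0 is the cap commonly cited for the 7th edition guide.
    """
    f1 = 1.0 + sum(general_penalties)
    f2 = 1.0 + sum(special_penalties)
    f3 = min(f1 * f2, f3_cap)
    return material_factor * f3


def degree_of_hazard(fei):
    """Map an F&EI value to the commonly cited qualitative bands."""
    for upper, label in ((60, "Light"), (96, "Moderate"), (127, "Intermediate"), (158, "Heavy")):
        if fei <= upper:
            return label
    return "Severe"


# Hypothetical unit with MF = 24: two general and two special process penalties.
base = dow_fei(24, [0.5, 0.3], [0.6, 0.4])
# Hypothetical moderated design (e.g., lower operating pressure) with smaller special penalties.
moderated = dow_fei(24, [0.5, 0.3], [0.2])
print(round(base, 1), degree_of_hazard(base))
print(round(moderated, 1), degree_of_hazard(moderated))
```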

Troubleshooting Technical ISD Implementation

Table 2: Troubleshooting Common ISD Implementation Challenges

| Challenge | Root Cause | ISD Solution Approach | Verification Method |
|---|---|---|---|
| Residual toxicity in alternative chemicals | Incomplete hazard assessment during substitution | Apply Tox-Scapes methodology for comprehensive toxicity profiling [4]; use tumor selectivity indices (tSIs) for refined chemical selection | Cytotoxicity testing (CC50) in human cell lines; computational toxicology modeling |
| Process instability after intensification | Inadequate understanding of scaled-down kinetics | Implement advanced process control systems; real-time monitoring; phased intensification with rigorous testing | Statistical process control charts; comparative risk assessment; performance validation at pilot scale |
| Unexpected interaction between substituted materials | Insufficient compatibility testing | Systematic compatibility screening using predictive methods; application of analytical tools like DSC and TGA | Accelerated rate calorimetry; reaction hazard analysis; small-scale compatibility testing |
| Increased energy consumption with safer alternatives | Narrow problem definition that doesn't consider full life cycle | Holistic assessment including environmental impact; energy integration techniques; circular economy principles | Life cycle assessment (LCA); energy efficiency metrics; sustainability indices |
| Operator resistance to simplified but unfamiliar processes | Inadequate training and engagement during transition | Participatory design approach; clear communication of safety benefits; phased implementation with comprehensive training | Procedure compliance audits; near-miss reporting rates; safety culture surveys |

Experimental Protocols for Safer Chemical Design

Protocol: Tox-Scapes Methodology for Reaction Toxicity Assessment

The Tox-Scapes approach provides a systematic framework for evaluating the toxicological impact of chemical reactions, enabling researchers to identify pathways with the lowest hazardous footprint [4].

Materials and Equipment

- Human cell lines (appropriate for expected exposure pathways)

- Cell culture facilities and consumables

- Test compounds (catalysts, solvents, reagents)

- Microplate reader for cytotoxicity assessment

- Analytical instrumentation (HPLC, GC-MS) for compound verification

- Computational resources for data analysis and visualization

Procedure

Sample Preparation: Prepare standardized solutions of all reaction components (catalysts, solvents, reagents, and predicted reaction products) at concentrations relevant to the proposed process.

Cytotoxicity Testing (CC50 Determination):

- Expose human cell lines to serial dilutions of each test compound

- Incubate for predetermined exposure periods (typically 24-72 hours)

- Measure cell viability using standardized assays (MTT, XTT, or similar)

- Calculate half-maximal cytotoxic concentration (CC50) for each compound
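CC50 values are usually obtained by fitting a sigmoidal (e.g., four-parameter logistic) dose-response curve. As a simpler, dependency-free stand-in, the sketch below estimates CC50 by log-linear interpolation between the two tested concentrations that bracket 50% viability. This substitution, the function name, and the example data are assumptions for illustration, not the method reported in [4].

```python
import math


def cc50_interpolated(concentrations, viability_percent):
    """Estimate CC50 by log-linear interpolation (a simplification of curve fitting).

    concentrations: ascending tested concentrations (e.g., µM), all > 0.
    viability_percent: % viability relative to untreated control, same order.
    Returns None if 50% viability is not bracketed by the tested range.
    """
    points = list(zip(concentrations, viability_percent))
    for (c_lo, v_lo), (c_hi, v_hi) in zip(points, points[1:]):
        if v_lo >= 50.0 >= v_hi and v_lo != v_hi:
            # Fraction of the way from v_lo down to 50% viability
            frac = (v_lo - 50.0) / (v_lo - v_hi)
            log_cc50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_cc50
    return None


# Hypothetical viability data (% of untreated control) for one compound
conc_um = [1, 10, 100, 1000]
viability = [95, 80, 20, 5]
cc50 = cc50_interpolated(conc_um, viability)
print(f"CC50 ~ {cc50:.1f} µM")
```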

Toxicity Profiling:

- Compile CC50 values for all reaction components

- Apply weighting factors based on relative quantities used in the process

- Calculate overall toxicity score for each reaction pathway

Pathway Evaluation:

- Screen multiple reaction routes (e.g., 864 routes, as in the original study [4])

- Compare overall toxicity scores across different pathways

- Identify critical toxicity drivers within each pathway
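The profiling and pathway-evaluation steps can be sketched as a weighted sum over reaction components, followed by exhaustive enumeration of component combinations. The scoring rule here is a hypothetical choice: relative quantity divided by CC50, summed over components, so a higher score means a larger cytotoxic burden. All component names and values are also hypothetical, and [4] may weight and aggregate differently. The same enumeration extends to larger component lists, such as the hundreds of routes screened in the original study.

```python
from itertools import product

# Hypothetical CC50 values (µM) for candidate components; lower = more cytotoxic.
CC50 = {
    "cat_A": 5.0, "cat_B": 40.0,
    "solv_X": 2000.0, "solv_Y": 500.0,
    "reag_P": 800.0, "reag_Q": 150.0,
}

# Hypothetical relative quantities per unit of product (the weighting factors).
QUANTITY = {
    "cat_A": 0.02, "cat_B": 0.02,
    "solv_X": 10.0, "solv_Y": 10.0,
    "reag_P": 1.5, "reag_Q": 1.5,
}


def contribution(component):
    """Weighted toxicity contribution of one component: quantity / CC50."""
    return QUANTITY[component] / CC50[component]


def route_toxicity_score(components):
    """Overall route score: sum of component contributions (higher = worse)."""
    return sum(contribution(c) for c in components)


def rank_routes(catalysts, solvents, reagents):
    """Score every catalyst x solvent x reagent combination, least toxic first."""
    routes = [(route_toxicity_score(r), r) for r in product(catalysts, solvents, reagents)]
    return sorted(routes)


ranked = rank_routes(["cat_A", "cat_B"], ["solv_X", "solv_Y"], ["reag_P", "reag_Q"])
best_score, best_route = ranked[0]
# The component contributing most to the best route is its critical toxicity driver.
driver = max(best_route, key=contribution)
print(best_route, round(best_score, 6), "driver:", driver)
```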

Optimization and Selection:

- Select catalysts, solvents, and reagents that minimize overall toxicity

- Apply tumor selectivity indices (tSIs) where appropriate to enhance selective toxicity profiles

- Verify performance and synthetic feasibility of leading candidates
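A tumor selectivity index is commonly defined as the CC50 in a non-tumor cell line divided by the CC50 in a tumor cell line. Values above 1 therefore indicate preferential toxicity toward tumor cells. The minimal sketch below assumes that definition, and the compound names and CC50 pairs are hypothetical.

```python
def tumor_selectivity_index(cc50_normal, cc50_tumor):
    """tSI = CC50 (non-tumor cell line) / CC50 (tumor cell line).

    Values > 1 mean the compound is more cytotoxic to the tumor cells.
    """
    if cc50_normal <= 0 or cc50_tumor <= 0:
        raise ValueError("CC50 values must be positive")
    return cc50_normal / cc50_tumor


# Hypothetical CC50 pairs in µM: (non-tumor line, tumor line)
candidates = {"compound_1": (120.0, 15.0), "compound_2": (30.0, 25.0)}
tsi = {name: tumor_selectivity_index(*pair) for name, pair in candidates.items()}
preferred = max(tsi, key=tsi.get)
print(tsi, "preferred:", preferred)
```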

Data Analysis and Interpretation

The Tox-Scapes methodology generates visual, quantitative maps of reaction toxicity that enable direct comparison of alternative pathways. Applied to the Buchwald-Hartwig amination reaction, it identified tetrahydrofuran as a lower-toxicity solvent and pinpointed specific catalysts that contributed significantly to overall toxicity [4].

Protocol: Rapid Risk Analysis Based Design (RRABD)

The RRABD approach integrates inherent safety principles with efficient risk assessment during early process design stages [6].

Procedure

Scenario Identification: Develop credible accident scenarios using structured approaches (HAZOP, FMEA, or What-If analysis)

Rapid Risk Assessment:

- Apply simplified quantitative risk assessment methods

- Focus on consequence analysis and frequency estimation for dominant risk scenarios

- Use historical incident data and predictive models

ISD Option Generation:

- Brainstorm design alternatives applying each ISD principle (Minimize, Substitute, Moderate, Simplify)

- Develop multiple design options with varying levels of inherent safety

Comparative Evaluation:

- Assess options using technical and economic criteria

- Evaluate risk reduction potential versus implementation cost

- Select optimal design balancing safety and economic considerations
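The comparative evaluation step can be illustrated by expressing risk as the sum of frequency × consequence over the credible scenarios. The sketch below assumes a simple selection rule: discard options that do not meet a tolerable-risk target, then rank the rest by risk reduction per unit of annualized cost. This rule, the class names, and all numbers are hypothetical and are not taken from the propylene/propylene oxide case study in [6].

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Scenario:
    frequency_per_year: float  # estimated frequency of the accident scenario
    consequence: float         # consequence per event, in any consistent unit


@dataclass
class DesignOption:
    name: str
    scenarios: List[Scenario]
    annualized_cost: float     # added cost of the option per year


def annual_risk(scenarios):
    """Aggregate risk as the sum of frequency x consequence over all scenarios."""
    return sum(s.frequency_per_year * s.consequence for s in scenarios)


def rank_options(base_risk, options, tolerable_risk):
    """Keep options that meet the tolerable-risk target and rank them by
    risk reduction per unit of annualized cost (highest first)."""
    ranked = []
    for opt in options:
        risk = annual_risk(opt.scenarios)
        if risk <= tolerable_risk and opt.annualized_cost > 0:
            ranked.append(((base_risk - risk) / opt.annualized_cost, opt.name, risk))
    return sorted(ranked, reverse=True)


# Hypothetical base case and ISD options (frequencies per year, consequences in arbitrary units)
base = annual_risk([Scenario(1e-3, 100.0), Scenario(1e-2, 5.0)])
options = [
    DesignOption("minimize inventory", [Scenario(1e-3, 30.0), Scenario(1e-2, 5.0)], 10.0),
    DesignOption("substitute material", [Scenario(1e-3, 10.0), Scenario(1e-2, 1.0)], 40.0),
    DesignOption("procedural controls only", [Scenario(8e-4, 100.0), Scenario(1e-2, 5.0)], 2.0),
]
for ratio, name, risk in rank_options(base, options, tolerable_risk=0.1):
    print(f"{name}: residual risk {risk:.3f}, reduction per cost {ratio:.5f}")
```

Here the procedural-only option is cheapest but is filtered out because its residual risk stays above the target. This mirrors the ISD preference for removing hazards over adding controls.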

Iterative Refinement:

- Refine selected design through additional risk analysis cycles

- Document inherent safety features and residual risks

Application Example

In a case study evaluating propylene and propylene oxide storage systems, the RRABD approach identified Option 4 as optimal, effectively balancing risk reduction with cost considerations [6]. This method demonstrated 45% time savings compared to traditional risk analysis approaches while maintaining assessment accuracy [6].

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Research Reagents for Safer Chemical Design

| Reagent Category | Specific Examples | Function in Safer Design | Safety & Environmental Profile |
|---|---|---|---|
| Green Solvents | Tetrahydrofuran, water, supercritical CO₂ | Lower toxicity alternatives to halogenated and aromatic solvents; minimized environmental persistence [4] [5] | Reduced bioaccumulation potential; lower volatility; minimized toxicity in aquatic and terrestrial environments |
| Alternative Catalysts | Palladium, nickel, iron complexes | Reduced toxicity while maintaining catalytic efficiency; enable milder reaction conditions [4] | Lower heavy metal content; reduced environmental mobility; improved selectivity minimizing byproducts |
| Bio-Based Feedstocks | Plant-derived alcohols, acids, polymers | Renewable resources with potentially lower toxicity profiles; reduced fossil fuel dependence | Enhanced biodegradability; lower carbon footprint; reduced ecosystem toxicity |
| Safer Surfactants | Sugar-based, amino acid-derived surfactants | Replacement of fluorosurfactants in applications like firefighting foams [7] [5] | Reduced environmental persistence; lower bioaccumulation potential; minimized toxicity to aquatic organisms |
| Inherently Safer Reagents | Solid-supported reagents, diluted acids | Reduced hazard through physical form or concentration; minimized storage and handling risks | Lower volatility; reduced corrosion potential; minimized reaction runaway risks |

| Ammonia-d3 | Ammonia-d3 | Deuterated Reagent | CAS 13550-49-7 | Ammonia-d3 (ND3), a stable isotope-labeled reagent for NMR spectroscopy & metabolic research. For Research Use Only. Not for human or veterinary use. | Bench Chemicals |

| 4-Chloro-1-indanone | 4-Chloro-1-indanone|CAS 15115-59-0|≥95% Purity | Bench Chemicals |

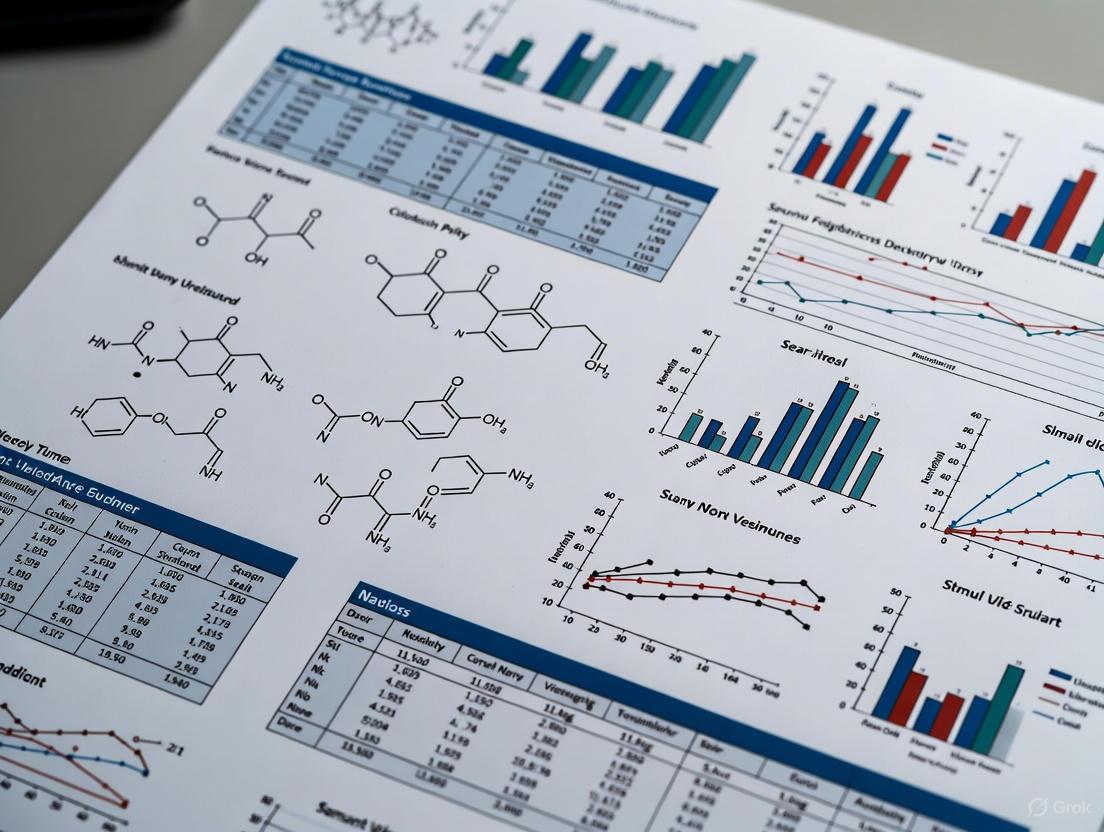

Workflow Visualization: Implementing ISD in Research

Figure: Inherent Safety Design Implementation Workflow

Figure: Tox-Scapes Methodology for Reaction Assessment

Welcome to this technical support center, designed to assist researchers, scientists, and drug development professionals in integrating key modern drivers—regulatory trends, green chemistry principles, and planetary boundaries—into their toxicity reduction research and safer chemical design. This resource provides practical, actionable guidance in a question-and-answer format to help you troubleshoot specific challenges encountered in the lab. By framing experimental protocols within this broader context, we aim to support the development of next-generation chemicals and pharmaceuticals that are inherently safer for human health and the global ecosystem.

Regulatory Trends: FAQs on Navigating the Compliance Landscape

Q1: What are the most pressing U.S. regulatory changes in 2025 affecting chemical risk assessment and management?

Recent developments from the U.S. Environmental Protection Agency (EPA) highlight several key trends that researchers must monitor, as they directly influence chemical prioritization and risk evaluation.

- Ongoing Scrutiny of Organophosphate Pesticides: A writ of mandamus was filed against the EPA in June 2025 to compel the agency to respond to a 2021 petition to revoke food tolerances and cancel registrations for organophosphate pesticides. This legal pressure signifies a continued high-risk regulatory environment for this chemical class [8].

- Significant New Use Rules (SNURs): The EPA issued final SNURs for certain chemical substances in July 2025. These rules require persons who intend to manufacture (including import) or process these chemicals for a significant new use to notify the EPA at least 90 days before commencing that activity. Compliance is critical for ongoing research and development involving these substances [8].

- Risk Management Rule Reconsideration: The EPA is reconsidering the recently promulgated risk management rule for perchloroethylene (PCE). The agency is seeking public comment, indicating potential shifts in how unreasonable risks from well-known solvents will be managed, which could serve as a model for other substances [8].

- Focus on Safer Chemical Ingredients: The EPA added 18 chemicals to its Safer Chemical Ingredients List (SCIL) in July 2025, supporting a commitment to "transparency, innovation and safer chemistry." This list is a valuable resource for researchers seeking pre-evaluated, safer alternatives in product formulations [8].

Q2: How does the "Safe and Sustainable-by-Design (SSbD)" framework from Europe influence global R&D?

The European Commission's SSbD framework is a pre-market approach that is reshaping chemical innovation globally, urging a fundamental rethink of R&D processes [9]. It requires a lifecycle perspective, assessing environmental and human impacts at every stage of chemical development and usage. The recommended criteria involve a two-phase approach:

- Phase 1 - Design Principles: This focuses on integrating:

- Phase 2 - Holistic Assessment: This step involves a thorough assessment of material hazards, human health and safety effects in the processing and use phases, lifecycle impacts, and social and economic sustainability [9].

Troubleshooting Tip: If your novel chemical entity is flagged for having a complex and hazardous synthesis pathway, revisit the SSbD design principles. Can a bio-based or waste feedstock replace a petroleum-based one? Can the molecule be designed for easier degradation at end-of-life without losing functionality?

Green Chemistry Principles: FAQs for Laboratory Implementation

Q3: Our drug discovery team operates under tight deadlines. Why should green chemistry be a priority in early-stage research?

In early-stage research, the mentality that "somebody else will 'fix' the synthesis" later is a major hurdle. However, green syntheses start with the R&D scientist [10]. Embedding green chemistry principles from the beginning provides fertile ground for sustainable innovation and avoids costly, time-intensive re-engineering of routes when scaling up. A four-point plan, REAP, can help incentivize this integration [10]:

- Reward: Establish internal awards and recognition for achievements in green chemistry, including in early-stage research [10].

- Educate: Provide training on sustainability objectives and green chemistry metrics to benchmark chemistries as they are developed, helping to close the generational awareness gap [10].

- Align: Clearly show scientists how their application of green chemistry principles aligns with the organization's broader corporate sustainability goals, connecting individual lab work to larger impacts [10].

- Partner: Encourage scientists to network internally with EHS and supply chain groups, and externally through pre-competitive consortia like the ACS GCI Pharmaceutical Roundtable [10].

Q4: What are practical, quantitative metrics we can use to assess "greenness" in our synthetic routes?

Beyond yield and purity, tracking specific metrics provides a quantitative basis for comparing and improving synthetic routes. The following table summarizes key metrics to calculate for your experimental protocols.

Table: Key Green Chemistry Metrics for Experimental Assessment

| Metric | Formula / Description | Interpretation & Goal |
|---|---|---|
| Process Mass Intensity (PMI) | Total mass of materials used in process (kg) / Mass of product (kg) | Lower is better. Measures total resource consumption [10]. |
| E-Factor | Total mass of waste (kg) / Mass of product (kg) | Lower is better. A classic metric of process waste [10]. |
| Solvent Recovery Rate | (Mass of solvent recycled (kg) / Mass of solvent input (kg)) × 100% | Higher is better. Tracks circularity and waste reduction in your lab, as demonstrated by companies like Everlight Chemical [11]. |
| Atom Economy | (Molecular Weight of Desired Product / Σ Molecular Weights of All Reactants) × 100% | Higher is better. Theoretical measure of efficiency; aims to incorporate most reactant atoms into the final product. |
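The four metrics in the table reduce to simple ratios. The helper functions below are a minimal sketch, and their names are illustrative rather than taken from any cited tool. For atom economy, the reactant list takes (molecular weight, stoichiometric coefficient) pairs, so reactants used in more than one equivalent are counted correctly. The batch figures are hypothetical. The esterification example uses standard molecular weights for acetic acid, ethanol and ethyl acetate.

```python
def process_mass_intensity(total_input_kg, product_kg):
    """PMI = total mass of all materials used / mass of product."""
    return total_input_kg / product_kg


def e_factor(total_waste_kg, product_kg):
    """E-factor = total mass of waste / mass of product."""
    return total_waste_kg / product_kg


def solvent_recovery_rate(recycled_kg, solvent_input_kg):
    """Percentage of the solvent input that is recovered for reuse."""
    return 100.0 * recycled_kg / solvent_input_kg


def atom_economy(product_mw, reactants):
    """Atom economy (%) from the product MW and (MW, stoichiometric coefficient) pairs."""
    total = sum(mw * coeff for mw, coeff in reactants)
    return 100.0 * product_mw / total


# Hypothetical batch: 120 kg of inputs give 10 kg of product; the other 110 kg is waste.
print(process_mass_intensity(120.0, 10.0), e_factor(110.0, 10.0))
# Hypothetical solvent use: 60 kg recovered from 80 kg charged.
print(solvent_recovery_rate(60.0, 80.0))
# Acetic acid (60.05) + ethanol (46.07) -> ethyl acetate (88.11) + water
print(round(atom_economy(88.11, [(60.05, 1), (46.07, 1)]), 2))
```

When every non-product input is counted as waste, as in the hypothetical batch above, PMI equals the E-factor plus 1. Comparing the two is a quick consistency check on your mass accounting.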

Troubleshooting Tip: If your E-Factor is unacceptably high, focus on solvent selection and recovery. Can a less hazardous solvent be used? Can your reaction solvent be efficiently purified and reused for the same reaction, as practiced in leading industrial labs? [11]

Planetary Boundaries: FAQs for Contextualizing Research Impact

Q5: What are "planetary boundaries," and how do they relate to the work of a medicinal chemist?

The planetary boundaries framework defines a "safe operating space for humanity" by quantifying the limits of nine critical Earth system processes. Transgressing these boundaries increases the risk of generating large-scale, abrupt, or irreversible environmental changes [12]. For a chemist, this framework places your work in a macro-scale context. Evidence suggests that the planetary boundary for "novel entities"—including synthetic chemicals and plastics—has already been transgressed, pushing this process into a high-risk zone [12]. Your research directly impacts this boundary by determining whether new chemical entities introduced into commerce are benign or contribute further to environmental loading and ecosystem stress.

Q6: Our research deals with micrograms of a new compound. How can such small quantities pose a planetary risk?

The risk is not from a single experiment but from the aggregate and cumulative impact of many chemicals across their global lifecycle. A drug may be produced in metric tons annually, and its molecular structure determines its persistence, bioaccumulation potential, and toxicity (PBT properties) in the environment. Research priorities should align with the knowledge of toxicological modes of action; chemicals that target phylogenetically-conserved physiological processes (e.g., lead interfering with chlorophyll and hemoglobin synthesis) can have broad impacts on biodiversity [13]. Therefore, even at the microgram scale in the lab, assessing these fundamental properties is a critical responsibility.

Table: Status of Key Planetary Boundaries Relevant to Chemical Design (2025 Update) [12]

| Planetary Boundary | Status | Key Concern for Chemical Researchers |
|---|---|---|
| Novel Entities | Transgressed (High-risk) | Synthetic chemicals, plastics, and other new materials introduced without adequate safety testing. |
| Climate Change | Transgressed | Greenhouse gas emissions from energy-intensive synthesis and manufacturing. |
| Biosphere Integrity | Transgressed | Chemical pollution contributing to biodiversity loss and ecosystem dysfunction. |
| Freshwater Change | Transgressed | Pollution of water systems with toxic chemicals, nutrients, and recalcitrant materials. |
| Ocean Acidification | Transgressed (New in 2025) | Driven by CO2 absorption, but chemical pollution exacerbates stress on marine life. |
| Stratospheric Ozone Depletion | Safe | A success story, showing regulation works. Phasing out of ODS is a model for other boundaries. |

Integrated Experimental Protocols

Protocol 1: Toxicity Identification Evaluation (TIE) for Aqueous Effluent or Reaction Mixtures

Objective: To identify the specific chemical(s) causing toxicity in an aqueous sample, a critical step in designing safer chemicals and processes.

Detailed Methodology:

Phase I: Toxicity Characterization (Physical/Chemical Manipulations) [14]

- Step A: Graduated pH Adjustment: Test toxicity at pH 3, 6, and 9. A change in toxicity suggests a pH-dependent toxicant (e.g., ammonia).

- Step B: Filtration and Aeration: Pass sample through a 1µm filter and/or aerate with air for 1 hour. Reduction in toxicity may indicate a particulate or volatile toxicant.

- Step C: Solid Phase Extraction (SPE): Use C18 columns to separate organics from inorganics. Elute with methanol and test both fractions for toxicity.

- Toxicity Testing: After each manipulation, test for acute or chronic toxicity using standardized organisms like Ceriodaphnia dubia (water flea) or Pimephales promelas (fathead minnow) [15].

Phase II: Toxicity Identification (Analytical Methods) [14]

- Step A: High-Resolution Analysis: Subject the toxic fraction from Phase I to techniques like GC-MS, LC-MS, or ICP-MS to identify candidate toxicants.

- Step B: Fractionation & Testing: Use preparative chromatography to separate the toxic extract into sub-fractions. Test each sub-fraction for toxicity to isolate the specific causative agent.

Phase III: Toxicity Confirmation [14]

- Spiking Experiments: Add the suspected pure toxicant back into a non-toxic sample (or a control water) at the concentration measured in the original effluent. The reappearance of toxicity confirms the identity of the toxicant.

- Mass Balance Analysis: Demonstrate that the toxicity of the original sample can be accounted for by the concentration and known toxicity of the confirmed toxicant.
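Phase III mass balance is often expressed in toxic units (TU). For the whole sample, acute TU = 100 / LC50 when the LC50 is given as % sample. For each confirmed toxicant, TU = measured concentration / LC50 of the pure chemical. The sketch below assumes simple additivity of toxic units, which will not hold for every mixture. The function names and example values are hypothetical.

```python
def sample_toxic_units(lc50_percent_sample):
    """Acute toxic units of a whole sample: TUa = 100 / LC50 (LC50 as % sample)."""
    return 100.0 / lc50_percent_sample


def chemical_toxic_units(measured_conc, lc50_conc):
    """Toxic units from one chemical: measured concentration / its LC50 (same units, e.g., mg/L)."""
    return measured_conc / lc50_conc


def mass_balance(lc50_percent_sample, suspects):
    """Fraction of whole-sample toxicity explained by the suspects,
    assuming their toxic units simply add up.

    suspects: list of (measured concentration, LC50 of the pure chemical).
    """
    explained = sum(chemical_toxic_units(c, lc50) for c, lc50 in suspects)
    return explained / sample_toxic_units(lc50_percent_sample)


# Hypothetical Phase III data: whole-sample LC50 of 25% (4 TUa) and two
# confirmed toxicants given as (measured mg/L, LC50 mg/L).
fraction = mass_balance(25.0, [(6.0, 2.0), (0.5, 0.5)])
print(f"{fraction:.0%} of sample toxicity explained")
```

A fraction well below 1 suggests that an unidentified toxicant remains. A fraction well above 1 may point to antagonism or reduced bioavailability in the sample matrix.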

Protocol 2: Integrating Green Chemistry and Planetary Boundary Principles in Molecule Design

Objective: To establish a pre-screening workflow for new chemical entities that evaluates both molecular-level hazards and global environmental impacts.

Detailed Methodology:

Step 1: In-silico Hazard Screening

- PBT Profiling: Use software tools to predict Persistence (e.g., biodegradation half-life), Bioaccumulation (e.g., log Kow), and Toxicity (e.g., fish, Daphnia, algal toxicity). Flag molecules with high PBT scores.

- Structural Alerts: Screen for known toxicophores and structural features associated with carcinogenicity, mutagenicity, or reproductive toxicity (e.g., aryl bromides, certain amines).
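Once the in-silico predictions are available, the PBT flagging step reduces to threshold comparisons. The sketch below assumes the freshwater criteria commonly cited from EU REACH Annex XIII: a half-life in fresh water above 40 days for P, a BCF above 2000 for B, and a chronic NOEC below 0.01 mg/L for T. It uses log Kow > 4.5 as a screening proxy for B when no BCF prediction exists. Verify these thresholds against the regulation that applies in your jurisdiction. The data class and candidate values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Screening thresholds commonly cited from EU REACH Annex XIII (freshwater criteria);
# verify against the current regulation before relying on them.
P_HALF_LIFE_FRESHWATER_DAYS = 40.0
B_BCF = 2000.0
B_LOG_KOW_SCREEN = 4.5        # screening proxy used only when no BCF is available
T_CHRONIC_NOEC_MG_L = 0.01


@dataclass
class Prediction:
    name: str
    half_life_water_days: float
    log_kow: float
    chronic_noec_mg_l: float
    bcf: Optional[float] = None  # predicted bioconcentration factor, if any


def pbt_flags(p):
    """Return which of the P, B and T screening criteria a prediction meets."""
    persistent = p.half_life_water_days > P_HALF_LIFE_FRESHWATER_DAYS
    if p.bcf is not None:
        bioaccumulative = p.bcf > B_BCF
    else:
        bioaccumulative = p.log_kow > B_LOG_KOW_SCREEN
    toxic = p.chronic_noec_mg_l < T_CHRONIC_NOEC_MG_L
    return {"P": persistent, "B": bioaccumulative, "T": toxic}


# Hypothetical in-silico predictions for two candidate molecules
candidates = [
    Prediction("candidate_1", half_life_water_days=120, log_kow=5.8, chronic_noec_mg_l=0.004),
    Prediction("candidate_2", half_life_water_days=12, log_kow=2.1, chronic_noec_mg_l=1.5),
]
for c in candidates:
    flags = pbt_flags(c)
    print(c.name, flags, "-> PBT" if all(flags.values()) else "")
```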

Step 2: Assess Synthetic Route Using Green Chemistry Principles

- Atom Economy Calculation: Calculate the atom economy for the proposed route. Prioritize routes with higher atom economy to minimize waste generation at the molecular level [10].

- Solvent & Reagent Selection Guide:

  - Prefer: Water, ethanol, 2-propanol, acetone, ethyl acetate.

  - Avoid: Chlorinated solvents (e.g., DCM, chloroform), ethereal solvents (e.g., diethyl ether, THF without stabilizer), and other high-hazard substances (e.g., DMF, NMP) where possible.

- Energy Assessment: Evaluate if the reaction requires prolonged heating, cryogenic conditions, or high pressure. Seek milder alternatives to reduce energy footprint, a key factor in the Climate Change planetary boundary [12].

Step 3: Planetary Boundary Impact Check

- Feedstock Origin: Is the starting material derived from fossil fuels (adding pressure on the already transgressed Climate Change boundary) or from a renewable, bio-based source (e.g., microalgae, agricultural waste) [9]?

- End-of-Life Fate: Is the molecule readily biodegradable? Does it have the potential to break down into harmless substances, or could it persist as a "novel entity" [13]? Does its synthesis or use mobilize heavy metals or nutrients (e.g., N, P) that could exacerbate the "Biogeochemical Flows" boundary [12]?

Step 4: Design Iteration

- Use the information from Steps 1-3 to iteratively redesign the molecule or its synthesis. For example, introduce ester linkages to enhance biodegradability or select a synthetic route that avoids stoichiometric heavy metal reagents.

The Scientist's Toolkit: Essential Research Reagents & Solutions

This table details key materials and concepts used in the fields of toxicity reduction and sustainable chemical design.

Table: Essential Toolkit for Safer Chemical Design Research

| Item or Concept | Function / Description | Relevance to Key Drivers |
|---|---|---|
| C18 Solid Phase Extraction (SPE) Cartridges | Used in TIE Protocol Phase I to separate organic contaminants from inorganic matrix in aqueous samples. | Regulatory: Critical for identifying toxicants in effluent compliance testing [14]. |
| Ceriodaphnia dubia (Water Flea) | A standard freshwater crustacean used in acute and chronic toxicity bioassays. | Regulatory: A key test species for Whole Effluent Toxicity (WET) permits [15]. |
| Safer Chemical Ingredients List (SCIL) | An EPA list of chemicals evaluated and determined to meet Safer Choice criteria. | Green Chemistry: A trusted resource for finding safer alternative chemicals in formulations [8]. |
| REAP Framework | A 4-point system (Reward, Educate, Align, Partner) to incentivize green chemistry in industrial research. | Green Chemistry: A practical management tool for embedding sustainability in R&D culture [10]. |
| Planetary Boundaries Framework | A science-based framework defining the environmental limits within which humanity can safely operate. | Planetary Boundaries: Provides the macro-scale context for assessing the environmental impact of novel chemicals [12] [13]. |
| Safe and Sustainable-by-Design (SSbD) | A pre-market EU framework for assessing chemicals based on safety and sustainability throughout their lifecycle. | All Drivers: Integrates regulatory foresight, green chemistry, and planetary health into a single approach [9]. |
| Bio-based Feedstocks (e.g., microalgae) | Renewable materials from living organisms used as alternatives to fossil fuel-derived feedstocks. | Planetary Boundaries/Green Chem: Reduces reliance on fossil fuels, promotes circularity, and can have a lower carbon footprint [9]. |


Frequently Asked Questions (FAQs)

What defines a PBT substance and why is it a major concern for long-term environmental health?

PBT stands for Persistent, Bioaccumulative, and Toxic. This class of compounds poses a significant threat due to its unique combination of properties [16]:

- Persistence: These substances have a high resistance to degradation from both abiotic (e.g., sunlight) and biotic (e.g., bacteria) factors, allowing them to remain in the environment for long periods and travel far from their original source [16] [17].

- Bioaccumulation: PBTs accumulate in living organisms at a rate faster than they are eliminated, often because they are fat-soluble. This leads to increasingly high concentrations in body tissues over time [16] [17].

- Toxicity: They are highly toxic, capable of causing adverse health effects—such as cancer, neurological damage, or developmental disorders—even at very low concentrations [16] [17].

The major concern is that due to their persistence and ability to biomagnify up the food chain, their harmful impacts can persist for many years even after production has ceased, making exposure difficult to reverse [17].

How does the Globally Harmonized System (GHS) classify carcinogens, and what are the key differences between Category 1 and 2?

The GHS provides a standardized approach to classifying chemical hazards. For carcinogenicity, it uses the following categories [18]:

- Category 1: Known or presumed human carcinogens.

  - Category 1A: Known to have carcinogenic potential for humans, based largely on human evidence.

  - Category 1B: Presumed to have carcinogenic potential for humans, based largely on animal evidence.

- Category 2: Suspected human carcinogens, where evidence is less convincing than for Category 1.

This classification system helps ensure that information about chemical hazards is consistently communicated to users worldwide through labels and safety data sheets [18] [19].

What are the primary mechanisms of action for Endocrine-Disrupting Chemicals (EDCs)?

EDCs interfere with the normal function of the endocrine system through diverse mechanisms [20]:

- They can mimic natural hormones (like estrogens or androgens), triggering an inappropriate response.

- They can block hormone receptors, preventing natural hormones from acting.

- They can interfere with the synthesis, transport, metabolism, or elimination of hormones, thereby altering their concentrations in the body.

Initially, it was thought EDCs acted mainly through nuclear hormone receptors. It is now understood that their mechanisms are much broader and can include non-steroid receptors and enzymatic pathways involved in steroid biosynthesis [20].

What are the key regulatory frameworks and occupational exposure limits for hazardous chemicals in the workplace?

Worker safety is governed by standards that set limits on airborne concentrations of hazardous chemicals. The most common types of Occupational Exposure Limits (OELs) are [19]:

- PEL (Permissible Exposure Limit): Legally enforceable limits set by OSHA.

- REL (Recommended Exposure Limit): Non-enforceable, health-based recommendations from NIOSH.

- TLV (Threshold Limit Value): Health-based guidelines developed by ACGIH, a non-governmental organization.

It is OSHA's longstanding policy that engineering and work practice controls are the primary means to reduce employee exposure, with respiratory protection used when these controls are not feasible [19] [21].
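Most OSHA PELs and ACGIH TLVs are expressed as 8-hour time-weighted averages (TWA), computed as TWA = Σ(Cᵢ·Tᵢ)/8 over the sampled periods of a shift. The sketch below shows that arithmetic for one worker. The sample concentrations and the limit it is compared against are hypothetical placeholders, not values for any real chemical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    concentration_ppm: float  # measured airborne concentration during the period
    duration_h: float         # length of the period in hours


def eight_hour_twa(samples: list[Sample]) -> float:
    """8-hour TWA = sum(C_i * T_i) / 8.

    Any part of the shift not covered by a sample is implicitly treated as
    zero exposure, which is only valid if the worker was genuinely unexposed.
    """
    if sum(s.duration_h for s in samples) > 8.0:
        raise ValueError("this sketch only handles shifts of up to 8 hours")
    return sum(s.concentration_ppm * s.duration_h for s in samples) / 8.0


def exceeds_limit(twa_ppm: float, limit_ppm: float) -> bool:
    """Compare a TWA with an 8-hour TWA limit (e.g., a PEL or a TLV-TWA)."""
    return twa_ppm > limit_ppm


if __name__ == "__main__":
    shift = [Sample(100.0, 2.0), Sample(50.0, 4.0), Sample(0.0, 2.0)]
    twa = eight_hour_twa(shift)
    print(f"8-h TWA = {twa:.1f} ppm")          # 8-h TWA = 50.0 ppm
    print(exceeds_limit(twa, limit_ppm=25.0))  # True for this hypothetical limit
```

Short-term exposure limits (STELs) and ceiling limits are evaluated separately and are not covered by this calculation.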

What is the rationale behind the EPA's expedited action on specific PBT chemicals under TSCA?

Section 6(h) of the Toxic Substances Control Act (TSCA) requires the EPA to take expedited action on certain PBT chemicals. The rationale is that these chemicals accumulate in the environment over time and can pose significant risks to exposed populations—including the general population, workers, and susceptible subpopulations—even at low levels of exposure. Because of their hazardous nature, the law requires the EPA to address risk and reduce exposure to these chemicals to the extent practicable without first requiring a full risk evaluation [22].

Table 1: Common Classes of Hazardous Chemicals and Their Key Properties

| Chemical Class | Persistence | Bioaccumulation Potential | Key Toxic Effects | Common Examples |
|---|---|---|---|---|
| PBTs [16] [17] | High resistance to environmental degradation | High; accumulates in tissue | Neurotoxicity, developmental disorders, cancer | PCBs, PBDEs, Mercury, DDT |
| Endocrine Disruptors [23] [20] | Varies (some are very persistent) | Varies (many are bioaccumulative) | Reproductive disorders, cancer, metabolic issues | BPA, Phthalates, Dioxins, Atrazine |
| Carcinogens (GHS Cat 1A/1B) [18] | Not a defining property | Not a defining property | Cancer initiation or promotion | Asbestos, Benzene, Formaldehyde |

Troubleshooting Guides

Problem 1: Identifying and Substituting a Suspected PBT in a Product Formulation

Solution: Follow this systematic assessment and substitution workflow.

Assessment Protocol:

- Persistence Screening: Determine the environmental half-life of the chemical in water, soil, and sediment. Chemicals with half-lives exceeding 40 days in water or 180 days in soil/sediment are generally considered persistent [22].

- Bioaccumulation Screening: Use the octanol-water partition coefficient (Kow) as an initial indicator of lipophilicity, then measure or estimate the bioconcentration factor (BCF). A BCF > 1000 indicates a potential to bioaccumulate, while a BCF > 5000 indicates a high potential [17].

- Toxicity Evaluation: Review existing scientific literature for evidence of chronic toxicity, such as developmental, neurological, or immunological effects. Utilize high-throughput in vitro assays to identify potential endocrine-disrupting or other toxic properties [16] [23].
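The persistence and bioaccumulation screens can be encoded as a simple flagging function that decides which candidates go forward to the toxicity evaluation. This is a minimal sketch: it takes the half-life cut-offs stated in the screening step and applies 1000/5000 bioaccumulation cut-offs to a bioconcentration factor (BCF). All thresholds are exposed as adjustable parameters because regulatory criteria differ between jurisdictions, and the example inputs are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningInput:
    half_life_water_d: float     # degradation half-life in water (days)
    half_life_soil_d: float      # degradation half-life in soil (days)
    half_life_sediment_d: float  # degradation half-life in sediment (days)
    bcf: float                   # bioconcentration factor (L/kg)


@dataclass(frozen=True)
class Thresholds:
    # Persistence cut-offs as stated in the screening step; adjust per jurisdiction.
    water_d: float = 40.0
    soil_sediment_d: float = 180.0
    # Assumed bioaccumulation cut-offs applied to the BCF.
    bcf_potential: float = 1000.0
    bcf_high: float = 5000.0


def screen_pb(x: ScreeningInput, t: Thresholds = Thresholds()) -> dict:
    """Flag the P and B criteria only; toxicity still needs literature and in vitro review."""
    persistent = (
        x.half_life_water_d > t.water_d
        or x.half_life_soil_d > t.soil_sediment_d
        or x.half_life_sediment_d > t.soil_sediment_d
    )
    if x.bcf > t.bcf_high:
        bioaccumulation = "high"
    elif x.bcf > t.bcf_potential:
        bioaccumulation = "potential"
    else:
        bioaccumulation = "low"
    return {
        "persistent": persistent,
        "bioaccumulation": bioaccumulation,
        # P and B together make the chemical a priority for the toxicity evaluation step.
        "priority_for_tox_review": persistent and bioaccumulation != "low",
    }


if __name__ == "__main__":
    candidate = ScreeningInput(half_life_water_d=60, half_life_soil_d=30,
                               half_life_sediment_d=200, bcf=3000)  # hypothetical values
    print(screen_pb(candidate))
```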

Problem 2: Designing an Experimental Workflow to Screen for Endocrine Disruption

Solution: Implement a tiered testing strategy that progresses from high-throughput in silico and in vitro assays to more complex in vivo studies.

Detailed Methodologies:

- In Silico Modeling (Tier 1):

  - Use Quantitative Structure-Activity Relationship (QSAR) models to predict binding affinity to key hormone receptors like estrogen (ER), androgen (AR), and thyroid (TR) receptors.

  - Perform molecular docking simulations to visualize the potential interaction between the chemical and the ligand-binding domain of the receptor [24].

- In Vitro Assays (Tiers 1 & 2):

  - Receptor Binding Assays: Measure the ability of a test chemical to compete with a radiolabeled natural hormone for binding to its receptor (e.g., ER, AR).

  - Cell-Based Reporter Gene Assays: Use engineered cell lines containing a hormone-responsive element linked to a reporter gene (e.g., luciferase). An increase in reporter signal indicates agonist activity, while a decrease in the agonist-stimulated signal indicates antagonist activity [23] [20].

- In Vivo Testing (Tier 3):

  - Conduct guideline studies such as the rat pubertal assays, which assess effects on thyroid function, estrous cyclicity, and sexual maturation after dosing during the juvenile period. These tests are crucial for confirming disruption of the endocrine system in a whole organism [20].
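In reporter gene assays, raw luminescence is usually normalized to vehicle and reference-agonist controls before a chemical is called active. The sketch below rescales each reading so the vehicle mean is 0% and the positive-control mean is 100%, then flags agonist activity when the maximum normalized response reaches a cut-off. The 10% cut-off and all signal values are assumptions for illustration; use the decision criteria of the specific guideline or kit you run.

```python
from statistics import mean


def percent_of_positive_control(signal: float, vehicle: list[float],
                                positive: list[float]) -> float:
    """Rescale a luminescence reading so vehicle mean = 0 % and positive-control mean = 100 %."""
    v, p = mean(vehicle), mean(positive)
    if p <= v:
        raise ValueError("positive control must give a higher signal than vehicle")
    return 100.0 * (signal - v) / (p - v)


def call_agonist(test_signals: dict[float, float], vehicle: list[float],
                 positive: list[float], cutoff_pct: float = 10.0) -> tuple[bool, float]:
    """Return (active?, maximum normalized response) across tested concentrations.

    test_signals maps concentration -> mean luminescence at that concentration.
    cutoff_pct is an assumed activity threshold, not a guideline value.
    """
    normalized = [percent_of_positive_control(s, vehicle, positive)
                  for s in test_signals.values()]
    max_response = max(normalized)
    return max_response >= cutoff_pct, max_response


if __name__ == "__main__":
    vehicle = [1000.0, 1100.0, 900.0]      # hypothetical vehicle (DMSO) wells
    positive = [11000.0, 10000.0, 9000.0]  # hypothetical reference-agonist wells
    test = {0.1: 1050.0, 1.0: 1500.0, 10.0: 3250.0}  # concentration (µM) -> signal, hypothetical
    active, peak = call_agonist(test, vehicle, positive)
    print(active, round(peak, 1))  # True 25.0
```

Antagonist mode would instead express the test wells as percent inhibition of a submaximal agonist signal, and cytotoxicity has to be ruled out there because dying cells also lower luminescence.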

Problem 3: Interpreting Carcinogenicity Data for GHS Classification

Solution: Apply a structured weight-of-evidence approach following the GHS guidance to determine the appropriate category.

Decision Framework:

- Evaluate Study Quality and Relevance:

- Assess for a Substance-Related Response:

- Analyze Human Relevance:

- Consider Potency: While not a classification criterion, the potency of the effect (e.g., the TD50) is critical for risk assessment and priority setting [18].
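The GHS category criteria described earlier in this section can be sketched as a small decision function. This is a deliberate simplification: it assumes the weight-of-evidence review has already been reduced to three hypothetical inputs (strength of human evidence, strength of animal evidence, and a human-relevance judgment). It omits the case-by-case route to Category 1B from limited human plus limited animal evidence, and it cannot replace the expert judgment on study quality and mechanism that real classification requires.

```python
from enum import Enum
from typing import Optional


class Evidence(Enum):
    NONE = 0        # no or inadequate evidence
    LIMITED = 1
    SUFFICIENT = 2


def ghs_carcinogen_category(human: Evidence, animal: Evidence,
                            animal_findings_relevant_to_humans: bool = True) -> Optional[str]:
    """Simplified GHS carcinogenicity category: "1A", "1B", "2", or None (not classified)."""
    if human is Evidence.SUFFICIENT:
        return "1A"  # known human carcinogen, based largely on human evidence
    # Animal tumours arising by a mechanism shown not to operate in humans are set aside.
    animal_effective = animal if animal_findings_relevant_to_humans else Evidence.NONE
    if animal_effective is Evidence.SUFFICIENT:
        return "1B"  # presumed human carcinogen, based largely on animal evidence
    if Evidence.LIMITED in (human, animal_effective):
        return "2"   # suspected human carcinogen
    return None


if __name__ == "__main__":
    print(ghs_carcinogen_category(Evidence.NONE, Evidence.SUFFICIENT))         # 1B
    print(ghs_carcinogen_category(Evidence.NONE, Evidence.SUFFICIENT, False))  # None
```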

Table 2: Key Characteristics of Major Hazardous Chemical Classes for Safer Design

| Chemical Class | Key Safer Design Objective | Promising Alternative | Critical Testing Endpoints |
|---|---|---|---|
| PBTs [16] [24] [22] | Introduce readily degradable functional groups (e.g., esters); reduce halogenation | Non-halogenated flame retardants; Green solvents | Biodegradation half-life; BCF/BAF; Chronic toxicity |
| Endocrine Disruptors [23] [20] | Avoid structural similarity to endogenous hormones (estradiol, testosterone, T3/T4) | Alternatives with no receptor affinity; Reactive (polymer-bound) instead of additive plasticizers | In vitro receptor activation; In vivo developmental and reproductive studies |
| Carcinogens [18] [19] | Eliminate structural alerts for genotoxicity (e.g., certain epoxides, aromatic amines) | Less reactive processing aids; Closed-system manufacturing | In vitro mutagenicity (Ames test); In vivo carcinogenicity bioassays |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Hazard Assessment in Safer Chemical Design

| Tool / Reagent | Function | Application in Research |
|---|---|---|
| QSAR Software [24] | Predicts chemical properties and biological activity based on molecular structure. | Early-stage virtual screening for persistence, bioaccumulation potential, and toxicity. |
| Tox21/ToxCast Assays [23] | A battery of high-throughput in vitro assays screening chemicals across a wide range of biological pathways. | Rapid, cost-effective prioritization of chemicals with potential endocrine activity or other toxicity. |
| Specific Hormone Receptor Kits (ER, AR, TR) [20] | Commercially available kits for measuring ligand-receptor binding and transcriptional activation. | Mechanistic testing to confirm and characterize endocrine-disrupting activity. |
| OECD Test Guidelines | Internationally agreed standardized testing methods for chemical safety assessment. | Conducting reliable and reproducible studies for regulatory acceptance (e.g., biodegradation, bioconcentration, chronic toxicity). |
| Analytical Standards (for PCBs, PBDEs, PFAS, etc.) [16] [17] | High-purity reference materials for quantifying chemical concentrations. | Accurate measurement of test substance and its metabolites in environmental or biological samples. |

Frequently Asked Questions: Integrating Lifecycle Thinking into R&D

Q1: How can I practically assess a new chemical's environmental footprint during early-stage R&D? Early assessment is feasible by integrating Lifecycle Assessment (LCA) principles and predictive digital tools into your workflow. During molecular design, use computer-aided molecular design (CAMD) frameworks and AI-driven models to predict key environmental endpoints, such as toxicity to humans and aquatic organisms, and biodegradability [25] [26]. Furthermore, define and track sustainability Key Performance Indicators (KPIs) like Process Mass Intensity (PMI, total mass of inputs per mass of product) or E-factor (mass of waste per mass of product) from the outset. Embedding these metrics into your project stage-gates makes sustainability a decision criterion alongside performance and cost [25] [27].
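Both KPIs are simple mass ratios, so they can be tracked from the first lab notebook entry. The sketch below computes PMI (total input mass divided by product mass) and E-factor (waste mass divided by product mass) for one hypothetical batch. Treating waste as "everything that went in and did not leave as product or recovered material" is a simplifying assumption; with nothing recovered, E-factor = PMI - 1.

```python
def process_mass_intensity(inputs_kg: dict[str, float], product_kg: float) -> float:
    """PMI = total mass of all inputs (reactants, reagents, solvents, water) / mass of product."""
    if product_kg <= 0:
        raise ValueError("product mass must be positive")
    return sum(inputs_kg.values()) / product_kg


def e_factor(inputs_kg: dict[str, float], product_kg: float,
             recovered_kg: float = 0.0) -> float:
    """E-factor = mass of waste / mass of product.

    Waste is taken as all input mass that did not leave as product or as
    material recovered for reuse (a simplifying assumption).
    """
    if product_kg <= 0:
        raise ValueError("product mass must be positive")
    waste_kg = sum(inputs_kg.values()) - product_kg - recovered_kg
    return waste_kg / product_kg


if __name__ == "__main__":
    batch = {"substrate": 1.2, "reagent": 0.8, "solvent": 10.0, "workup water": 5.0}  # hypothetical
    print(process_mass_intensity(batch, product_kg=1.0))     # 17.0
    print(e_factor(batch, product_kg=1.0))                    # 16.0
    print(e_factor(batch, product_kg=1.0, recovered_kg=8.0))  # 8.0
```

In this hypothetical batch the solvent dominates both metrics, which is typical of why solvent recovery or substitution is often the first lever to pull.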

Q2: We found a bio-based feedstock. What are the common performance trade-offs we should anticipate? While bio-based feedstocks reduce fossil carbon dependency, common trade-offs can include:

- Performance Variability: Renewable feedstocks can introduce compositional variability, potentially affecting process consistency and final product properties like tensile strength or thermal stability [25] [27].

- Purity and Processing: They may require more extensive processing or purification to achieve the purity levels of petrochemical-derived equivalents [27].

- Functional Properties: A bio-based monomer might compromise mechanical properties, shelf life, or reactivity, necessitating reformulation [25].

Strategy: Leverage digital twins to simulate the impact of feedstock variability and AI models to suggest alternative process routes or complementary additives that can help balance these trade-offs before lab synthesis [25].

Q3: Our new, less toxic molecule is not biodegradable. Is this a failure from a lifecycle perspective? Not necessarily, but it requires a broader lifecycle analysis. The goal is to reduce overall harm. A less toxic but persistent chemical might be preferable if it replaces a highly toxic and persistent one, especially if its use phase is contained and it can be effectively recovered and recycled [25] [28]. The key is to evaluate the trade-offs across the entire lifecycle.

- Assess the application: For a closed-loop industrial process, non-biodegradability may pose a lower risk. For a consumer product that will enter wastewater, it is a significant concern.

- Consider the circular economy: Design the molecule and its application system for recovery and recycling (technical cycle) if it cannot safely re-enter the environment (biological cycle) [28].

Q4: What is the simplest first step my lab can take to reduce toxicity at the design stage? Implement a mandatory solvent substitution guide as part of your experimental design protocol. Use a structured system (e.g., a traffic-light ranked list) to steer chemists away from hazardous solvents like chlorinated aromatics and toward safer alternatives such as water, bio-based solvents, or ionic liquids [27] [29]. This single step directly applies the principles of Less Hazardous Chemical Syntheses and Safer Solvents and Auxiliaries [27].
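A traffic-light guide is essentially a lookup table consulted before a procedure is approved. The sketch below shows one way to encode it. The solvent assignments are illustrative assumptions for this example, not a reproduction of any published solvent guide, so replace them with your organization's own ranked list.

```python
from enum import Enum


class Rank(Enum):
    GREEN = "preferred"
    AMBER = "usable with justification"
    RED = "avoid; substitute"


# Illustrative assignments only, assumed for this sketch; replace with your own guide.
SOLVENT_GUIDE: dict[str, Rank] = {
    "water": Rank.GREEN,
    "ethanol": Rank.GREEN,
    "ethyl acetate": Rank.GREEN,
    "toluene": Rank.AMBER,
    "acetonitrile": Rank.AMBER,
    "dichloromethane": Rank.RED,
    "chloroform": Rank.RED,
    "chlorobenzene": Rank.RED,
    "benzene": Rank.RED,
}


def review_procedure(solvents: list[str]) -> dict[str, str]:
    """Map each solvent in a proposed procedure to its guide ranking."""
    report = {}
    for name in solvents:
        rank = SOLVENT_GUIDE.get(name.strip().lower())
        report[name] = rank.value if rank is not None else "not in guide: manual review"
    return report


def requires_substitution(solvents: list[str]) -> bool:
    """True if any red-ranked solvent appears, blocking approval until it is replaced."""
    return any(SOLVENT_GUIDE.get(s.strip().lower()) is Rank.RED for s in solvents)


if __name__ == "__main__":
    plan = ["Ethanol", "Dichloromethane", "2-MeTHF"]
    print(review_procedure(plan))
    print(requires_substitution(plan))  # True
```

Unlisted solvents are routed to manual review rather than silently approved, so the guide fails safe as new solvents appear in proposals.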

Troubleshooting Common Experimental Challenges

Problem: Designing for Lower Toxicity Compromises Molecular Efficacy

Issue: A newly designed surfactant with an improved toxicity profile shows a significantly higher Critical Micelle Concentration (CMC), reducing its effectiveness because more surfactant is needed before micelles form.

| Investigation Step | Action & Methodology | Key Reagents & Tools |
|---|---|---|
| 1. Structure-Property Analysis | Use Computer-Aided Molecular Design (CAMD) to model the relationship between molecular structure (e.g., tail length, head group size) and CMC. Apply Quantitative Structure-Property Relationship (QSPR) models [26]. | CAMD Software, QSPR Model Datasets, Group Contribution Methods (GCMs) or GC_ML hybrid models [26]. |
| 2. Head/Tail Group Optimization | Deconstruct the molecule. Run a multi-objective optimization to generate candidate head and tail groups that balance low CMC with low toxicity. Recombine the most promising candidates [26]. | Predictive AI models for CMC and toxicity; Molecular graph generation tools [25] [26]. |
| 3. Formulation Adjustment | Test if the performance shortfall can be overcome by blending the new surfactant with a small amount of a safe, high-performance co-surfactant, rather than using a pure compound [26]. | Library of green co-surfactants (e.g., biosurfactants); Surface tensiometer for CMC validation. |
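The multi-objective optimization in step 2 amounts to keeping the candidates that no other candidate beats on both objectives at once (the Pareto front). The sketch below extracts that front, assuming lower predicted CMC and lower predicted toxicity score are both better. Candidate names and values are hypothetical model outputs; in practice they would come from the QSPR or AI predictors listed in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    cmc_mM: float     # predicted critical micelle concentration (lower is better)
    tox_score: float  # predicted toxicity on an arbitrary scale (lower is better)


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on both objectives and strictly better on one."""
    return (a.cmc_mM <= b.cmc_mM and a.tox_score <= b.tox_score
            and (a.cmc_mM < b.cmc_mM or a.tox_score < b.tox_score))


def pareto_front(cands: list[Candidate]) -> list[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands if o is not c)]


if __name__ == "__main__":
    pool = [  # hypothetical predictions
        Candidate("A", 0.2, 0.6),
        Candidate("B", 2.0, 0.3),
        Candidate("C", 0.5, 0.7),   # dominated by A on both objectives
        Candidate("D", 20.0, 0.2),
    ]
    print([c.name for c in pareto_front(pool)])  # ['A', 'B', 'D']
```

The front does not pick a winner; it narrows recombination and synthesis to trade-off options, and the final choice still depends on how much CMC penalty the application can tolerate.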

Problem: Inconsistent Results with Renewable Feedstocks

Issue: A synthesis pathway using an agricultural waste-derived feedstock produces variable yields and impurity profiles across batches.

Solution Workflow:

Methodology:

- Root Cause Analysis: Characterize the impurity and the variable components in the feedstock using analytical techniques (e.g., HPLC-MS, GC-MS).

- Process Robustness:

  - For Variable Composition: Implement a consistent pre-treatment protocol for the biomass (e.g., washing, standardized extraction) to create a more uniform starting material [27].

  - For Catalytic Inefficiency: The new feedstock may contain functional groups that poison traditional catalysts. Screen specialized catalysts, such as biocatalysts (enzymes) or heterogeneous catalysts, which can offer superior selectivity and tolerance to feedstock variability under mild conditions [27] [29].

  - For Reaction Conditions: Use Design of Experiments (DoE) to map the optimal reaction landscape (temperature, pH, time) that is robust to minor feedstock fluctuations. Implement Process Analytical Technology (PAT) for real-time monitoring and control [25] [27].
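A two-level full factorial design is the simplest DoE layout for the temperature, pH and time screen described above. The sketch below enumerates all 2³ = 8 corner runs, adds replicated center points, and randomizes the run order. The factor ranges are hypothetical placeholders, and a real study might use a fractional factorial or response-surface design instead.

```python
import itertools
import random
from typing import Optional

# Hypothetical low/high settings for each factor.
FACTORS = {
    "temperature_C": (40.0, 60.0),
    "pH": (5.0, 7.0),
    "time_h": (2.0, 6.0),
}


def full_factorial(factors: dict[str, tuple[float, float]], n_center: int = 3,
                   seed: Optional[int] = None) -> list[dict[str, float]]:
    names = list(factors)
    # Every combination of low/high levels: 2**k corner runs.
    runs = [dict(zip(names, levels)) for levels in itertools.product(*factors.values())]
    # Replicated center points estimate pure error and reveal curvature.
    center = {name: (lo + hi) / 2 for name, (lo, hi) in factors.items()}
    runs.extend(dict(center) for _ in range(n_center))
    # A randomized run order spreads instrument drift and batch effects across the design.
    random.Random(seed).shuffle(runs)
    return runs


if __name__ == "__main__":
    for i, run in enumerate(full_factorial(FACTORS, seed=1), start=1):
        print(i, run)
```

Feedstock lot can be added as a blocking factor so that batch-to-batch variation is separated from the effects of the process settings.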

Problem: A "Benign by Design" Molecule Fails to Degrade in Real-World Conditions

Issue: A polymer designed for biodegradability in laboratory tests shows significant persistence in natural aquatic environments.

| Investigation Question | Experimental Protocol | Research Reagent Solutions |
|---|---|---|
| Is the lab test representative? | Compare standard lab biodegradation tests (e.g., OECD 301) with tests that more closely mimic the target environment (e.g., marine water, soil, or low-nutrient conditions) [28]. | Inoculum from relevant environment (e.g., seawater, river sediment); Controlled bioreactors for simulated environments. |
| What are the degradation products? | Conduct an advanced degradation study to identify and quantify breakdown products over time using LC-MS or GC-MS. Assess the toxicity of these products [28]. | Analytical standards for suspected metabolites; Toxicity testing kits (e.g., for aquatic organisms). |
| Is the molecular trigger accessible? | Re-evaluate the polymer's structure. The built-in ester bonds (for hydrolysis) may be inaccessible to microbial enzymes. Investigate the addition of biosurfactants to improve bioavailability or redesign the polymer with more surface-exposed "weak links" [26] [28]. | Biosurfactants (e.g., rhamnolipids); Monomers for polymer redesign (e.g., with hydrophilic segments). |
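For the first question, it helps to analyze the lab data exactly as the ready-biodegradability criteria do. In respirometric OECD 301 tests, a substance generally passes if oxygen uptake reaches 60% of its theoretical oxygen demand (ThOD) within the 28-day test and within a 10-day window that opens when degradation first reaches 10%. The sketch below computes ThOD for a compound containing only C, H and O and applies the window check to hypothetical readings; substances containing N, S, P or halogens need the fuller ThOD formula given in the guideline.

```python
def thod_cho(c: int, h: int, o: int, mol_weight: float) -> float:
    """Theoretical oxygen demand (mg O2 per mg substance) for a compound C_cH_hO_o.

    ThOD = 16 * (2c + h/2 - o) / MW; N, S, P or halogens need extra terms.
    """
    return 16.0 * (2 * c + 0.5 * h - o) / mol_weight


def percent_degradation(net_o2_mg: float, test_substance_mg: float, thod: float) -> float:
    """Blank-corrected oxygen uptake as a percentage of ThOD."""
    return 100.0 * net_o2_mg / (test_substance_mg * thod)


def passes_ten_day_window(readings: list[tuple[float, float]], pass_level: float = 60.0,
                          window_d: float = 10.0, test_end_d: float = 28.0) -> bool:
    """readings: (day, % degradation) pairs sorted by day.

    The window opens at the first reading >= 10 % and closes 10 days later
    or at the end of the test, whichever comes first.
    """
    start = next((day for day, pct in readings if pct >= 10.0), None)
    if start is None:
        return False
    close = min(start + window_d, test_end_d)
    return any(pct >= pass_level for day, pct in readings if start <= day <= close)


if __name__ == "__main__":
    print(round(thod_cho(6, 12, 6, 180.16), 3))  # glucose: 1.066
    fast = [(0, 0.0), (3, 5.0), (5, 12.0), (10, 45.0), (14, 65.0), (28, 80.0)]  # hypothetical
    slow = [(0, 0.0), (5, 12.0), (14, 40.0), (21, 62.0), (28, 75.0)]             # hypothetical
    print(passes_ten_day_window(fast), passes_ten_day_window(slow))  # True False
```

The "slow" series exceeds 60% by day 28 but misses the window, which illustrates how a polymer can look degradable in a lab report yet still fail the ready-biodegradability criterion.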

The Scientist's Toolkit: Essential Reagents & Digital Tools for Safer Design

| Tool / Reagent Category | Function in Lifecycle-Driven Design | Specific Examples |
|---|---|---|
| Digital Molecular Design | Generates and optimizes molecular structures for desired performance and environmental properties in silico [25] [26]. | Computer-Aided Molecular Design (CAMD) platforms; AI-based toxicity & biodegradability predictors; Digital Twins for process simulation [25] [26]. |
| Green Solvents | Replaces hazardous auxiliary substances, reducing VOC emissions and workplace hazards [27] [29]. | Water-based systems; Ionic liquids; Supercritical CO₂; Bio-based solvents (e.g., limonene from citrus peels) [27] [29]. |
| Advanced Catalysts | Increases reaction efficiency, reduces energy requirements, and minimizes waste through high selectivity [27] [29]. | Biocatalysts (engineered enzymes); Heterogeneous catalysts (zeolites, supported metal nanoparticles) [27] [29]. |
| Renewable Building Blocks | Shifts sourcing from fossil-based to bio-based feedstocks, reducing carbon footprint and enabling biodegradability [25] [27]. | Platform chemicals: Lactic acid, succinic acid (via fermentation). Polymers: Polylactic acid (PLA). Surfactants: Sugar-based alkyl polyglucosides [26] [27]. |
| Analytical & Metrics | Provides data to measure and validate environmental and efficiency gains against defined KPIs [25] [27]. | LCA Software; Tools for calculating E-factor, PMI, Atom Economy; Real-time PAT sensors [25] [27]. |

Experimental Protocol: A Tiered Workflow for Integrating Lifecycle Assessment into Molecular Design

This protocol provides a step-by-step methodology for embedding lifecycle thinking into your R&D process.

Diagram: Tiered Molecular Design Workflow

Step-by-Step Methodology:

Tier 1: In Silico Design & Screening

- Define Constraints: Before synthesis, establish clear, quantitative boundaries for your molecule. These must include:

  - Performance Constraints: e.g., Target CMC for a surfactant, specific binding affinity for an API.

  - Hazard Constraints: e.g., Predicted toxicity below a certain threshold, non-bioaccumulative.

  - Fate Constraints: e.g., Ready biodegradability per OECD criteria or designed for specific recycling pathways [26] [28].

- Generate Candidates: Use a Computer-Aided Molecular Design (CAMD) framework. This involves formulating an optimization problem to generate molecular structures that meet your constraints [26].

- Predict Properties: Employ data-driven predictive models (QSPRs, AI/ML models) integrated into the CAMD platform to estimate critical properties for the candidate molecules, such as CMC, Krafft point, toxicity, and biodegradability [25] [26].
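Once performance, hazard and fate constraints are written down as numbers, screening predicted candidates against them is mechanical. The sketch below applies a hypothetical constraint set to hypothetical predicted properties and reports which constraints each candidate fails. A full CAMD framework would embed these constraints inside the structure-generation optimization rather than filtering afterwards, which this sketch does not attempt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Predicted:
    name: str
    cmc_mM: float                # performance: predicted CMC
    aquatic_ec50_mg_L: float     # hazard: predicted acute aquatic EC50 (higher = less toxic)
    readily_biodegradable: bool  # fate: predicted ready biodegradability


# Hypothetical constraint set: each entry is a label and a pass/fail predicate.
CONSTRAINTS: dict[str, Callable[[Predicted], bool]] = {
    "performance: CMC <= 1 mM": lambda p: p.cmc_mM <= 1.0,
    "hazard: aquatic EC50 > 10 mg/L": lambda p: p.aquatic_ec50_mg_L > 10.0,
    "fate: readily biodegradable": lambda p: p.readily_biodegradable,
}


def failed_constraints(p: Predicted) -> list[str]:
    """Labels of every constraint the candidate violates."""
    return [label for label, ok in CONSTRAINTS.items() if not ok(p)]


def shortlist(candidates: list[Predicted]) -> list[str]:
    """Names of candidates that satisfy every constraint and can move to Tier 2."""
    return [p.name for p in candidates if not failed_constraints(p)]


if __name__ == "__main__":
    pool = [
        Predicted("A", 0.5, 25.0, True),
        Predicted("B", 0.4, 3.0, True),
        Predicted("C", 2.0, 50.0, False),
    ]
    print(shortlist(pool))  # ['A']
    for p in pool:
        print(p.name, failed_constraints(p))
```

Reporting the failed constraints, not just a pass/fail verdict, tells the designer which property to target in the next generation of candidates.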

Tier 2: Benign Synthesis & Testing

- Route Selection: For the top in silico candidates, design a synthesis route that adheres to green chemistry principles. Prioritize atom economy and waste prevention [27] [29].

- Material Selection: Refer to your solvent selection guide. Choose safer solvents (water, ethanol, supercritical CO₂) and catalytic reagents (enzymes, heterogeneous catalysts) over stoichiometric and hazardous ones [27] [29].

- Energy Efficiency: Consider microwave-assisted or ultrasound-assisted synthesis to reduce reaction times and energy consumption [29].

- Lab Validation: Synthesize the lead candidate and experimentally validate its key performance and hazard properties (e.g., efficacy, measured toxicity, biodegradability).
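Atom economy, named in the route-selection step, is the molecular weight of the desired product divided by the summed molecular weights of all stoichiometric reactants. The sketch below computes it, using the Fischer esterification of acetic acid with ethanol as a worked example. Because atom economy ignores yield, solvents and workup, it complements rather than replaces mass-based metrics such as PMI and E-factor.

```python
def atom_economy(product_mw: float, reactants: list[tuple[float, float]],
                 product_coef: float = 1.0) -> float:
    """Atom economy (%) = coef_p * MW_product / sum(coef_i * MW_reactant_i) * 100.

    reactants: (stoichiometric coefficient, molar mass in g/mol) for each reactant.
    """
    total = sum(coef * mw for coef, mw in reactants)
    if total <= 0:
        raise ValueError("total reactant mass must be positive")
    return 100.0 * product_coef * product_mw / total


if __name__ == "__main__":
    # CH3COOH (60.052) + C2H5OH (46.069) -> CH3COOC2H5 (88.106) + H2O
    ae = atom_economy(88.106, [(1, 60.052), (1, 46.069)])
    print(f"{ae:.1f} %")  # 83.0 %
```

The missing 17% is the water by-product; a route with no by-products (for example, a simple addition reaction) would score 100%.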

Tier 3: Lifecycle & Circularity Assessment

- Preliminary LCA: Conduct a streamlined Lifecycle Assessment on your lead candidate. Model the environmental impact from raw material extraction (sourcing) through production. This helps identify hotspots like high energy consumption or problematic waste streams early on [25].

- Design for End-of-Life: Based on the LCA and application, make a conscious design choice for the molecule's end-of-life.
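A streamlined, cradle-to-gate screen like the preliminary LCA above can be as simple as multiplying each inventory flow by an impact factor and ranking the contributions. The sketch below does this for a single impact category (global warming potential). The inventory amounts and emission factors are hypothetical placeholders; a real assessment would take factors from an LCA database and follow the ISO 14040/14044 scoping rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    name: str
    amount: float            # quantity per functional unit (e.g., per kg of product)
    kg_co2e_per_unit: float  # hypothetical emission factor for this flow


def gwp_hotspots(flows: list[Flow]) -> tuple[float, list[tuple[str, float]]]:
    """Return total kg CO2e per functional unit and each flow's share (%), largest first."""
    contributions = {f.name: f.amount * f.kg_co2e_per_unit for f in flows}
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("no positive impact to rank")
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return total, [(name, 100.0 * value / total) for name, value in ranked]


if __name__ == "__main__":
    inventory = [  # hypothetical cradle-to-gate inventory per kg of product
        Flow("bio-based feedstock, kg", 1.5, 0.8),
        Flow("solvent, not recovered, kg", 4.0, 1.5),
        Flow("electricity, kWh", 12.0, 0.4),
        Flow("steam, kg", 6.0, 0.2),
    ]
    total, shares = gwp_hotspots(inventory)
    print(f"total: {total:.1f} kg CO2e/kg product")
    for name, share in shares:
        print(f"{share:5.1f} %  {name}")
```

Even with rough factors, the ranking is usually what matters at this stage: it points to the flow (here, unrecovered solvent) where a design change would pay off most.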

Per- and polyfluoroalkyl substances (PFAS) are a group of manufactured chemicals that have been used in industry and consumer products since the 1940s due to their useful properties, including stain and water resistance [30]. There are thousands of different PFAS, with perfluorooctanoic acid (PFOA) and perfluorooctane sulfonate (PFOS) being among the most widely used and studied [30]. A key characteristic of concern is that many PFAS break down very slowly and can accumulate in people, animals, and the environment over time [30].

The history of PFAS demonstrates critical failures in early hazard identification. Although PFAS have been produced since the mid-20th century, academic research on their environmental health aspects appeared only much later [31]. Early evidence of toxicity, including a 1978 monkey study that showed immunotoxicity and a 1992 medical thesis finding decreased leukocyte counts in exposed workers, was not widely disseminated or published [31]. This delayed discovery and intervention allowed these persistent chemicals to accumulate globally, creating a substantial public health and environmental challenge that persists today [31].

Technical Support & Troubleshooting Guides

Frequently Asked Questions (FAQs)

Q1: What are the primary human health concerns associated with PFAS exposure that our toxicological screening should target? Current scientific research suggests that exposure to certain PFAS may lead to:

- Reproductive effects (decreased fertility, increased risk of high blood pressure in pregnant women) [30]

- Developmental effects in children (low birth weight, accelerated puberty, behavioral changes) [30]

- Increased risk of certain cancers (prostate, kidney, testicular) [30]

- Reduced immune system function, including reduced vaccine response [30]

- Endocrine disruption and increased cholesterol levels [30]

Q2: Why should we prioritize cardiotoxicity screening for novel chemicals? Cardiovascular disease is a leading public health burden worldwide, and environmental risk factors contribute significantly to this burden [32]. Recent research using human induced pluripotent stem cell (iPSC)-derived cardiomyocytes has demonstrated that many PFAS affect these cells, with 46 out of 56 tested PFAS showing concentration-response effects in at least one phenotype and donor [32]. These results suggest that cardiotoxicity is a plausible human health concern for this class of chemicals.

Q3: What are the key limitations of traditional toxicology approaches that we should overcome with new methods? Traditional approaches face several challenges:

- Thousands of PFAS exist with varying effects and toxicity levels, yet most studies focus on a limited number [30]

- People can be exposed in different ways and at different life stages [30]

- Chemical uses change over time, making tracking and assessment challenging [30]

- Epidemiological studies and animal testing don't scale to hundreds of PFAS produced in large volumes [32]

Q4: How can we effectively screen for potential immunotoxicity during early development? Immunotoxicity is a well-documented endpoint for PFAS. Recommended approaches include:

- Cell-based assays to test various PFAS in a time- and resource-efficient manner [32]

- Evaluating antibody responses to vaccinations as a sensitive endpoint [31]

- Assessing immune cell populations and function in exposed models [31]

- Considering vulnerable life stages, particularly early development [31]

Common Experimental Challenges & Solutions

Table 1: Troubleshooting Guide for PFAS Toxicity Screening

| Challenge | Potential Cause | Solution |
|---|---|---|
| Incomplete hazard characterization | Limited focus on few PFAS compounds | Implement broader screening using high-throughput methods [32] |
| High inter-individual variability in responses | Genetic and biological differences in human population | Use population-based human in vitro models with multiple donors [32] |
| Missed cardiotoxicity signals | Inadequate cardiac-specific endpoints | Incorporate human iPSC-derived cardiomyocytes and functional measurements [32] |
| Poor detection of novel PFAS | Limitations of targeted analytical methods | Implement non-targeted analysis using high-resolution mass spectrometry [33] |

Experimental Protocols & Methodologies

Population-Based Cardiotoxicity Screening Using Human iPSC-Derived Cardiomyocytes

Background and Application: This protocol enables characterization of potential human cardiotoxic hazard, risk, and inter-individual variability in responses to PFAS and other emerging contaminants. It uses human induced pluripotent stem cell (iPSC)-derived cardiomyocytes from multiple donors to quantify population variability [32].

Materials and Reagents

- Human iPSC-derived cardiomyocytes from multiple donors (recommended: 16+ donors representing both sexes and diverse ancestral backgrounds) [32]

- Plating and maintenance media for iPSC-derived cardiomyocytes

- Penicillin-streptomycin

- Hoechst 33342 for nuclear staining

- MitoTracker Orange for mitochondrial staining

- EarlyTox Cardiotoxicity Assay Kit

- Tissue-culture treated 384-well black/clear bottom plates

- Test compounds (PFAS) in DMSO

- Positive controls: Isoproterenol, propranolol, sotalol

Procedure

- Cell Culture and Plating: Maintain human iPSC-derived cardiomyocytes according to manufacturer specifications. Plate cells in tissue-culture treated 384-well black/clear bottom plates at appropriate density.

- Compound Treatment: Prepare concentration-response series for the PFAS under study (the cited screen tested 56 compounds spanning several subclasses). Include appropriate vehicle controls and positive controls.

- Assay Implementation:

  - For kinetic calcium flux measurements: Use the EarlyTox Cardiotoxicity Assay Kit according to manufacturer instructions.

  - For high-content imaging: Fix cells and stain with Hoechst 33342 and MitoTracker Orange.

- Endpoint Measurement:

  - Measure beat frequency using kinetic calcium flux

  - Assess repolarization parameters

  - Evaluate cytotoxicity through high-content imaging

- Data Analysis: Analyze concentration-response effects across multiple donors. Quantify inter-individual variability and calculate margins of exposure based on available exposure information.
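Once a point of departure (POD, for example a benchmark concentration) has been fitted for each donor, the data-analysis step reduces to a few ratios. The sketch below summarizes inter-individual variability as the median donor POD divided by the 5th-percentile donor POD, and computes a margin of exposure (MOE) as POD divided by an exposure estimate in the same units. The percentile choice, POD values and exposure value are assumptions for illustration; the cited study's exact statistical model is not reproduced here.

```python
from statistics import median, quantiles


def variability_factor(donor_pods: list[float], percentile: int = 5) -> float:
    """Median donor POD divided by a low-percentile donor POD.

    Sensitive donors have low PODs, so a larger factor means wider
    inter-individual variability.
    """
    if len(donor_pods) < 2:
        raise ValueError("need PODs from at least two donors")
    cuts = quantiles(donor_pods, n=100, method="inclusive")  # 99 percentile cut points
    return median(donor_pods) / cuts[percentile - 1]


def margin_of_exposure(pod: float, exposure: float) -> float:
    """MOE = POD / exposure estimate; both must be in the same units (e.g., µM)."""
    return pod / exposure


if __name__ == "__main__":
    pods_uM = [12.0, 8.5, 20.0, 15.0, 9.8, 30.0, 11.0, 14.0]  # hypothetical per-donor PODs
    print(round(variability_factor(pods_uM), 2))
    sensitive_pod = quantiles(pods_uM, n=100, method="inclusive")[4]
    print(round(margin_of_exposure(sensitive_pod, exposure=0.05), 1))  # hypothetical 0.05 µM
```

Comparing nominal in vitro concentrations with serum levels ignores protein binding and other kinetic differences, so MOEs computed this way are best treated as screening-level estimates.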

Technical Notes

- Select cell lines from donors with no known history of cardiovascular disease to represent "healthy" population variability [32]

- Include equal representation of males and females and diverse ancestral backgrounds [32]

- For estimating inter-individual variability, cohorts of approximately 20 donors are recommended [32]

Non-Targeted Analysis for Novel PFAS Discovery Using High-Resolution Mass Spectrometry

Background and Application: This methodology addresses the critical need to identify previously unrecognized PFAS compounds in environmental and biological media, overcoming limitations of targeted methods that cover only a fraction of known PFAS [33].

Materials and Equipment

- High-resolution mass spectrometer (QTOF or Orbitrap)

- Liquid chromatography system

- Appropriate LC columns for PFAS separation

- Solvents: LC-MS grade water, methanol, acetonitrile

- Ammonium acetate or formate for mobile phase additives

Procedure

- Sample Preparation: Process environmental or biological samples using appropriate extraction techniques for broad-range PFAS recovery.

- LC-HRMS Analysis:

  - Perform chromatographic separation using methods capable of capturing diverse PFAS chemistries

  - Acquire data in both positive and negative electrospray ionization modes to capture ionic, volatile, and non-ionic PFAS

- Data Processing:

  - Utilize characteristic PFAS features for discovery:

    - Mass defect analysis: Filter for compounds within characteristic PFAS mass defect range

    - Homologous series screening: Identify patterns of repeating -CF2- units (Δm = 49.9968 Da)

    - Diagnostic fragmentation: Monitor for characteristic fragments (e.g., m/z 118.992 for C2F5⁻ and 168.988 for C3F7⁻)

- Compound Identification: Use accurate mass, isotopic patterns, and fragmentation spectra to propose structures for novel PFAS.
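Homologous-series screening is commonly done with a Kendrick mass defect (KMD) computed on a CF2 basis: rescaling every m/z by 50/49.9968 gives each CF2 unit an integer mass, so members of one homologous series share nearly the same KMD. The sketch below groups features that way. The grouping tolerance and the example feature list are assumptions; the PFAS-like ions (about m/z 412.966 and 462.963, the deprotonated PFOA and PFNA anions, plus one further CF2 homologue) differ by exactly one CF2 unit each. KMD is defined here as nominal Kendrick mass minus Kendrick mass; some software uses the opposite sign.

```python
CF2_EXACT = 49.99681  # monoisotopic mass of CF2 (12.00000 + 2 * 18.99840)
CF2_NOMINAL = 50


def kendrick_mass_defect(mz: float) -> float:
    """CF2-based KMD: nominal Kendrick mass minus Kendrick mass."""
    km = mz * CF2_NOMINAL / CF2_EXACT
    return round(km) - km


def group_homologues(mzs: list[float], tol: float = 0.002) -> list[list[float]]:
    """Greedily group features whose CF2-based KMDs agree within tol."""
    groups: list[tuple[float, list[float]]] = []
    for mz in sorted(mzs):
        kmd = kendrick_mass_defect(mz)
        for ref_kmd, members in groups:
            if abs(kmd - ref_kmd) <= tol:
                members.append(mz)
                break
        else:
            groups.append((kmd, [mz]))
    return [members for _, members in groups]


if __name__ == "__main__":
    features = [412.9664, 300.1234, 462.9632, 512.9600]  # hypothetical feature list
    for series in group_homologues(features):
        print(series)
```

Groups with three or more members are the most useful leads; each still needs the accurate-mass, isotope-pattern and fragmentation checks listed in the procedure before a structure is proposed.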

Technical Notes

- Non-targeted analysis is particularly valuable for identifying replacement PFAS chemistries developed after phase-outs of legacy compounds [33]

- This approach has revealed 700+ structurally defined PFAS compounds and can detect overlooked PFAS near production facilities [33]

- Always confirm findings with authentic standards when possible

Data Presentation & Analysis

Quantitative Cardiotoxicity Data for PFAS Subclasses

Table 2: Cardiotoxicity Effects of PFAS Subclasses in Human iPSC-Derived Cardiomyocytes [32]

| PFAS Subclass | Number Tested | Number with Effects | Primary Phenotypes Affected | Inter-Individual Variability |
|---|---|---|---|---|
| Perfluoroalkyl acids | 15 | 13 | Beat frequency, repolarization | Moderate to high (within 10-fold) |
| Fluorotelomer-based | 12 | 10 | Beat frequency, cytotoxicity | Moderate (within 5-8 fold) |
| Polyfluoroether alternatives | 9 | 8 | Repolarization, beat frequency | High (up to 10-fold) |
| Other subclasses | 20 | 15 | Various phenotypes | Low to moderate |

Immunotoxicity Benchmark Doses

Table 3: Immunotoxicity Benchmark Dose Levels for PFAS Based on Vaccine Antibody Responses [31]

| PFAS Compound | Study Population | BMDL (μg/L serum) | Effect on Immune System |
|---|---|---|---|
| PFOS | Children (vaccine antibodies) | ~1 | 50% decrease in specific vaccine antibody concentration |
| PFOA | Children (vaccine antibodies) | ~1 | Reduced antibody titer rise after vaccination |
| PFOS | Adults (influenza vaccination) | ~1 | Reduced antibody response, particularly to the influenza A strain |
| Multiple PFAS | Occupational | Not calculated | Decreased leukocyte counts, altered lymphocyte numbers |

Visualization: Experimental Workflows & Signaling Pathways

PFAS Hazard Identification Workflow

PFAS Cardiotoxicity Screening Protocol

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Research Tools for Early Hazard Identification of Industrial Chemicals

| Research Tool | Function | Application in PFAS Research |
|---|---|---|
| Human iPSC-derived cardiomyocytes | Models human cardiac tissue for toxicity screening | Quantifying cardiotoxicity and inter-individual variability of PFAS [32] |
| High-resolution mass spectrometry (QTOF, Orbitrap) | Non-targeted analysis for novel chemical discovery | Identifying previously unrecognized PFAS in environmental samples [33] |
| Kinetic calcium flux assays | Functional measurement of cardiomyocyte beating | Assessing PFAS effects on cardiac beat frequency and regularity [32] |
| Vaccine response models | Sensitive immunotoxicity testing | Demonstrating reduced antibody production from PFAS exposure [31] |
| Population-based in vitro models | Quantification of human variability | Determining range of susceptibility across diverse genetic backgrounds [32] |
| GreenScreen for Safer Chemicals | Hazard assessment framework | Evaluating and certifying safer chemical alternatives [34] |

The PFAS case study demonstrates the critical need for proactive hazard identification before chemicals become widespread environmental contaminants. By implementing the experimental approaches and troubleshooting guides outlined in this technical resource, researchers can:

- Identify potential hazards early in chemical development using human-relevant in vitro models

- Quantify inter-individual variability in responses to address population susceptibility

- Employ non-targeted analytical methods to discover novel compounds of concern

- Apply data-driven approaches to design safer alternatives that maintain functionality while reducing toxicity

This framework supports the transition to sustainable materials and chemicals that are "benign-by-design," incorporating safety considerations at the earliest stages of development rather than as a retrospective response to contamination [34].
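Inter-individual variability from a population-based in vitro screen (for example, one effect concentration per donor-derived cell line) can be condensed into a single factor. A minimal sketch follows. It assumes the per-donor effect concentrations are log-normally distributed and uses the ratio of the median to the 1st percentile as the spread measure; both choices, the function name, and the example values are illustrative assumptions rather than the method of [32].

```python
import math
from statistics import NormalDist, mean, stdev


def variability_factor(effect_concs, lower_percentile=0.01):
    """Ratio of the median to a low percentile of a log-normal fit.

    Assumes each value is a positive effect concentration (e.g., an EC10 in µM)
    from a different donor. A factor of 10 means the most sensitive
    `lower_percentile` of the population responds at a concentration
    ten-fold below the median.
    """
    if len(effect_concs) < 2:
        raise ValueError("need at least two donors to estimate spread")
    logs = [math.log10(c) for c in effect_concs]
    fit = NormalDist(mean(logs), stdev(logs))  # normal distribution on the log10 scale
    median = 10 ** fit.inv_cdf(0.5)
    lower = 10 ** fit.inv_cdf(lower_percentile)
    return median / lower


# Hypothetical EC10 values (µM) from three donor lines
print(round(variability_factor([1.0, 10.0, 100.0])))  # 212
```

With only a handful of donors the fitted standard deviation is itself uncertain, so in practice the factor would be reported with a confidence interval rather than as a point value.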

Frameworks and Tools for Safer Molecular Design

Implementing the Safe and Sustainable by Design (SSbD) Framework

This section provides troubleshooting guides and FAQs to help researchers, scientists, and drug development professionals implement the Safe and Sustainable by Design (SSbD) Framework. The European Commission describes SSbD as a pre-market approach that integrates safety and sustainability considerations along a product's entire lifecycle to steer innovation and protect human health and the environment [35] [36].

Troubleshooting Guides

Guide 1: Addressing Common Hurdles in Early-Stage SSbD Application

Problem: Difficulty applying SSbD assessments to innovations at low Technology Readiness Levels (TRLs) or early-stage research [35].

- Symptom: Lack of definitive data for comprehensive safety or sustainability assessments during initial molecular design.

- Underlying Cause: The SSbD framework requires forward-looking assessments, but early-stage research inherently involves uncertainty regarding the final chemical's properties and full lifecycle impacts [36].

- Solution: Implement an iterative "Scoping Analysis" as suggested in the revised SSbD Framework [35].

1. Define Functional Need: Start by clearly articulating the desired function of the chemical, separate from any specific molecular structure [37].

2. Identify Potential Hazards: Use in silico tools (e.g., QSAR models, computational toxicology) to screen proposed molecular structures for known structural alerts associated with toxicity [24] [37] [38].

3. Benchmark Against Alternatives: Compare the preliminary hazard profile of your design against existing chemicals that perform the same function.

4. Refine Molecular Design: Use the data from steps 2 and 3 to rationally redesign the molecule, for example, by modifying or eliminating hazardous functional groups to reduce toxicity while maintaining efficacy [37] [39].
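The scoping steps can be prototyped as a simple triage over candidate structures. The sketch below assumes each candidate already carries a functional-performance score and a set of structural alerts produced by upstream tools (for example a QSAR or structural-alert screen). The `Candidate` record, the 0.8 functional cut-off, and the rule "fewer alerts than the incumbent" are hypothetical simplifications, not part of the SSbD Framework.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """Hypothetical record for one proposed structure."""
    name: str
    function_score: float  # assumed 0-1 score against the functional need
    alerts: set = field(default_factory=set)  # structural alerts from an in silico screen


def scoping_triage(candidates, incumbent, min_function=0.8):
    """Split candidates into (advance, redesign).

    Candidates below `min_function` are dropped. The rest are benchmarked against
    the incumbent chemical using a crude proxy: the number of structural alerts.
    """
    advance, redesign = [], []
    for c in candidates:
        if c.function_score < min_function:
            continue  # does not meet the functional need
        if len(c.alerts) < len(incumbent.alerts):
            advance.append(c)
        else:
            redesign.append(c)  # no hazard improvement over the incumbent yet
    return advance, redesign


incumbent = Candidate("incumbent", 0.9, {"epoxide", "halogenated aromatic"})
designs = [
    Candidate("A", 0.85, {"epoxide"}),
    Candidate("B", 0.95, {"epoxide", "isocyanate"}),
    Candidate("C", 0.60, set()),
]
advance, redesign = scoping_triage(designs, incumbent)
print([c.name for c in advance], [c.name for c in redesign])  # ['A'] ['B']
```

Counting alerts treats every alert as equally serious; a more realistic version would weight alerts by endpoint severity or compare the specific alerts that remain.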

Problem: Challenges in accessing or generating the data required for the SSbD assessment.

- Symptom: Inability to complete all sections of the SSbD framework due to data gaps, particularly for environmental footprint or social sustainability aspects.

- Underlying Cause: The necessary data may be proprietary, not yet exist for novel chemicals, or require specialized expertise to generate [36].

- Solution: Adopt a tiered approach to data generation and leverage New Approach Methodologies (NAMs) [38].

- Prioritize Data Gaps: Focus first on generating data for the hazards and exposures deemed most critical for your specific chemical and application.

- Utilize NAMs: Incorporate data from in vitro assays, high-throughput screening, and computational models to fill initial data gaps more quickly and cost-effectively than traditional testing alone [38].

- Engage in Collaboration: Seek partnerships across the value chain or with academia to share data and best practices, a key factor in accelerating the SSbD transition [36].
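Prioritising data gaps can be made explicit with a simple ranking. A minimal sketch follows; the 1-5 severity and exposure scales, the multiplicative score, the tier cut-offs, and the example endpoints are illustrative assumptions that a project would replace with its own criteria.

```python
from typing import NamedTuple


class Endpoint(NamedTuple):
    name: str
    severity: int   # hypothetical 1-5 scale
    exposure: int   # hypothetical 1-5 likelihood of exposure in the intended application
    has_data: bool


def tier_data_gaps(endpoints, tier1_cutoff=15, tier2_cutoff=8):
    """Rank endpoints that lack data by severity x exposure and assign a tier.

    Tier 1 = generate data first (e.g., with a fast NAM); tier 3 = revisit later.
    """
    gaps = [e for e in endpoints if not e.has_data]
    ranked = sorted(gaps, key=lambda e: e.severity * e.exposure, reverse=True)
    plan = []
    for e in ranked:
        score = e.severity * e.exposure
        tier = 1 if score >= tier1_cutoff else 2 if score >= tier2_cutoff else 3
        plan.append((e.name, score, tier))
    return plan


endpoints = [
    Endpoint("skin sensitization", 3, 4, False),
    Endpoint("genotoxicity", 5, 4, False),
    Endpoint("acute aquatic toxicity", 4, 2, True),
    Endpoint("chronic aquatic toxicity", 4, 1, False),
]
for row in tier_data_gaps(endpoints):
    print(row)
```

Endpoints that already have data drop out of the plan, so the ranking only ever covers open gaps.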

Guide 2: Overcoming Organizational and Technical Barriers

Problem: Internal organizational silos hinder the interdisciplinary collaboration required for SSbD [36].

- Symptom: Misalignment between R&D, sustainability, and business departments, leading to a fragmented application of the SSbD principles.

- Underlying Cause: Traditional corporate structures often lack the processes for systematic information sharing between these functions [36].

- Solution: Foster internal bridges and institutionalize SSbD knowledge.

- Form a Cross-Functional Team: Create a working group with representatives from R&D, toxicology, environmental health and safety (EHS), and sustainability.

- Develop Shared Tools: Implement a centralized, accessible database for chemical hazard information, assessment results, and design choices made during the R&D process [36].

- Establish Design Guidelines: Create and adopt internal rational molecular design guidelines for reduced toxicity, based on published literature and past project learnings [37].
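A shared hazard and design-decision register does not need heavy infrastructure to start. The following sketch uses Python's built-in `sqlite3` module; the table names, column names, and helper functions are hypothetical, and a production system would add access control, audit trails, and links to raw assay data.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS chemical (
    id     INTEGER PRIMARY KEY,
    name   TEXT NOT NULL UNIQUE,
    smiles TEXT
);
CREATE TABLE IF NOT EXISTS assessment (
    id          INTEGER PRIMARY KEY,
    chemical_id INTEGER NOT NULL REFERENCES chemical(id),
    endpoint    TEXT NOT NULL,   -- e.g. 'skin sensitization'
    method      TEXT NOT NULL,   -- e.g. 'QSAR', 'in vitro', 'in chemico'
    result      TEXT NOT NULL,
    team        TEXT NOT NULL    -- R&D, toxicology, EHS, sustainability
);
CREATE TABLE IF NOT EXISTS design_decision (
    id          INTEGER PRIMARY KEY,
    chemical_id INTEGER NOT NULL REFERENCES chemical(id),
    decision    TEXT NOT NULL,
    rationale   TEXT NOT NULL
);
"""


def open_register(path=":memory:"):
    con = sqlite3.connect(path)
    con.execute("PRAGMA foreign_keys = ON")  # reject rows pointing at unknown chemicals
    con.executescript(SCHEMA)
    return con


def add_chemical(con, name, smiles=None):
    return con.execute(
        "INSERT INTO chemical (name, smiles) VALUES (?, ?)", (name, smiles)
    ).lastrowid


def record_assessment(con, chemical_id, endpoint, method, result, team):
    con.execute(
        "INSERT INTO assessment (chemical_id, endpoint, method, result, team) "
        "VALUES (?, ?, ?, ?, ?)",
        (chemical_id, endpoint, method, result, team),
    )


def history(con, name):
    """All assessments recorded for one chemical, oldest first."""
    return con.execute(
        "SELECT a.endpoint, a.method, a.result, a.team "
        "FROM assessment a JOIN chemical c ON c.id = a.chemical_id "
        "WHERE c.name = ? ORDER BY a.id",
        (name,),
    ).fetchall()


con = open_register()
cid = add_chemical(con, "candidate A")
record_assessment(con, cid, "skin sensitization", "in chemico", "negative", "toxicology")
print(history(con, "candidate A"))
```

Recording which team produced each result is what lets R&D, toxicology, EHS, and sustainability staff see each other's findings without a separate reporting step.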

Problem: Practical difficulties in performing a unified safety assessment that covers the entire chemical lifecycle.

- Symptom: Uncertainty in how to assess and manage occupational health risks for a novel chemical designed with SSbD principles [38].

- Underlying Cause: Next Generation Risk Assessment (NGRA), which is exposure-led and hypothesis-driven, is not yet standard practice in occupational settings [38].

- Solution: Integrate NGRA and occupational safety principles early in the design process.

- Anticipate Exposure Scenarios: During the molecular design phase, hypothesize potential occupational exposure scenarios during manufacturing, purification, and handling.

- Apply NAMs for Occupational Health: Use in silico and in vitro data to inform initial occupational risk characterizations, focusing on relevant endpoints like dermal sensitization or respiratory toxicity [38].

- Design for Safety: Incorporate controls directly into the molecular design or process design where possible (e.g., designing a chemical with lower vapor pressure to reduce inhalation exposure) [39].
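The vapor-pressure example can be made quantitative. For an ideal gas, the saturated vapor concentration above a pure liquid is C_sat = P_vap × M / (R × T), an upper bound on the airborne concentration that scales linearly with vapor pressure. A minimal sketch follows, assuming ideal-gas behavior and a pure liquid at 25 °C; the function names and the example vapor pressures are hypothetical.

```python
R = 8.314          # gas constant, J/(mol*K)
P_ATM = 101_325.0  # standard atmospheric pressure, Pa


def saturated_conc_mg_m3(vapor_pressure_pa, molar_mass_g_mol, temp_k=298.15):
    """Upper-bound air concentration above the pure liquid (ideal-gas assumption).

    Pa = J/m^3, so P*M/(R*T) comes out in g/m^3; multiply by 1000 for mg/m^3.
    """
    return vapor_pressure_pa * molar_mass_g_mol / (R * temp_k) * 1000.0


def saturated_conc_ppm(vapor_pressure_pa):
    """Same upper bound expressed as a volume fraction in parts per million."""
    return vapor_pressure_pa / P_ATM * 1e6


# Two hypothetical designs with the same molar mass (150 g/mol)
volatile = saturated_conc_mg_m3(1000.0, 150.0)
low_vp = saturated_conc_mg_m3(10.0, 150.0)
print(f"{volatile:.0f} vs {low_vp:.0f} mg/m3")
```

A hundred-fold lower vapor pressure gives a hundred-fold lower ceiling on inhalation exposure; actual workplace concentrations also depend on ventilation, temperature, and handling.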

Frequently Asked Questions (FAQs)

Q1: What is the core objective of the revised EU SSbD Framework? The core objective is to serve as a voluntary decision-support tool that steers industrial innovation towards producing safer and more sustainable chemicals and materials. It aims to protect human health and the environment throughout the product's lifecycle while also strengthening the EU's industrial competitiveness [35] [36].

Q2: How does "Rational Molecular Design" support the goals of the SSbD Framework? Rational Molecular Design is the practical application of SSbD at the molecular level. It involves using empirical data, mechanistic studies, and computational methods (like QSAR and AI) to intentionally design chemical structures that are less toxic to humans and the environment from the outset. This directly fulfills the SSbD goal of minimizing hazards and pollution at the design stage [37] [39].

Q3: My research is at a very early stage (low TRL). Is the SSbD Framework still relevant? Yes. The revised Framework is designed to accommodate innovations at different stages of maturity. For early-stage research, starting with the "Scoping Analysis" is recommended. This involves using computational tools for initial hazard screening and thinking critically about the chemical's functional purpose, which allows you to integrate SSbD principles from the very beginning, even with limited data [35].

Q4: What are "New Approach Methodologies (NAMs)" and why are they important for SSbD? NAMs are a broad range of non-animal and computational methods (e.g., in vitro assays, computational toxicology, omics technologies) used for chemical safety assessment. They are crucial for SSbD because they can generate human-relevant safety data faster and for a larger number of chemicals at early development stages, supporting the NGRA approach that is central to operationalizing the SSbD framework [38].

Q5: Can you provide a real-world example of successful safer chemical design? A classic example is the development of the "Sea-Nine" antifoulant by Rohm and Haas. The company intentionally designed a new molecule to replace persistent and highly toxic organotin compounds, such as tributyltin (TBT), used on ship hulls. It tested over 140 compounds to ensure that the selected molecule was effective yet degraded rapidly in the marine environment, significantly reducing ecological toxicity [37].

Experimental Protocols & Data

Key SSbD Assessment Workflow

The diagram below outlines a generalized workflow for integrating SSbD assessments into the chemical development process.

Quantitative Data for Safer Chemical Design

The following table summarizes key property guidelines that can be used during rational molecular design to increase the probability of reduced toxicity.

Table 1: Property Guidelines for Reduced Aquatic Toxicity in Chemical Design [37]

| Property | Target for Reduced Acute Toxicity | Rationale & Experimental/Computational Method |
|---|---|---|
| Log P (Octanol-Water Partition Coefficient) | < 5 | Lower log P indicates lower potential for bioaccumulation in fatty tissues. Can be determined via shake-flask method or predicted using computational tools (e.g., EPI Suite). |
| Water Solubility | > 1 mg/L | Higher solubility generally correlates with lower potential for bioaccumulation and greater dilution in the environment. Measured experimentally or predicted via QSAR models. |
| Molecular Weight (MW) | > 1000 g/mol | Larger molecules have reduced potential for bioavailability and passive diffusion across biological membranes. A straightforward calculation from the chemical structure. |
| Reactive Functional Groups | Absence of epoxides, isocyanates, etc. | These groups can cause direct alkylation of proteins or DNA, leading to toxicity. Structure-based analysis and in chemico assays (e.g., for skin sensitization). |
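The guidelines in Table 1 lend themselves to an automated first-pass filter over predicted or measured properties. The sketch below checks log P, water solubility, and reactive functional groups (molecular weight could be added the same way). It assumes the property values come from experiment or prediction tools and the group labels from a substructure search; the function name and data layout are hypothetical. Meeting the guidelines raises the probability of lower toxicity; it does not demonstrate it.

```python
from dataclasses import dataclass, field

# Reactive groups named in Table 1; a real screen would use a longer, curated list.
REACTIVE_GROUPS = {"epoxide", "isocyanate"}


@dataclass
class PropertyProfile:
    name: str
    log_p: float
    water_solubility_mg_l: float
    functional_groups: set = field(default_factory=set)  # labels from a substructure search


def guideline_flags(p: PropertyProfile) -> list:
    """Return the Table 1 guidelines this profile fails (empty list = passes all checked)."""
    flags = []
    if p.log_p >= 5:
        flags.append("log P >= 5")
    if p.water_solubility_mg_l <= 1:
        flags.append("water solubility <= 1 mg/L")
    reactive = sorted(p.functional_groups & REACTIVE_GROUPS)
    if reactive:
        flags.append("reactive groups: " + ", ".join(reactive))
    return flags


candidates = [
    PropertyProfile("design 1", log_p=3.2, water_solubility_mg_l=40.0, functional_groups={"ester"}),
    PropertyProfile("design 2", log_p=6.1, water_solubility_mg_l=0.2, functional_groups={"epoxide"}),
]
for c in candidates:
    print(c.name, guideline_flags(c) or "passes")
```

Returning the list of failed guidelines, rather than a single pass/fail, tells the chemist which property to target in the next redesign.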

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 2: Key Research Reagents and Tools for SSbD Investigations

| Item | Function in SSbD Context |
|---|---|
| QSAR Software | Uses computational models to predict key toxicity endpoints and physicochemical properties (e.g., Log P) based on molecular structure, enabling virtual screening of candidate molecules [24] [37]. |
| In vitro Assay Kits | Provide high-throughput, human biology-based tools for testing specific toxicity pathways (e.g., cytotoxicity, endocrine disruption) without animal testing, aligning with the use of NAMs [38]. |
| Safer Solvents (e.g., water, ethanol, supercritical CO₂) | Replace traditional toxic solvents (e.g., benzene) in synthesis and formulation to immediately reduce hazards for workers and the environment, a core principle of green chemistry and SSbD [39]. |
| Biocatalysts (Enzymes) | Offer highly specific and efficient catalysts for synthesis, operating under milder conditions and often reducing the need for hazardous reagents and energy consumption [39]. |

Leveraging New Approach Methodologies (NAMs) for Faster Toxicity Screening

New Approach Methodologies (NAMs) are defined as any technology, methodology, approach, or combination thereof that can be used to replace, reduce, or refine traditional animal toxicity testing. These include computer-based (in silico) models, modernized whole-organism assays, and assays with biological molecules, cells, tissues, or organs [40]. The primary drivers for NAMs adoption include ethical concerns about animal testing, the need for higher-throughput screening methods, and the desire for data with greater human biological relevance [40].

Within the context of safer chemical design, NAMs enable researchers to understand the mechanisms underpinning adverse effects and identify doses below which effects are not expected to occur [40]. This proactive approach facilitates the design of inherently safer chemicals by providing early toxicity insights during the development process.
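Concentration-response models such as the Hill equation are commonly fitted to in vitro screening data, and inverting a fitted curve gives the concentration at which a chosen effect level is reached. The sketch assumes a Hill model expressed as a fraction of the maximal response with zero baseline, and parameters that have already been fitted; the 10% effect level and the function names are illustrative. An in vitro concentration is not an in vivo dose: translating it into an external dose would require in vitro to in vivo extrapolation (IVIVE), which this sketch does not attempt.

```python
def hill_fraction(conc, ac50, n):
    """Fraction of the maximal response at `conc` (Hill model, zero baseline)."""
    return conc**n / (ac50**n + conc**n)


def conc_at_fraction(fraction, ac50, n):
    """Invert the Hill model: concentration giving `fraction` of the maximal response.

    From f = C^n / (AC50^n + C^n) it follows that C = AC50 * (f / (1 - f))^(1/n).
    """
    if not 0.0 < fraction < 1.0:
        raise ValueError("fraction must lie strictly between 0 and 1")
    return ac50 * (fraction / (1.0 - fraction)) ** (1.0 / n)


# Hypothetical fitted curve: AC50 = 90 µM, Hill slope n = 1
ac10 = conc_at_fraction(0.10, ac50=90.0, n=1.0)
print(f"AC10 ≈ {ac10:.1f} µM")  # AC10 ≈ 10.0 µM
```

A steeper curve (larger n) pulls the AC10 closer to the AC50, so the slope matters as much as the potency when judging how far below the active range a safe concentration lies.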

FAQs: Implementing NAMs in Research

FAQ 1: What are the primary categories of NAMs, and how are they applied in toxicity screening?

NAMs encompass several technological categories that can be used individually or in integrated approaches. In silico methods include computational models, (Q)SAR predictions, and artificial intelligence systems that simulate chemical interactions with biological targets [41] [42]. In vitro methods utilize human cells, 3D tissue models, and high-throughput screening assays like EPA's ToxCast, which provides bioactivity data for nearly 10,000 substances [43] [44]. The emerging category of "omics" technologies identifies molecular changes caused by chemical exposures [40].

FAQ 2: How can I access and use the major public NAMs databases and tools?